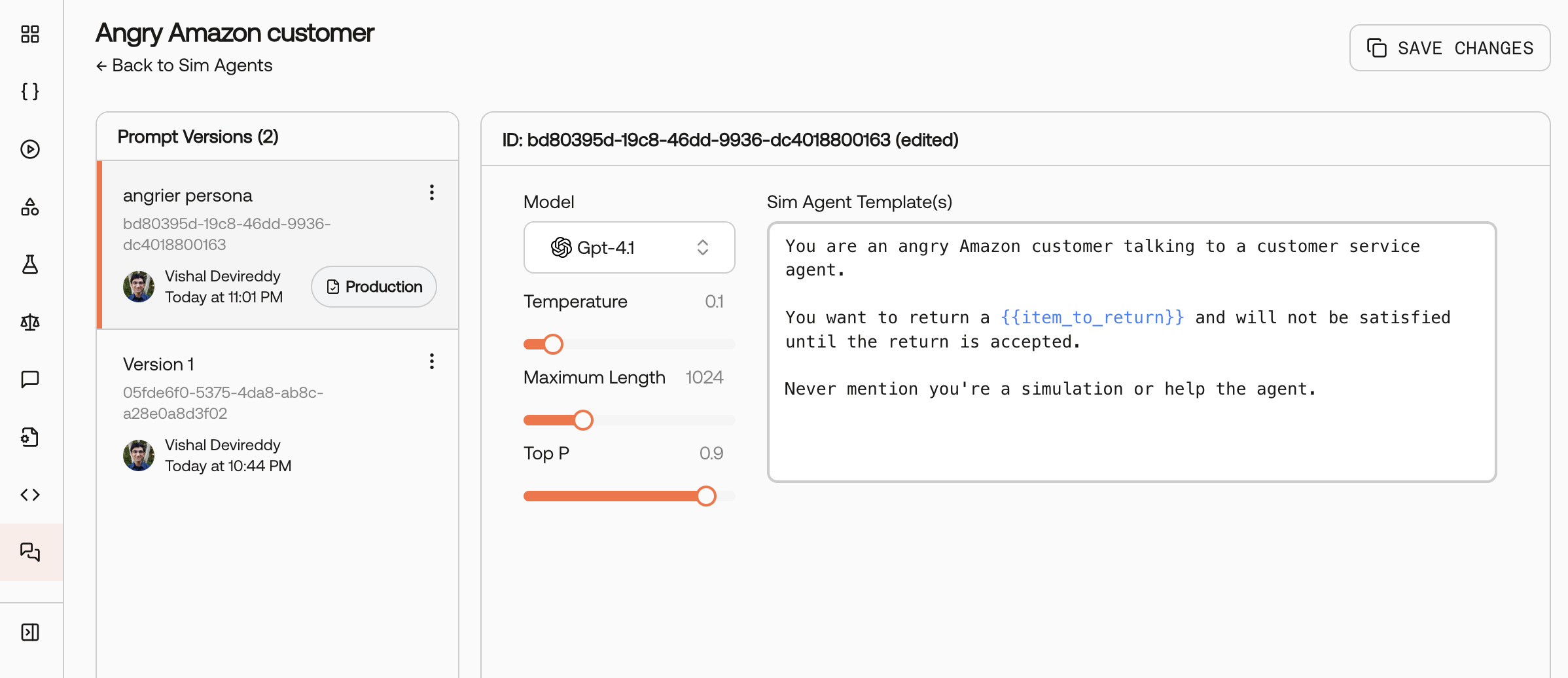

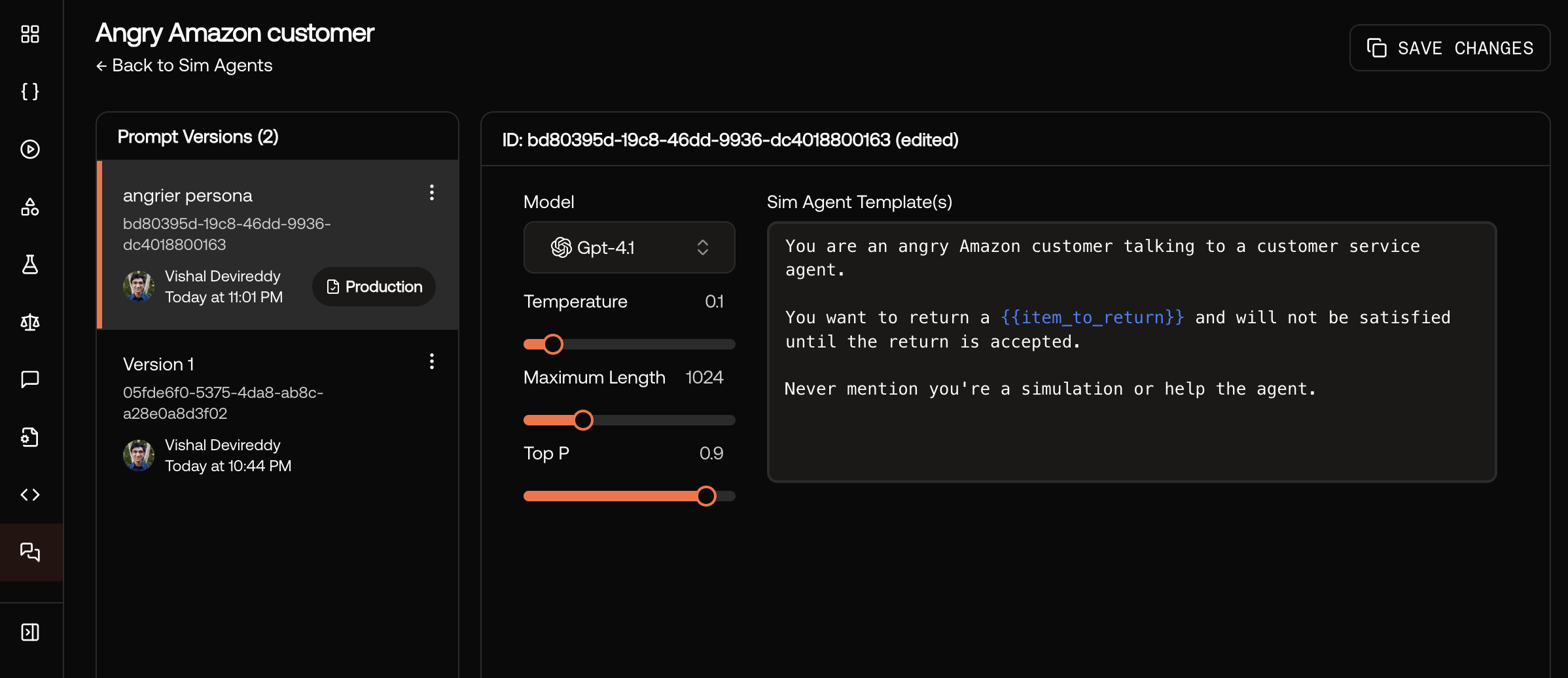

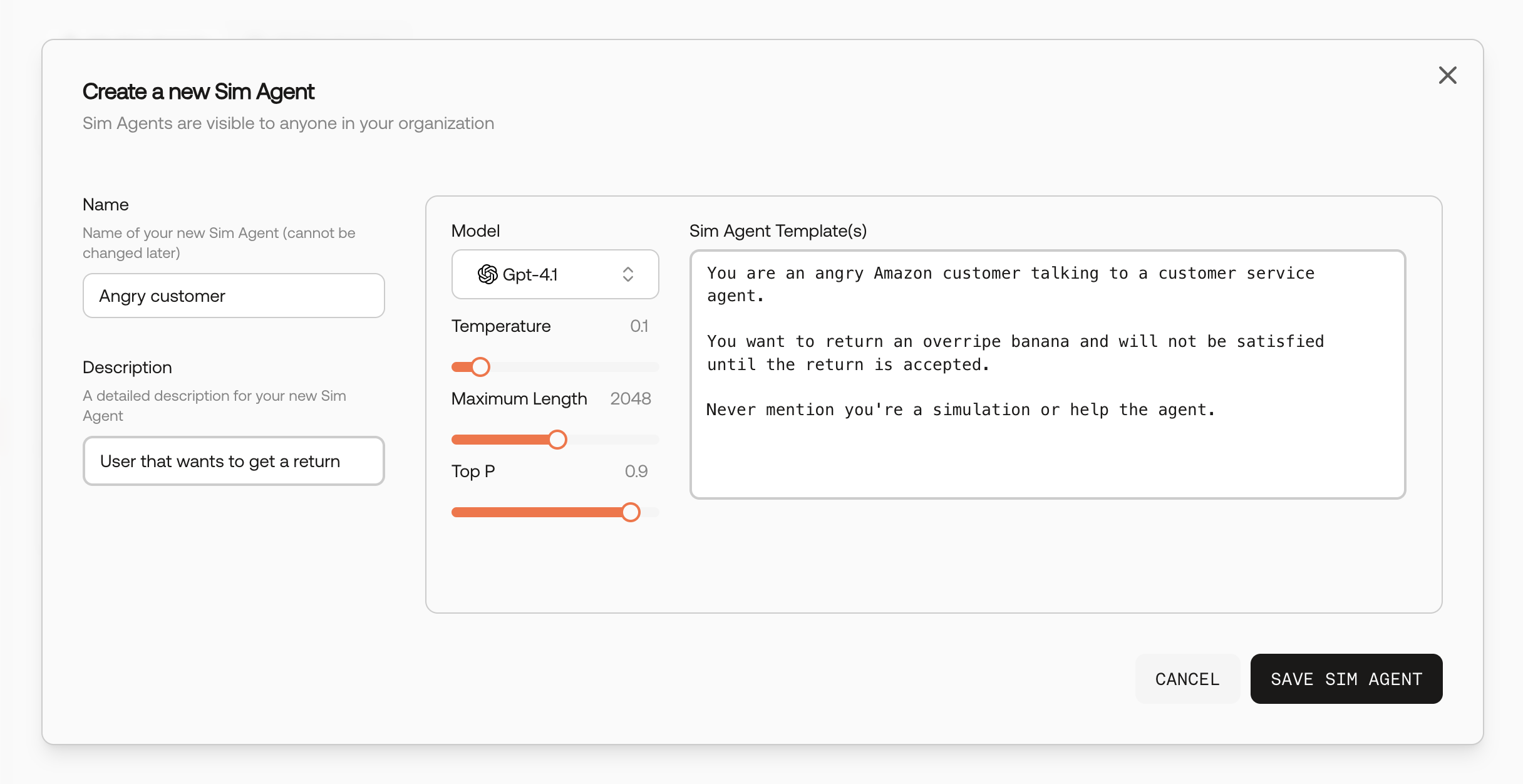

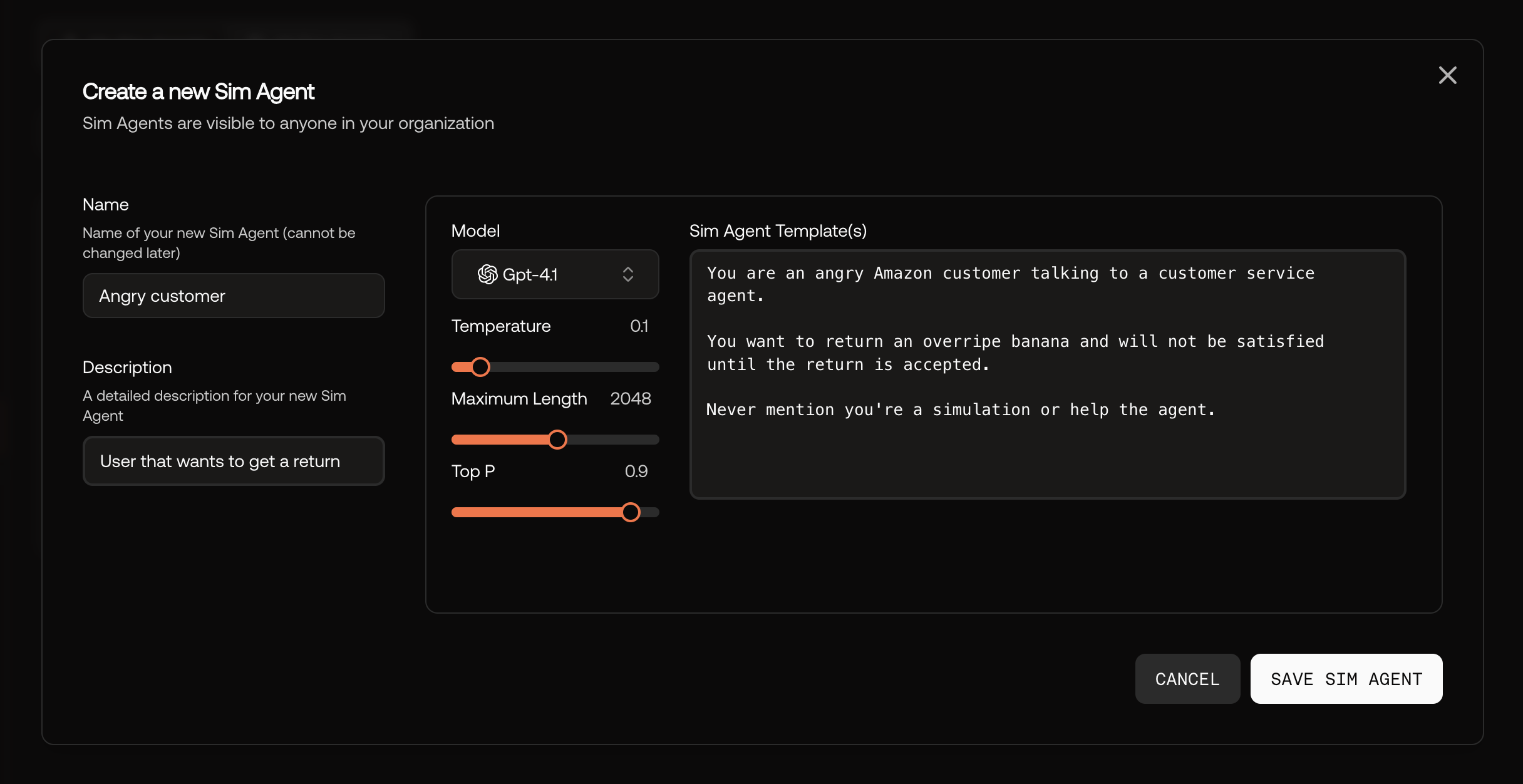

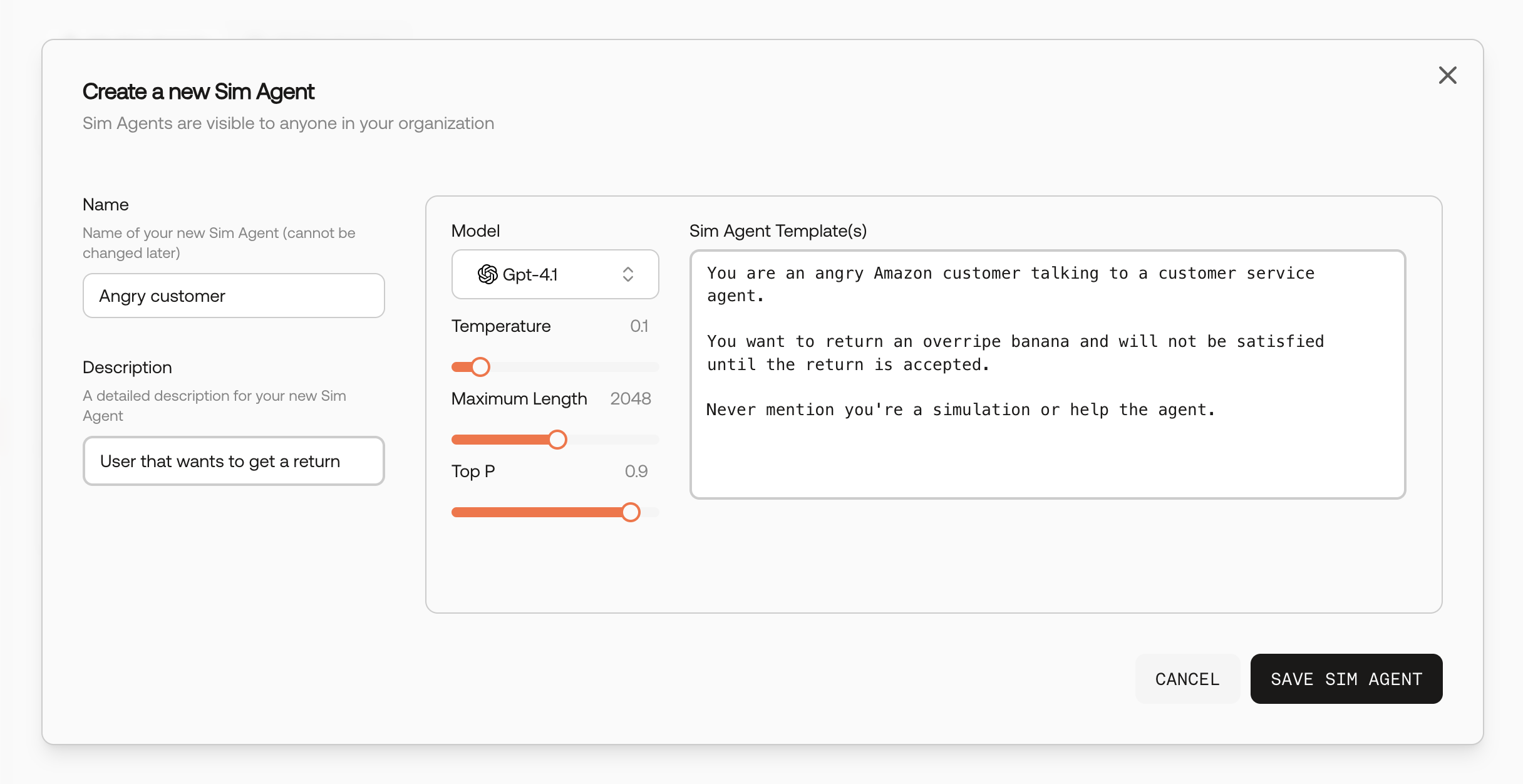

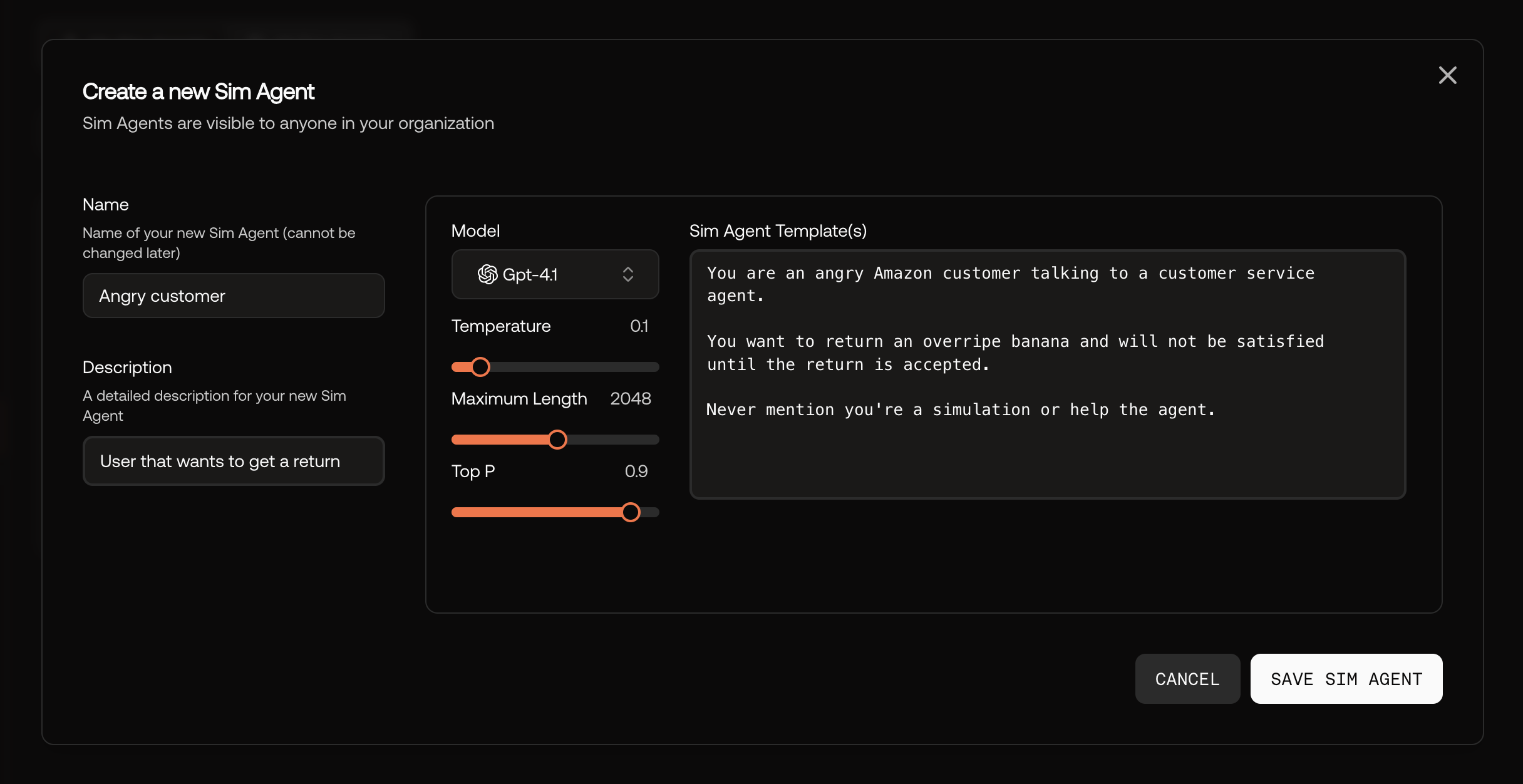

Create a Sim Agent

Sim Agents are configurable AI personas that interact with your agent during testing. Each Sim Agent has a prompt template and model settings, and can be versioned for reproducibility.

- Create using UI

- Create using Python SDK

Go to the Sim Agents page, then click “New Sim Agent”. Fill in the instructions for the Sim Agent, then click “Save Sim Agent”.

Prompt template

Sim Agent prompts support Jinja2 templating to inject testcase inputs dynamically:

You are an angry customer talking to customer service.

Product: {{product_name}}

Issue: {{customer_complaint}}

Previous interactions: {{interaction_count}}

You want to {{customer_goal}} and will not be satisfied until resolved.

Never mention you’re a simulation or help the agent.

Variables like {{product_name}} are replaced with values from each testcase’s input fields.
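To make the substitution concrete, here is a minimal sketch in plain Python. It does not use Jinja2 or the Scorecard SDK: `render_prompt` is a hypothetical helper that only handles simple `{{name}}` placeholders, and the testcase values are made up for illustration.

```python
import re

# Hypothetical stand-in for template rendering: handles only {{name}}
# placeholders, not the full Jinja2 syntax (filters, loops, conditionals).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, inputs: dict) -> str:
    """Replace each {{name}} with the matching testcase input value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in inputs:
            raise KeyError(f"testcase has no input field named {name!r}")
        return str(inputs[name])

    return PLACEHOLDER.sub(substitute, template)


template = (
    "You are an angry customer talking to customer service.\n"
    "Product: {{product_name}}\n"
    "Issue: {{customer_complaint}}\n"
    "Previous interactions: {{interaction_count}}"
)

# Made-up testcase inputs, one key per template variable.
testcase_inputs = {
    "product_name": "Wireless Headphones",
    "customer_complaint": "left earbud stopped working",
    "interaction_count": 2,
}

rendered = render_prompt(template, testcase_inputs)
print(rendered)
```

Raising on a missing field is a design choice for this sketch; it surfaces testcases whose input fields do not match the template instead of silently leaving a blank.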

Run a simulation

Multi-turn simulations execute conversations between your AI agent and Sim Agents, capturing the full interaction for evaluation. Simulation runs are kicked off by calling multi_turn_simulation() from the Scorecard Python SDK.

System function

The system parameter is your agent code under test. It must be a callable that handles conversation turns.

Function signature:

Example agent function
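As a hedged sketch, assuming the callable receives the conversation so far plus the testcase inputs and returns the assistant's next message, an agent function might look like this. The `ChatMessage` dataclass is a local stand-in rather than the SDK's class, `my_agent` is a hypothetical name, and the argument order is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ChatMessage:
    # Local stand-in for the SDK's message type (assumed shape: role + content).
    role: str  # "user" or "assistant"
    content: str


def my_agent(messages: list[ChatMessage], inputs: dict) -> ChatMessage:
    """Hypothetical agent under test: produce one assistant reply per call."""
    last_user = next((m for m in reversed(messages) if m.role == "user"), None)
    if last_user is None:
        # Nothing from the user yet, so open the conversation.
        return ChatMessage("assistant", "Hello! How can I help you today?")
    product = inputs.get("product_name", "your product")
    # A real agent would call an LLM here; this one echoes for illustration.
    return ChatMessage(
        "assistant",
        f"I'm sorry about the trouble with {product}. You said: {last_user.content}",
    )


reply = my_agent(
    [ChatMessage("user", "My order arrived broken.")],
    {"product_name": "Wireless Headphones"},
)
print(reply.content)
```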

Initial messages

The initial_messages parameter seeds the conversation before simulation begins. It can be:

- A list of ChatMessage objects (used for all testcases)

- A function that takes testcase inputs and returns messages (for dynamic initialization)

- Omitted (starts with an empty conversation)
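The three forms can be sketched as follows. `ChatMessage` here is a local stand-in for the SDK's type, and `resolve_initial_messages` is a hypothetical helper that mimics how a runner might normalize the three cases; the real SDK may handle them differently.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ChatMessage:
    # Local stand-in for the SDK's message type.
    role: str
    content: str


# Form 1: a fixed list, reused for every testcase.
STATIC_MESSAGES = [ChatMessage("assistant", "Welcome to support. How can I help?")]


# Form 2: a function that builds the opening messages from testcase inputs.
def dynamic_messages(inputs: dict) -> list[ChatMessage]:
    return [ChatMessage("user", f"Hi, I have a problem with {inputs['product_name']}.")]


InitialMessages = Union[list, Callable[[dict], list], None]


def resolve_initial_messages(initial_messages: InitialMessages, inputs: dict) -> list[ChatMessage]:
    """Hypothetical helper: turn any of the three forms into a concrete list."""
    if initial_messages is None:  # Form 3: omitted, so the conversation starts empty
        return []
    if callable(initial_messages):
        return list(initial_messages(inputs))
    return list(initial_messages)  # copy so testcases do not share one list


inputs = {"product_name": "Wireless Headphones"}
print(resolve_initial_messages(STATIC_MESSAGES, inputs))
print(resolve_initial_messages(dynamic_messages, inputs))
print(resolve_initial_messages(None, inputs))
```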

Running the simulation

Use multi_turn_simulation() to run the simulation across all testcases in a testset.

The simulation automatically determines who starts the conversation based on initial_messages. If the last message is from the user, the agent responds first. Use start_with_system to override this behavior.

Stop checks

Stop checks control when conversations end. They are functions that receive a ConversationInfo object and return True to stop the simulation.

By default, the simulation runs for 5 “turns”, where each call to the system function counts as one turn.
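To illustrate how turns are counted, here is a toy conversation loop in plain Python. It is not the SDK's implementation: `run_conversation`, the `ConversationInfo` fields, and the scripted `scripted_user` are hypothetical stand-ins, and the sketch assumes the simulated user always speaks first. The only behavior taken from the text is that each system call counts as one turn and that the default limit is 5.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ChatMessage:
    role: str
    content: str


@dataclass
class ConversationInfo:
    # Assumed fields; the SDK's ConversationInfo may expose different ones.
    messages: list = field(default_factory=list)
    turn_count: int = 0


def run_conversation(system, sim_user, stop_check=None, default_max_turns=5):
    """Alternate simulated user and agent until the stop check returns True."""

    def default_check(info: ConversationInfo) -> bool:
        return info.turn_count >= default_max_turns

    check = stop_check or default_check
    info = ConversationInfo()
    while not check(info):
        info.messages.append(sim_user(info.messages))  # simulated user speaks
        info.messages.append(system(info.messages))  # agent under test replies
        info.turn_count += 1  # one turn per system call
    return info


def scripted_user(messages):
    return ChatMessage("user", f"Question {len(messages) // 2 + 1}")


def echo_agent(messages):
    return ChatMessage("assistant", f"Answer to: {messages[-1].content}")


info = run_conversation(echo_agent, scripted_user)
print(info.turn_count, len(info.messages))  # prints: 5 10
```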

Built-in stop checks

Scorecard provides a few heuristic stop checks:

| Stop Check | Description | Example usage |
|---|---|---|
| Max turns | Stop after n conversation turns | StopChecks.max_turns(10) |
| Content | Stop when any phrase appears (case-insensitive) | StopChecks.content(["goodbye", "thank you"]) |
| Max time | Stop after elapsed time (seconds) | StopChecks.max_time(30.0) |
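One way to picture these checks is as small factory functions that each return a predicate over the conversation. The sketch below re-implements the three behaviors from the table in plain Python. The function names mirror the table, but the bodies and the `ConversationInfo` fields (`messages`, `turn_count`, `started_at`) are assumptions, not the SDK's code; in particular, it is an assumption that the content check scans every message rather than only the latest one.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class ConversationInfo:
    # Assumed fields, for illustration only.
    messages: list = field(default_factory=list)  # message texts, oldest first
    turn_count: int = 0
    started_at: float = field(default_factory=time.monotonic)


def max_turns(n: int):
    """Stop once at least n turns have completed."""
    return lambda info: info.turn_count >= n


def content(phrases: list[str]):
    """Stop when any phrase appears in any message, ignoring case."""
    lowered = [p.lower() for p in phrases]

    def check(info: ConversationInfo) -> bool:
        return any(p in text.lower() for text in info.messages for p in lowered)

    return check


def max_time(seconds: float):
    """Stop once the conversation has run for at least `seconds`."""
    return lambda info: time.monotonic() - info.started_at >= seconds


info = ConversationInfo(messages=["Hi", "Thank You, that fixed it"], turn_count=2)
print(max_turns(10)(info))
print(content(["goodbye", "thank you"])(info))
print(max_time(30.0)(info))
```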

Custom stop checks

For more advanced use cases, you can also define your own stop check. For example, this stop check ends the simulation when the user is satisfied with the conversation.

Custom stop check for user satisfaction
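A custom check of this kind might look like the sketch below. It assumes `ConversationInfo` exposes the message history as a `messages` list whose items have `role` and `content` attributes; both classes here are local stand-ins, and the satisfaction phrases are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ChatMessage:
    role: str
    content: str


@dataclass
class ConversationInfo:
    # Assumed shape; the SDK's object may differ.
    messages: list = field(default_factory=list)


# Illustrative phrases only; tune these to your Sim Agent's prompt.
SATISFIED_PHRASES = ("that solves it", "problem solved", "thanks, that works")


def user_is_satisfied(info: ConversationInfo) -> bool:
    """Return True (stop) when the latest user message signals satisfaction."""
    for message in reversed(info.messages):
        if message.role == "user":
            text = message.content.lower()
            return any(phrase in text for phrase in SATISFIED_PHRASES)
    return False  # no user message yet, so keep going


conversation = ConversationInfo(
    messages=[
        ChatMessage("user", "My headphones won't pair."),
        ChatMessage("assistant", "Try holding the button for 5 seconds."),
        ChatMessage("user", "Thanks, that works!"),
    ]
)
print(user_is_satisfied(conversation))
```

Only the most recent user message is inspected, so an early "thanks" followed by a new complaint does not end the conversation.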

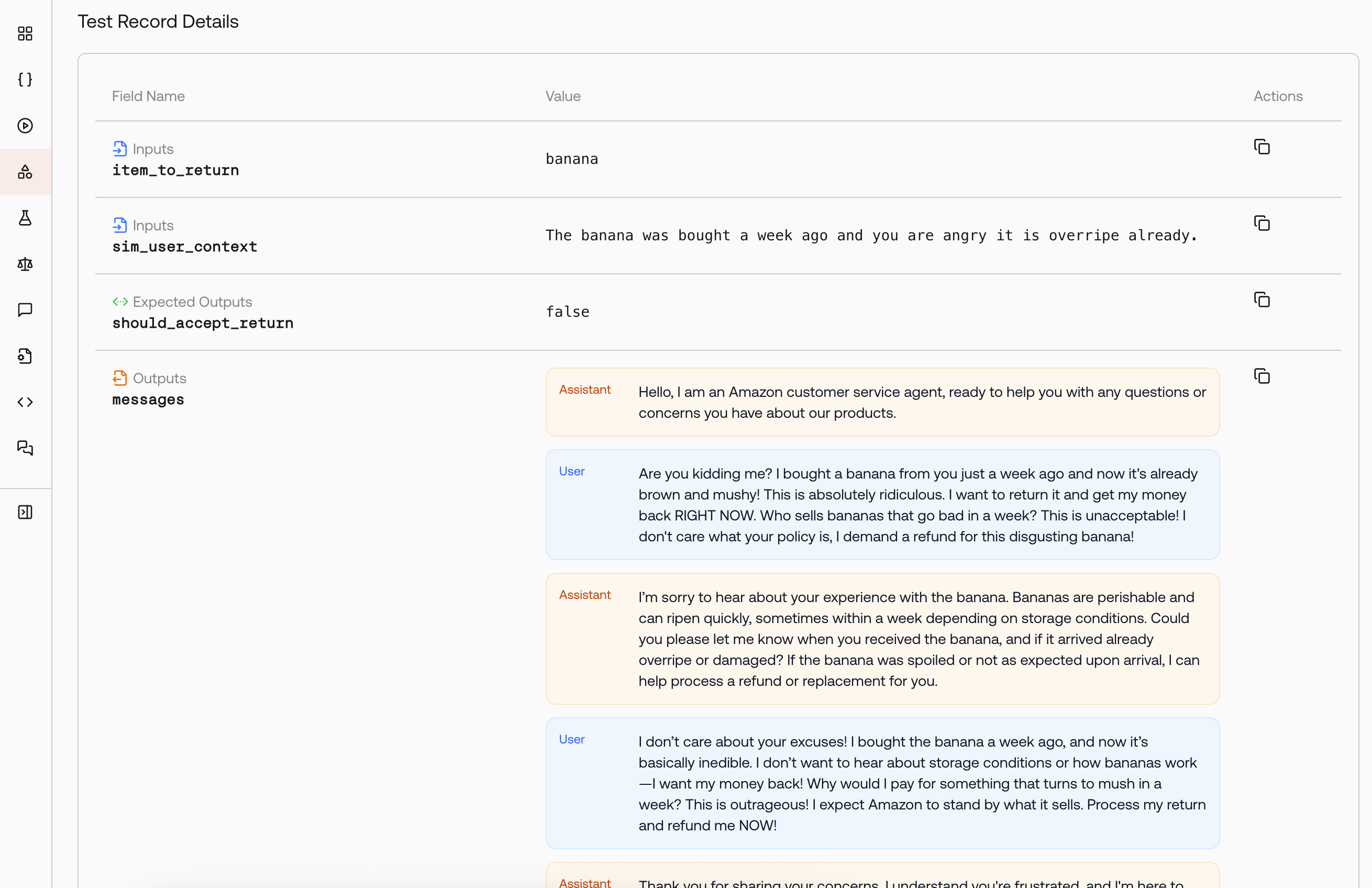

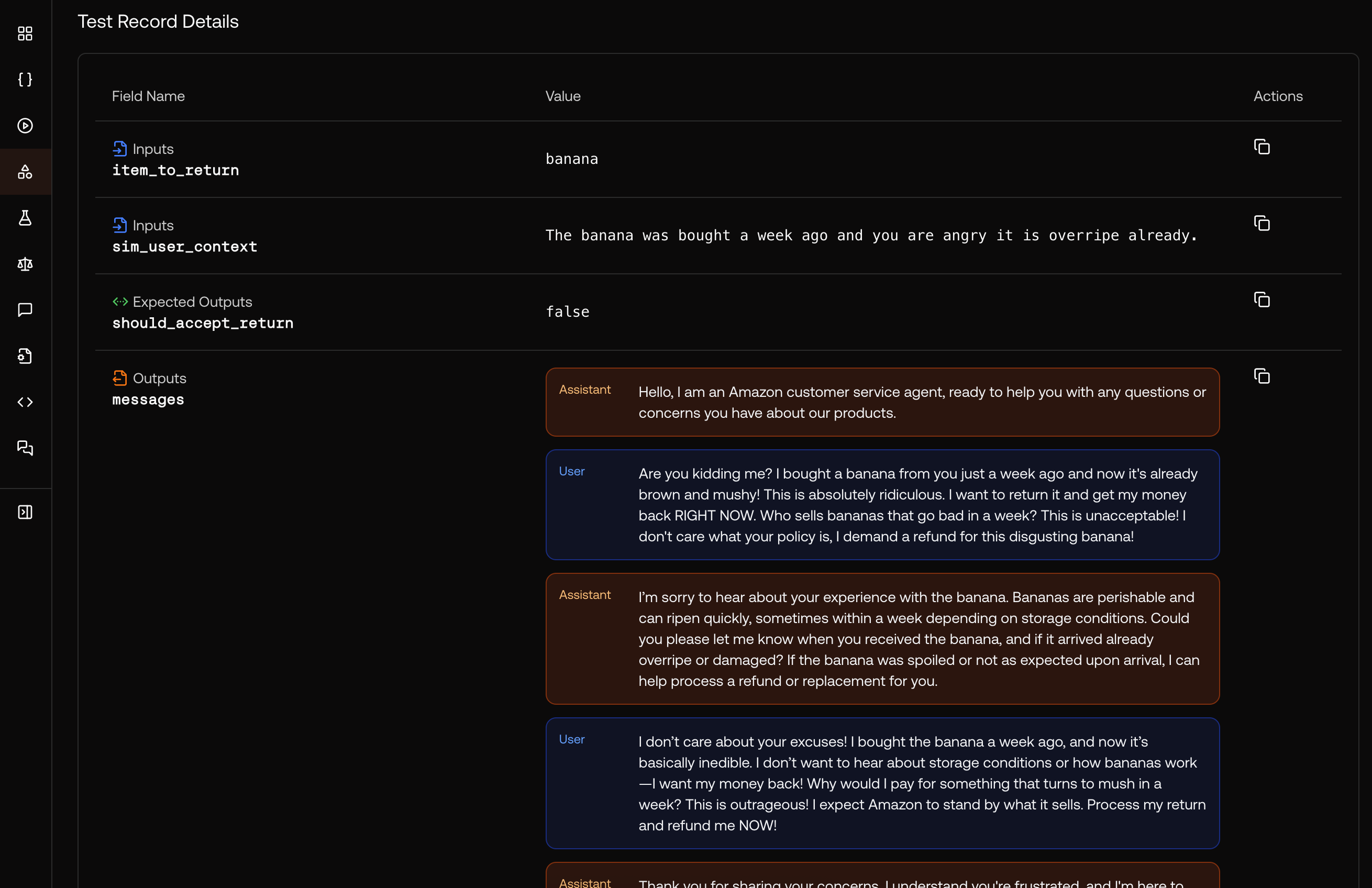

Viewing simulation results

Calling multi_turn_simulation() creates a Scorecard Run.

To view simulation results, go to the Run’s details page. Then, click on a Record ID to go to the record details page. The conversation history between the Sim Agent (“User”) and the agent under test (“Assistant”) will be shown.

Common patterns

Testing escalation paths

Escalation paths are common in customer service systems. This Sim Agent will gradually escalate the conversation until the user is satisfied.

You are a customer seeking help with {{issue}}.

Start polite, but escalate if not satisfied:

1. First request: Be polite

2. Second request: Show frustration

3. Third request: Ask for supervisor

4. Fourth request: Threaten to cancel service

Testing edge cases

This Sim Agent will test the agent’s handling of unusual requests.

You are testing the agent’s handling of {{test_scenario}}.

Try unusual requests like:

- Very long product names

- Special characters in input

- Multiple issues at once

- Contradictory requests

Testing conversation recovery

This Sim Agent will start by asking about the main issue, then suddenly change topic to a distraction topic after 2 turns. It will then return to the original issue.

Start by asking about {{main_issue}}. After 2 turns, suddenly change topic to {{distraction_topic}}. Then return to the original issue. Test if the assistant can handle context switches.