baseURL to llm.scorecard.io: no SDKs, no dependency management, and it works with your existing code. Supports OpenAI, Anthropic, and streaming responses.
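Only the destination of each request changes. The TypeScript sketch below models how an HTTP client typically joins its base URL with an endpoint path. It assumes an OpenAI-style `/chat/completions` path and Bearer auth. `joinUrl`, `buildRequest`, and `LlmRequest` are hypothetical helpers, not part of any SDK, and the model name is a placeholder. The sketch does not show how your Scorecard API key is passed.

```typescript
// Hypothetical model of the request an OpenAI-style client sends.
interface LlmRequest {
  url: string;
  headers: Record<string, string>;
  body: string;
}

// Join a base URL and an endpoint path without doubling or dropping the "/".
function joinUrl(baseURL: string, path: string): string {
  return baseURL.replace(/\/+$/, "") + "/" + path.replace(/^\/+/, "");
}

// Build a chat request; only `baseURL` differs between the two calls below.
function buildRequest(baseURL: string, apiKey: string): LlmRequest {
  return {
    url: joinUrl(baseURL, "/chat/completions"),
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({
      model: "example-model", // placeholder model name
      messages: [{ role: "user", content: "Hello" }],
    }),
  };
}

const direct = buildRequest("https://api.openai.com/v1", "sk-example");
const viaScorecard = buildRequest("https://llm.scorecard.io", "sk-example");

console.log(direct.url); // https://api.openai.com/v1/chat/completions
console.log(viaScorecard.url); // https://llm.scorecard.io/chat/completions
console.log(direct.body === viaScorecard.body); // true
```

In the official OpenAI and Anthropic TypeScript SDKs, the equivalent change is the `baseURL` option passed to the client constructor. The Python SDKs call this option `base_url`.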

Using Vercel AI SDK? Check out our AI SDK Wrapper for automatic tracing with zero manual instrumentation.

Need more control? See the SDK wrappers section below for custom spans and deeper integration, or use OpenTelemetry directly.

Steps

1

Get your Scorecard API key

Create a Scorecard account and grab your API key from Settings.

2

Point your client to Scorecard

Change your client's baseURL to https://llm.scorecard.io. Everything else in your code stays the same, and your LLM calls are traced automatically.

3

View traces in Scorecard

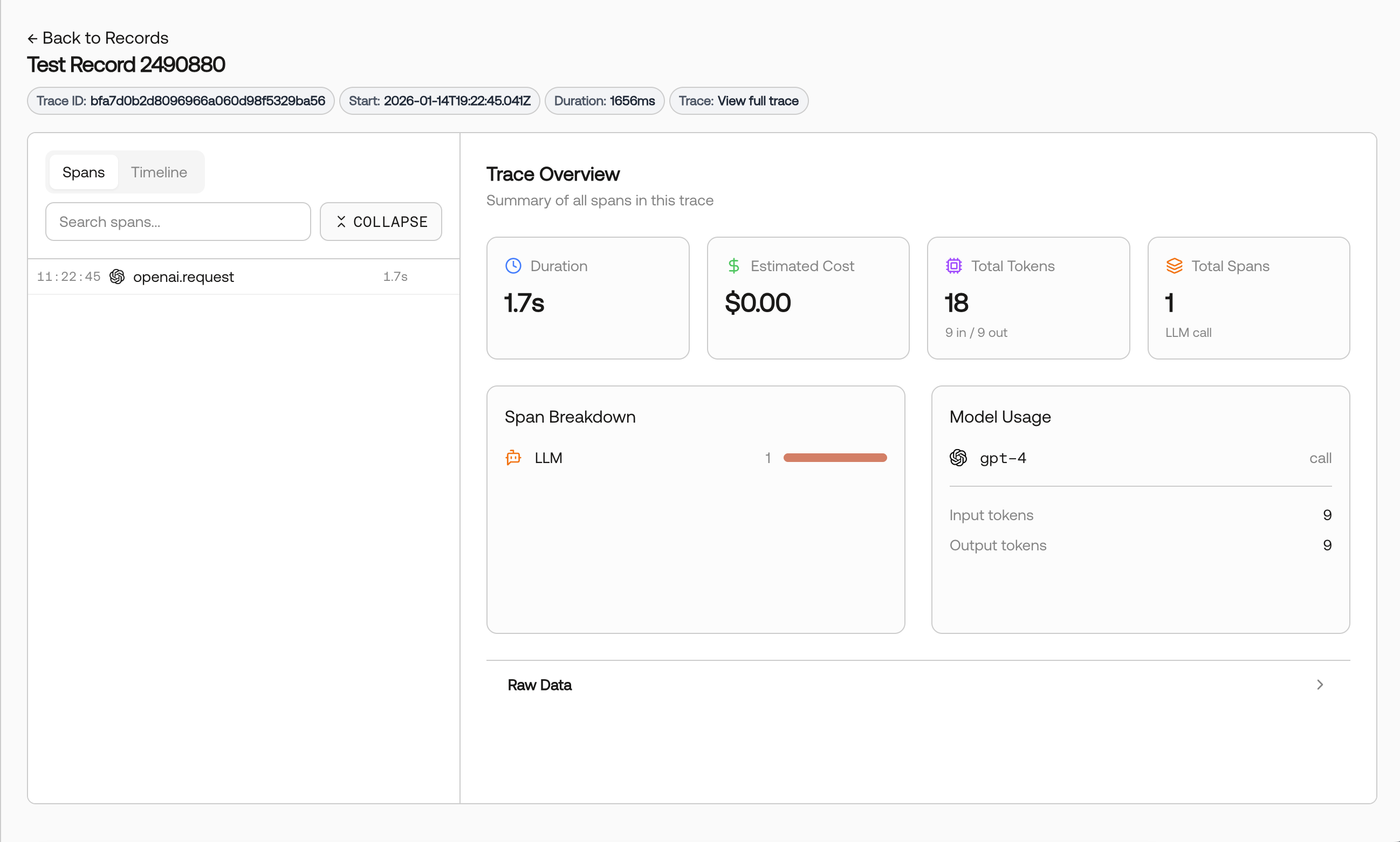

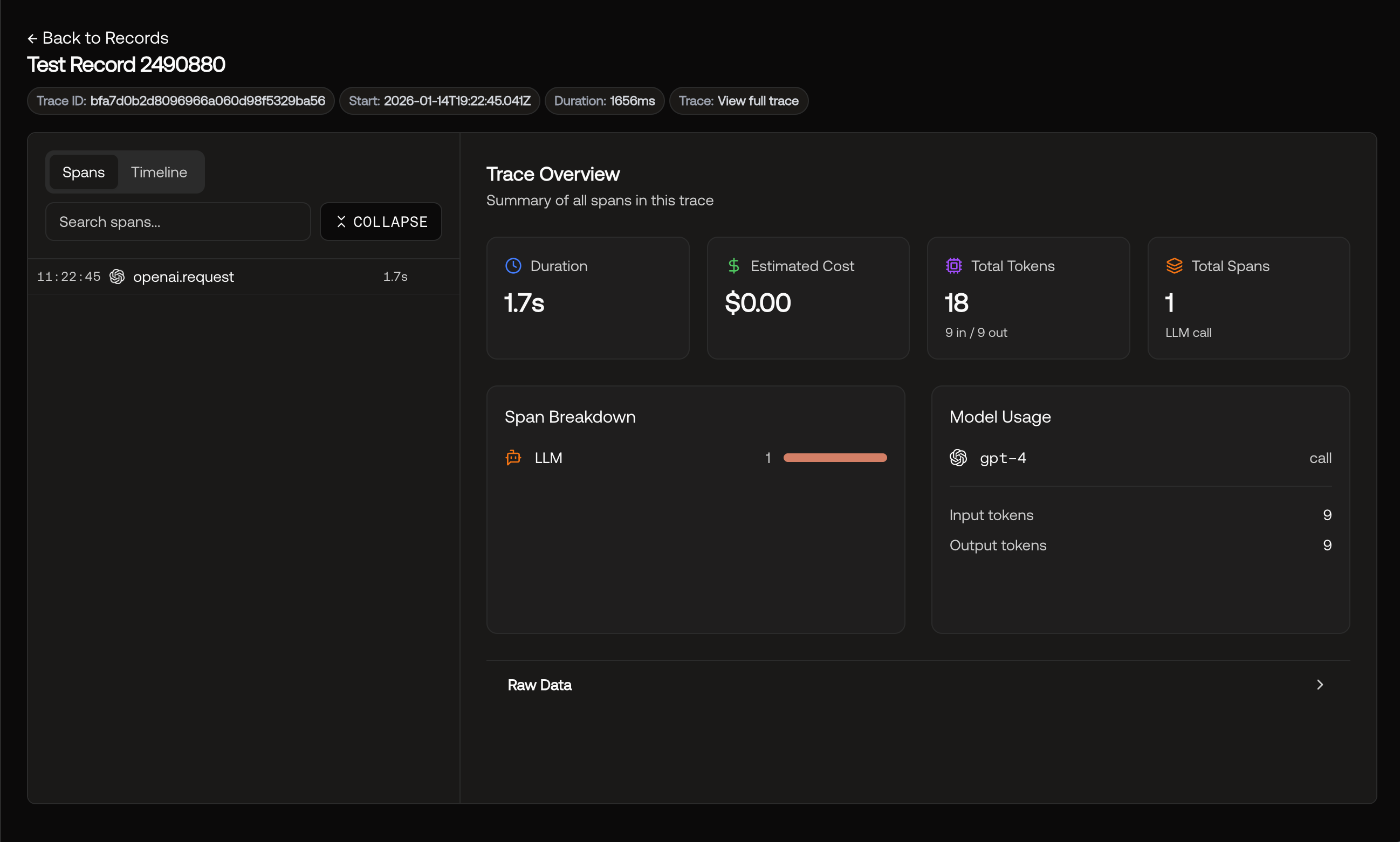

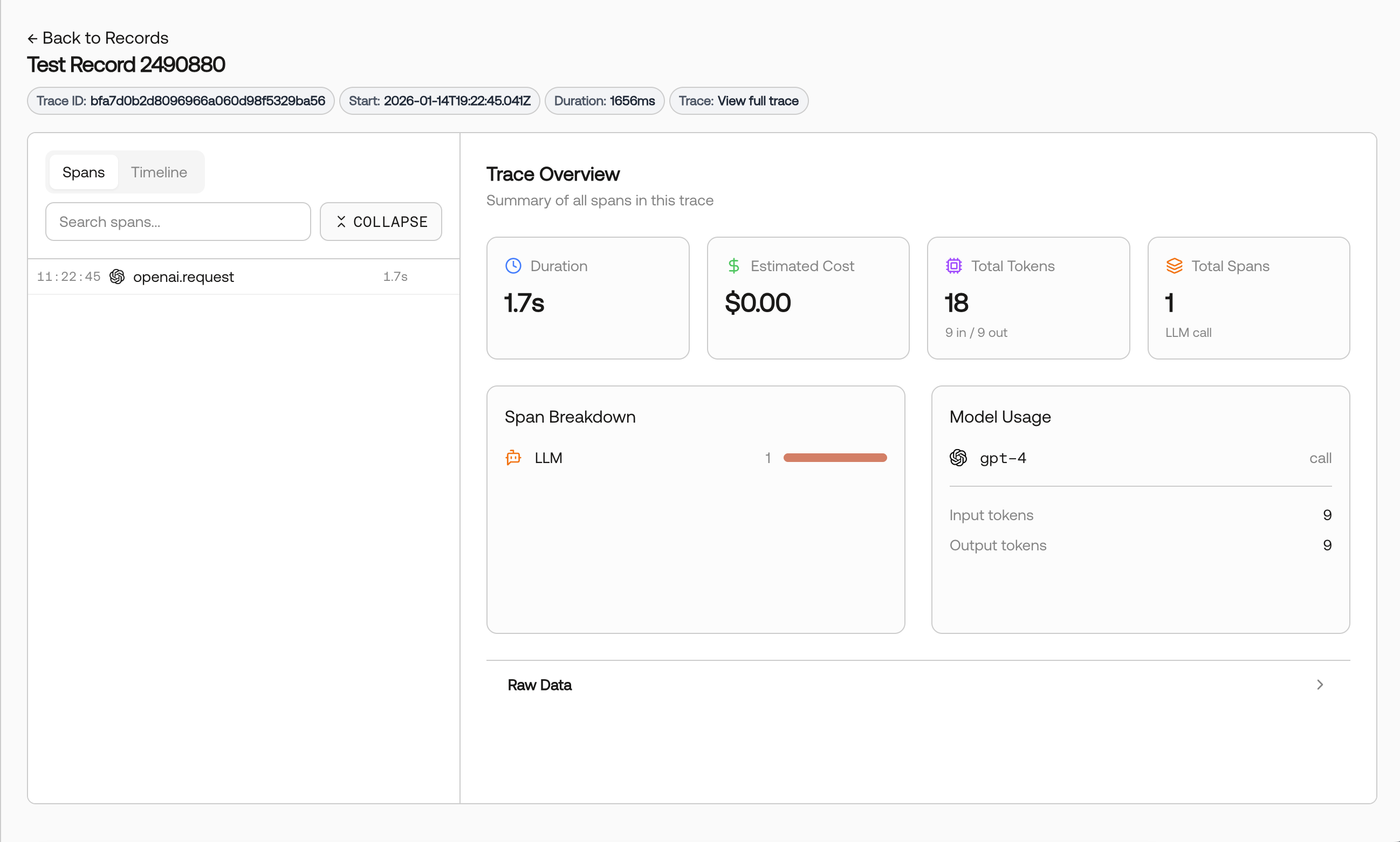

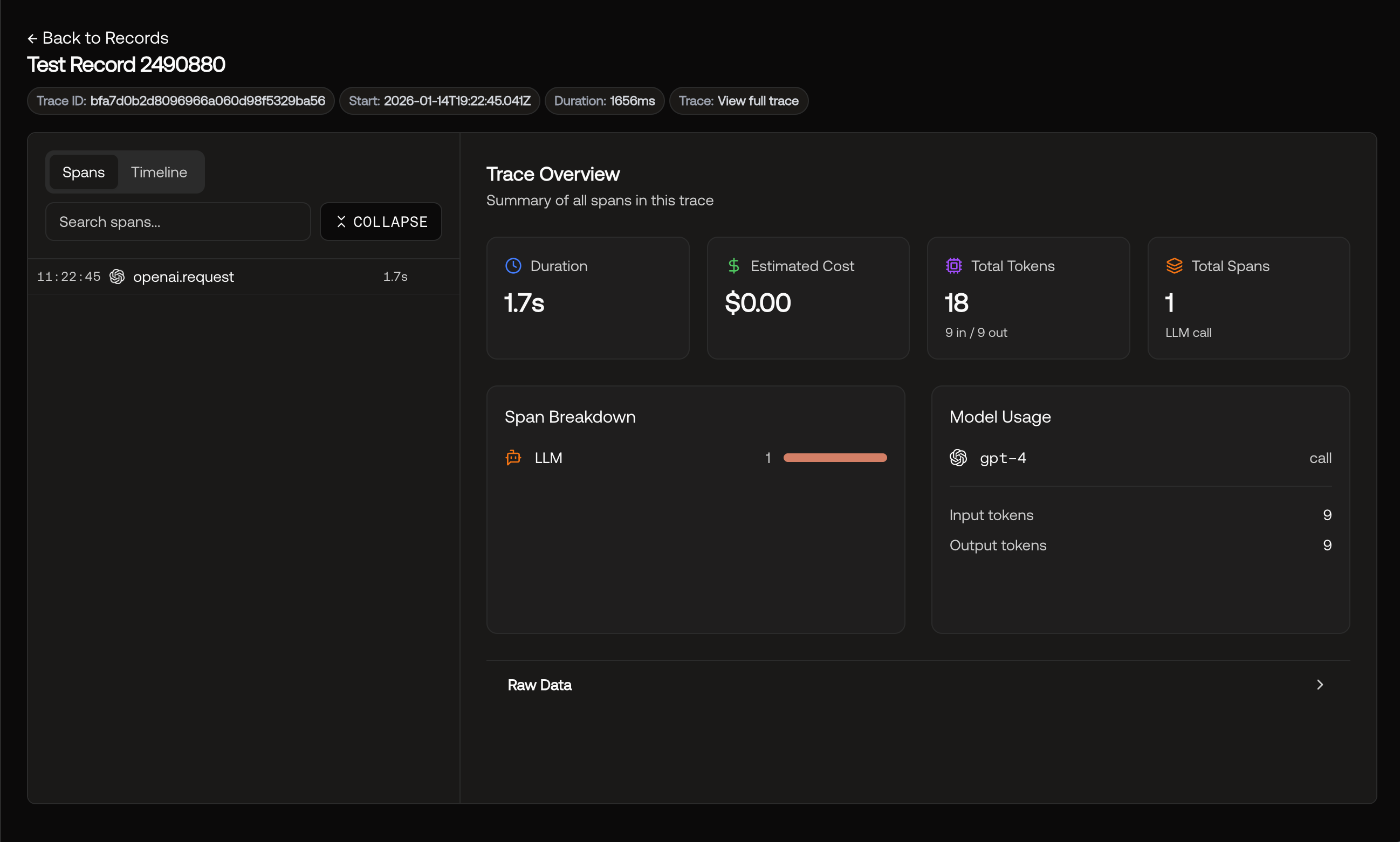

Run your application, then visit app.scorecard.io and navigate to Records. You’ll see the full request and response data, token usage, latency, and errors for each of your LLM calls. Streaming responses are captured too.

Viewing traces in Records.
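You can picture the per-call data listed above (request and response, latency, token usage, errors) as a wrapper that times each forwarded call and records what came back. This is a conceptual TypeScript sketch, not Scorecard's implementation. `TraceRecord`, `ProviderResponse`, `traced`, and the fake provider are hypothetical. The call is synchronous for brevity, whereas real provider calls are asynchronous.

```typescript
// Hypothetical shape of one captured record.
interface TraceRecord {
  name: string;
  request: unknown;
  response?: unknown;
  error?: string;
  latencyMs: number;
  inputTokens?: number;
  outputTokens?: number;
}

// Hypothetical provider response carrying token counts.
interface ProviderResponse {
  text: string;
  usage: { inputTokens: number; outputTokens: number };
}

const records: TraceRecord[] = [];

// Forward `request` to `upstream`, record telemetry, return the result unchanged.
function traced(
  name: string,
  request: unknown,
  upstream: (req: unknown) => ProviderResponse,
): ProviderResponse {
  const start = Date.now();
  try {
    const response = upstream(request);
    records.push({
      name,
      request,
      response,
      latencyMs: Date.now() - start,
      inputTokens: response.usage.inputTokens,
      outputTokens: response.usage.outputTokens,
    });
    return response;
  } catch (err) {
    // Record the failure, then rethrow so the caller still sees the error.
    records.push({
      name,
      request,
      error: err instanceof Error ? err.message : String(err),
      latencyMs: Date.now() - start,
    });
    throw err;
  }
}

// Fake upstream standing in for the LLM provider.
const fakeProvider = (_req: unknown): ProviderResponse => ({
  text: "Hi there",
  usage: { inputTokens: 5, outputTokens: 3 },
});

const reply = traced("chat", { prompt: "Hello" }, fakeProvider);
console.log(reply.text); // Hi there

try {
  traced("chat", { prompt: "Hello" }, () => {
    throw new Error("rate limited");
  });
} catch {
  // The error still reaches the caller; it is also stored in `records`.
}

console.log(records.length); // 2
console.log(records[1]?.error); // rate limited
```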

How It Works

Scorecard forwards your requests to the LLM provider and returns the provider's response to your application, capturing telemetry along the way.

SDK Wrappers for Deeper Integration

For more control than llm.scorecard.io offers, the Scorecard SDK wrappers provide automatic tracing with native OpenTelemetry integration. Wrap your OpenAI or Anthropic client once, and all of its calls are traced automatically, including streaming responses.
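Wrapping a client once is enough because every method call can be intercepted at a single point. The TypeScript sketch below shows that general pattern with a JavaScript `Proxy`. It illustrates the technique only and is not the Scorecard SDK's API. `wrapClient`, `FakeClient`, `CallLog`, and the `calls` log are hypothetical.

```typescript
// Hypothetical stand-in for an LLM client with two methods.
class FakeClient {
  complete(prompt: string): string {
    return `echo: ${prompt}`;
  }
  embed(text: string): number[] {
    return [text.length];
  }
}

interface CallLog {
  method: string;
  args: unknown[];
  latencyMs: number;
}

const calls: CallLog[] = [];

// Return a proxy that records every method call on `client`, then delegates.
function wrapClient<T extends object>(client: T): T {
  return new Proxy(client, {
    get(target, prop, receiver) {
      const value = Reflect.get(target, prop, receiver);
      if (typeof value !== "function") return value; // plain properties pass through
      return (...args: unknown[]) => {
        const start = Date.now();
        try {
          return value.apply(target, args);
        } finally {
          // Runs whether the call returned or threw.
          calls.push({ method: String(prop), args, latencyMs: Date.now() - start });
        }
      };
    },
  });
}

// Wrap once; every later call goes through the proxy.
const client = wrapClient(new FakeClient());
console.log(client.complete("Hello")); // echo: Hello
console.log(client.embed("abc")[0]); // 3
console.log(calls.map((c) => c.method).join(", ")); // complete, embed
```

This sketch closes each record as soon as the method returns. A wrapper for real SDK clients would also need to wait for returned promises to settle and for streams to be fully consumed before closing the record.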

The real power comes from custom spans and nested traces. You can create parent spans for your workflows and business logic, and LLM calls made inside them automatically nest as child spans. This lets you follow a complex multi-step process step by step, together with the LLM calls each step made.
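Nesting follows from tracking which span is currently open: a span started while another one is active records that span as its parent. The TypeScript sketch below models this with a simple stack. It is not the Scorecard or OpenTelemetry API, and `Span`, `withSpan`, and `callLlm` are hypothetical.

```typescript
interface Span {
  id: number;
  name: string;
  parentId: number | null;
}

const finished: Span[] = [];
const active: Span[] = []; // stack of currently open spans
let nextId = 1;

// Run `fn` inside a new span whose parent is the innermost open span.
function withSpan<T>(name: string, fn: () => T): T {
  const parent = active[active.length - 1];
  const span: Span = { id: nextId++, name, parentId: parent ? parent.id : null };
  active.push(span);
  try {
    return fn();
  } finally {
    active.pop();
    finished.push(span);
  }
}

// Hypothetical LLM call; a wrapped client would open this span for you.
function callLlm(prompt: string): string {
  return withSpan("llm.chat", () => `answer to ${prompt}`);
}

// A parent span for a multi-step workflow; both LLM calls nest under it.
const summary = withSpan("summarize-ticket", () => {
  const facts = callLlm("extract facts");
  return callLlm(`summarize ${facts}`);
});

for (const span of finished) {
  console.log(span.id, span.name, "parent:", span.parentId);
}
// 2 llm.chat parent: 1
// 3 llm.chat parent: 1
// 1 summarize-ticket parent: null
```

A stack is only correct for synchronous code. Concurrent async work interleaves, which is why OpenTelemetry propagates the active span through its context mechanism rather than a global stack.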

Basic Usage

Examples

- Node.js Examples - OpenAI, Anthropic, Vercel AI SDK, nested traces, streaming

- Python Examples - OpenAI, Anthropic, nested traces, streaming

- More Examples - Additional integration examples

Where to go next

- Tracing Features - Advanced tracing patterns