LLM observability means knowing exactly what happened in every generation—latency, token usage, prompts, completions, cost, errors and more. Scorecard’s Tracing collects this data automatically—via SDK wrappers, environment variables, OpenLLMetry, an LLM proxy, or direct OpenTelemetry—and displays rich visualisations in the Scorecard UI.

Why Tracing matters

- Debug long or failing requests in seconds.

- Audit prompts & completions for compliance and safety.

- Attribute quality and cost back to specific services or users.

- Feed production traffic into evaluations.

If you call it something else

- Observability / AI spans / request logs: We capture standard OpenTelemetry traces and spans for LLM calls and related operations.

- Agent runs / tools / function calls: These appear as nested spans in the trace tree, with inputs/outputs when available.

- Prompt/Completion pairs: Extracted from common keys (`openinference.*`, `ai.prompt` / `ai.response`, `gen_ai.*`) so they can be turned into testcases and scored.
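To make the key families concrete, here is a minimal, hypothetical sketch of how a prompt/completion pair could be pulled out of a span's attribute dictionary. The specific keys (`gen_ai.prompt.0.content` / `gen_ai.completion.0.content` in the OpenLLMetry style, `ai.prompt` / `ai.response.text` in the Vercel AI SDK style) are assumptions for illustration, and `extract_pair` is not Scorecard's actual extraction logic.

```python
from typing import Mapping, Optional, Tuple

# Candidate (prompt_key, completion_key) pairs, checked in order.
# These key names are illustrative assumptions, not Scorecard's actual list.
CANDIDATE_KEYS = [
    ("gen_ai.prompt.0.content", "gen_ai.completion.0.content"),  # OpenLLMetry-style
    ("ai.prompt", "ai.response.text"),                            # Vercel AI SDK-style
]


def extract_pair(attributes: Mapping[str, object]) -> Optional[Tuple[str, str]]:
    """Return the first (prompt, completion) pair found in a span's attributes."""
    for prompt_key, completion_key in CANDIDATE_KEYS:
        prompt = attributes.get(prompt_key)
        completion = attributes.get(completion_key)
        if isinstance(prompt, str) and isinstance(completion, str):
            return prompt, completion
    return None  # no recognised key family on this span


span_attributes = {
    "gen_ai.system": "openai",
    "gen_ai.prompt.0.content": "What is the capital of France?",
    "gen_ai.completion.0.content": "Paris.",
}
print(extract_pair(span_attributes))
```

Checking key families in a fixed order keeps the result deterministic when a span carries attributes from more than one convention.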

Instrumentation Methods

Choose the method that fits your stack. All methods send traces to Scorecard’s OpenTelemetry endpoint.

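- Agent Frameworks: environment variables only, for frameworks with built-in OpenTelemetry support
- SDK Wrappers: a one-line wrapper around your OpenAI, Anthropic, or Vercel AI SDK client
- Traceloop: OpenLLMetry auto-instrumentation for popular frameworks and providers
- LLM Proxy: route requests through Scorecard by changing the base URL
- OpenTelemetry: send spans directly from any OpenTelemetry SDK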

Agent Frameworks

For frameworks with built-in OpenTelemetry support (Claude Agent SDK, OpenAI Agents SDK), no code changes are needed. Just set these environment variables:

```shell
export OTEL_EXPORTER_OTLP_HEADERS="Authorization=Bearer <your-scorecard-api-key>"
export BETA_TRACING_ENDPOINT="https://tracing.scorecard.io/otel"
export ENABLE_BETA_TRACING_DETAILED=1
export OTEL_RESOURCE_ATTRIBUTES="scorecard.project_id=<your-project-id>"
```

Then run your app as usual:

```python
# No code changes required: set the env vars above and run your app.
import anyio

from claude_agent_sdk import (
    AssistantMessage,
    TextBlock,
    query,
)


async def main():
    async for message in query(prompt="What is 2 + 2?"):
        if isinstance(message, AssistantMessage):
            for block in message.content:
                if isinstance(block, TextBlock):
                    print(f"Claude: {block.text}")


anyio.run(main)
```
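Because this method depends entirely on environment variables, a quick pre-flight check can save you from staring at an empty dashboard. The sketch below is a hypothetical helper (`missing_tracing_config` is not part of any SDK) that reports which of the four variables above are unset and whether `OTEL_RESOURCE_ATTRIBUTES` contains a `scorecard.project_id` entry. It assumes the standard OpenTelemetry format for that variable: comma-separated `key=value` pairs.

```python
import os
from typing import List, Mapping

# The four variables from the Agent Frameworks setup above.
REQUIRED_VARS = (
    "OTEL_EXPORTER_OTLP_HEADERS",
    "BETA_TRACING_ENDPOINT",
    "ENABLE_BETA_TRACING_DETAILED",
    "OTEL_RESOURCE_ATTRIBUTES",
)


def missing_tracing_config(env: Mapping[str, str]) -> List[str]:
    """Return human-readable problems; an empty list means the config looks complete."""
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]

    # OTEL_RESOURCE_ATTRIBUTES is a comma-separated list of key=value pairs.
    resource_attrs = env.get("OTEL_RESOURCE_ATTRIBUTES", "")
    keys = {pair.split("=", 1)[0].strip() for pair in resource_attrs.split(",") if "=" in pair}
    if resource_attrs and "scorecard.project_id" not in keys:
        problems.append("OTEL_RESOURCE_ATTRIBUTES has no scorecard.project_id entry")

    # The headers value should carry a bearer token (plain or URL-encoded space).
    headers = env.get("OTEL_EXPORTER_OTLP_HEADERS", "")
    if headers and "Authorization=Bearer" not in headers:
        problems.append("OTEL_EXPORTER_OTLP_HEADERS has no Authorization=Bearer entry")

    return problems


if __name__ == "__main__":
    for problem in missing_tracing_config(os.environ):
        print("Tracing config:", problem)
```

Taking a `Mapping` instead of reading `os.environ` directly keeps the check easy to test with a plain dictionary.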

SDK Wrappers

One-line wrapper for OpenAI, Anthropic, or Vercel AI SDK clients. Install the scorecard-ai package and wrap your client; no other changes are needed.

OpenAI

```python
import os

from openai import OpenAI
from scorecard_ai import wrap

openai = wrap(
    OpenAI(api_key=os.getenv("OPENAI_API_KEY")),
)

response = openai.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is the capital of France?"},
    ],
)

print(response.choices[0].message.content)
```

Anthropic

```python
import os

from anthropic import Anthropic
from scorecard_ai import wrap

claude = wrap(
    Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY")),
)

response = claude.messages.create(
    model="claude-sonnet-4-20250514",
    max_tokens=1024,
    messages=[{"role": "user", "content": "What is the capital of France?"}],
)

# Take the first text block from the response, if any.
text_content = next(
    (block for block in response.content if block.type == "text"), None
)

print(text_content.text if text_content else "No response")
```

Vercel AI SDK

```typescript
import { openai } from '@ai-sdk/openai';
import * as ai from 'ai';
import { wrapAISDK } from 'scorecard-ai';

const aiSDK = wrapAISDK(ai);

const { text } = await aiSDK.generateText({
  model: openai('gpt-4o'),
  prompt: 'What is the capital of France?',
});

console.log(text);
```

Traceloop

Universal OpenTelemetry-based tracing via OpenLLMetry. Supports LangChain, LlamaIndex, CrewAI, and 20+ providers.

```shell
pip install traceloop-sdk
export TRACELOOP_BASE_URL="https://tracing.scorecard.io/otel"
export TRACELOOP_HEADERS="Authorization=Bearer%20<your-scorecard-api-key>"
```

```python
from openai import OpenAI
from traceloop.sdk import Traceloop
from traceloop.sdk.decorators import workflow
from traceloop.sdk.instruments import Instruments

Traceloop.init(disable_batch=True, instruments={Instruments.OPENAI})

client = OpenAI()


@workflow(name="simple_chat")
def chat():
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": "Hello!"}],
    )
    return response.choices[0].message.content


result = chat()
print(result)
```
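The `%20` in `TRACELOOP_HEADERS` is there because the value uses the OpenTelemetry `key=value` header format, in which values are URL-encoded, so the space in `Bearer <key>` must be escaped. If you prefer to set the variables from Python rather than the shell, a small helper can do the encoding. This is a sketch: `build_traceloop_headers` is a hypothetical name, and it assumes the SDK reads these variables when `Traceloop.init()` is called, so they must be set before that call.

```python
import os
from urllib.parse import quote


def build_traceloop_headers(api_key: str) -> str:
    """Encode an Authorization header in the URL-encoded key=value format."""
    # quote() turns the space after "Bearer" into %20; safe="" also escapes "/".
    return "Authorization=" + quote(f"Bearer {api_key}", safe="")


# Hypothetical usage: set both variables before Traceloop.init() runs.
api_key = os.environ.get("SCORECARD_API_KEY", "<your-scorecard-api-key>")
os.environ["TRACELOOP_BASE_URL"] = "https://tracing.scorecard.io/otel"
os.environ["TRACELOOP_HEADERS"] = build_traceloop_headers(api_key)
print(os.environ["TRACELOOP_HEADERS"])
```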

For LangChain, install `opentelemetry-instrumentation-langchain` and use `Instruments.LANGCHAIN`:

```python
from traceloop.sdk import Traceloop
from traceloop.sdk.instruments import Instruments

# IMPORTANT: Initialize Traceloop before importing any LangChain modules.
Traceloop.init(
    disable_batch=True,
    instruments={Instruments.LANGCHAIN},
)

from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful assistant."),
    ("user", "{input}"),
])

model = ChatOpenAI(model="gpt-4o-mini")
chain = prompt | model

response = chain.invoke({"input": "What is the capital of France?"})
print(response.content)
```

For LlamaIndex, use `Instruments.LLAMA_INDEX`:

```python
from llama_index.core.llms import ChatMessage
from llama_index.llms.openai import OpenAI
from traceloop.sdk import Traceloop
from traceloop.sdk.instruments import Instruments

Traceloop.init(
    disable_batch=True,
    instruments={Instruments.LLAMA_INDEX, Instruments.OPENAI},
)

llm = OpenAI(model="gpt-4o")
response = llm.chat([ChatMessage(role="user", content="Hello!")])
print(response.message.content)
```

For CrewAI, use `Instruments.CREWAI`:

```python
from crewai import Agent, Crew, Task
from traceloop.sdk import Traceloop
from traceloop.sdk.instruments import Instruments

Traceloop.init(
    disable_batch=True,
    instruments={Instruments.CREWAI, Instruments.OPENAI},
)

researcher = Agent(
    role="Researcher",
    goal="Research and summarize topics",
    backstory="You are an expert researcher.",
)

task = Task(
    description="What is the capital of France?",
    expected_output="A brief answer",
    agent=researcher,
)

crew = Crew(agents=[researcher], tasks=[task])
result = crew.kickoff()
print(result)
```

LLM Proxy

Change your client's base URL to https://llm.scorecard.io and pass your Scorecard API key in the `x-scorecard-api-key` header. No Scorecard SDK or extra dependencies are required.

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ["OPENAI_API_KEY"],
    base_url="https://llm.scorecard.io",
    default_headers={
        "x-scorecard-api-key": os.environ["SCORECARD_API_KEY"],
    },
)

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "What is the capital of France?"}],
)

print(response.choices[0].message.content)
```
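Under the hood, the OpenAI client sends its usual request to the proxy host with one extra header. The stdlib sketch below builds, but does not send, the equivalent raw request so you can see both credentials travelling together. The `/chat/completions` path is an assumption based on how the OpenAI Python client appends paths to `base_url`, and `build_proxy_request` is a hypothetical helper; verify the path before relying on it from non-SDK callers.

```python
import json
import os
import urllib.request

PROXY_BASE_URL = "https://llm.scorecard.io"


def build_proxy_request(prompt: str) -> urllib.request.Request:
    """Build, without sending, a chat completion request routed through the proxy."""
    body = {
        "model": "gpt-4o",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/chat/completions",  # assumed path, see lead-in
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The provider key still authenticates the call to OpenAI.
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '<openai-key>')}",
            # The Scorecard key identifies your Scorecard account to the proxy.
            "x-scorecard-api-key": os.environ.get("SCORECARD_API_KEY", "<scorecard-key>"),
        },
    )


req = build_proxy_request("What is the capital of France?")
print(req.full_url, req.get_method())
# To actually send it: urllib.request.urlopen(req)
```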

OpenTelemetry

Send traces directly using any OpenTelemetry SDK (Python, TypeScript, Java, Go, .NET, Rust).

- Endpoint: `https://tracing.scorecard.io/otel/v1/traces`
- Auth: `Authorization: Bearer <SCORECARD_API_KEY>`

Install the dependencies for your language.

Python:

```shell
pip install opentelemetry-api opentelemetry-sdk opentelemetry-exporter-otlp-proto-http anthropic
```

TypeScript (Node.js):

```shell
npm install @opentelemetry/api @opentelemetry/sdk-trace-node @opentelemetry/sdk-trace-base @opentelemetry/exporter-trace-otlp-http @opentelemetry/resources @opentelemetry/semantic-conventions
```

Python example:

```python
import os

from anthropic import Anthropic
from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.resources import SERVICE_NAME, Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor

resource = Resource.create({SERVICE_NAME: "my-app"})

trace_provider = TracerProvider(resource=resource)
trace_provider.add_span_processor(
    BatchSpanProcessor(
        # OTLP/HTTP exporter: pass the full traces URL, including /v1/traces.
        OTLPSpanExporter(
            endpoint="https://tracing.scorecard.io/otel/v1/traces",
            headers={"Authorization": f"Bearer {os.environ['SCORECARD_API_KEY']}"},
        )
    )
)
trace.set_tracer_provider(trace_provider)
tracer = trace.get_tracer(__name__)

client = Anthropic()


@tracer.start_as_current_span("chat")
def chat(prompt: str) -> str:
    span = trace.get_current_span()
    span.set_attributes({
        "gen_ai.system": "anthropic",
        "gen_ai.request.model": "claude-sonnet-4-20250514",
    })
    message = client.messages.create(
        model="claude-sonnet-4-20250514",
        max_tokens=1024,
        messages=[{"role": "user", "content": prompt}],
    )
    return message.content[0].text


result = chat("What is the capital of France?")
print(result)

# Flush buffered spans before the process exits.
trace_provider.force_flush()
```
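To see roughly what an OpenTelemetry SDK sends to the endpoint, here is a stdlib-only sketch that assembles a single-span OTLP/HTTP JSON payload and a request for `https://tracing.scorecard.io/otel/v1/traces`. It assumes the endpoint accepts the JSON encoding of OTLP (`Content-Type: application/json`); SDK exporters typically send protobuf, so treat this as a debugging aid rather than a recommended client. `build_otlp_request` is a hypothetical helper, and the `gen_ai.prompt.0.content` / `gen_ai.completion.0.content` keys are an assumed OpenLLMetry-style convention.

```python
import json
import os
import secrets
import time
import urllib.request

ENDPOINT = "https://tracing.scorecard.io/otel/v1/traces"


def _attr(key: str, value: str) -> dict:
    # OTLP JSON wraps every attribute value in a typed container.
    return {"key": key, "value": {"stringValue": value}}


def build_otlp_request(prompt: str, completion: str) -> urllib.request.Request:
    """Build (not send) an OTLP/HTTP JSON export containing one LLM span."""
    end_ns = time.time_ns()
    start_ns = end_ns - 250_000_000  # pretend the call took 250 ms
    span = {
        "traceId": secrets.token_hex(16),  # 16 bytes -> 32 hex chars
        "spanId": secrets.token_hex(8),    # 8 bytes -> 16 hex chars
        "name": "chat",
        "kind": 3,  # SPAN_KIND_CLIENT
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
        "attributes": [
            _attr("gen_ai.system", "anthropic"),
            _attr("gen_ai.request.model", "claude-sonnet-4-20250514"),
            # Assumed OpenLLMetry-style prompt/completion keys.
            _attr("gen_ai.prompt.0.content", prompt),
            _attr("gen_ai.completion.0.content", completion),
        ],
    }
    payload = {
        "resourceSpans": [{
            "resource": {"attributes": [_attr("service.name", "my-app")]},
            "scopeSpans": [{"scope": {"name": "manual-example"}, "spans": [span]}],
        }]
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('SCORECARD_API_KEY', '<key>')}",
        },
    )


req = build_otlp_request("What is the capital of France?", "Paris.")
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```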

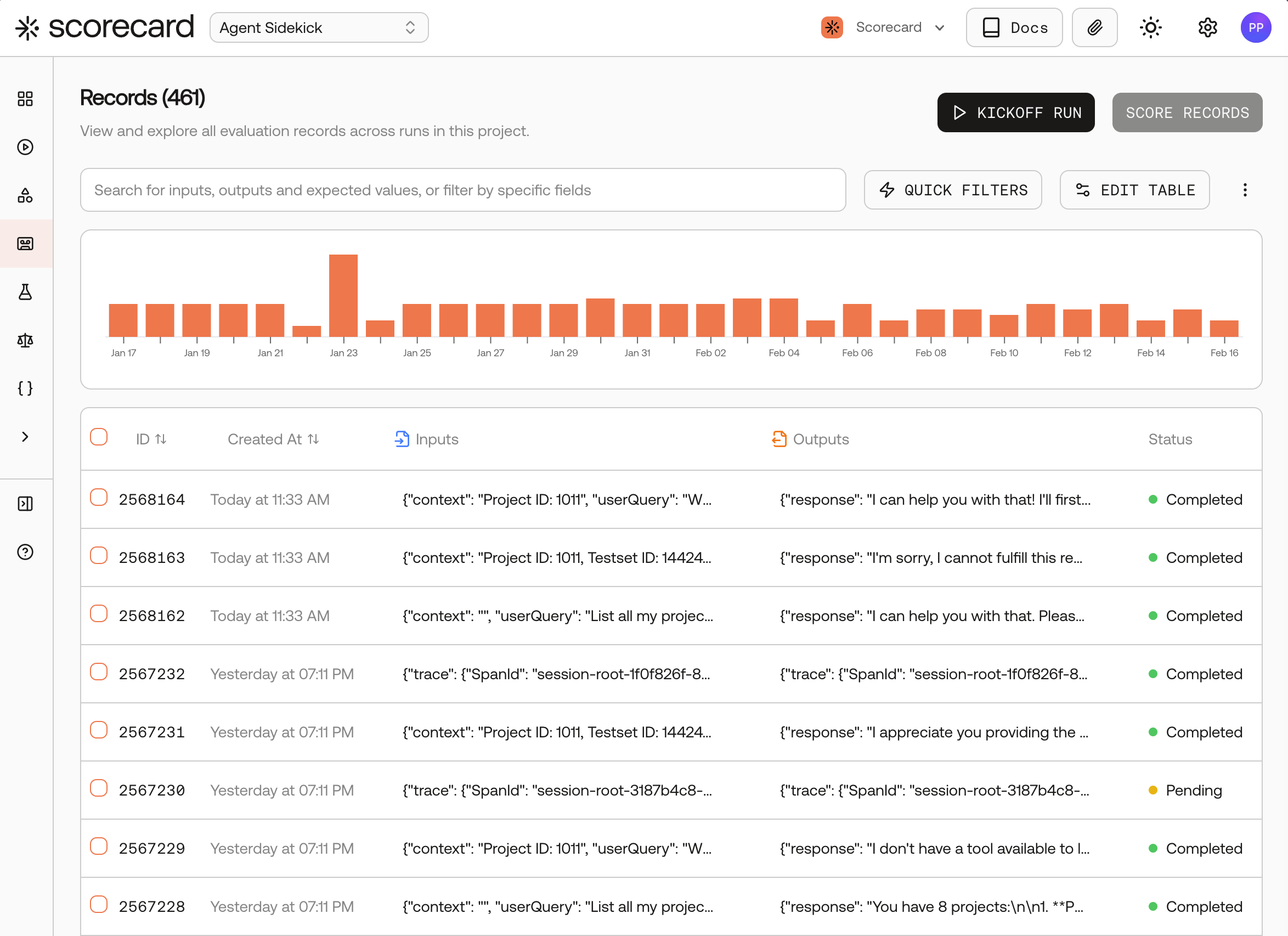

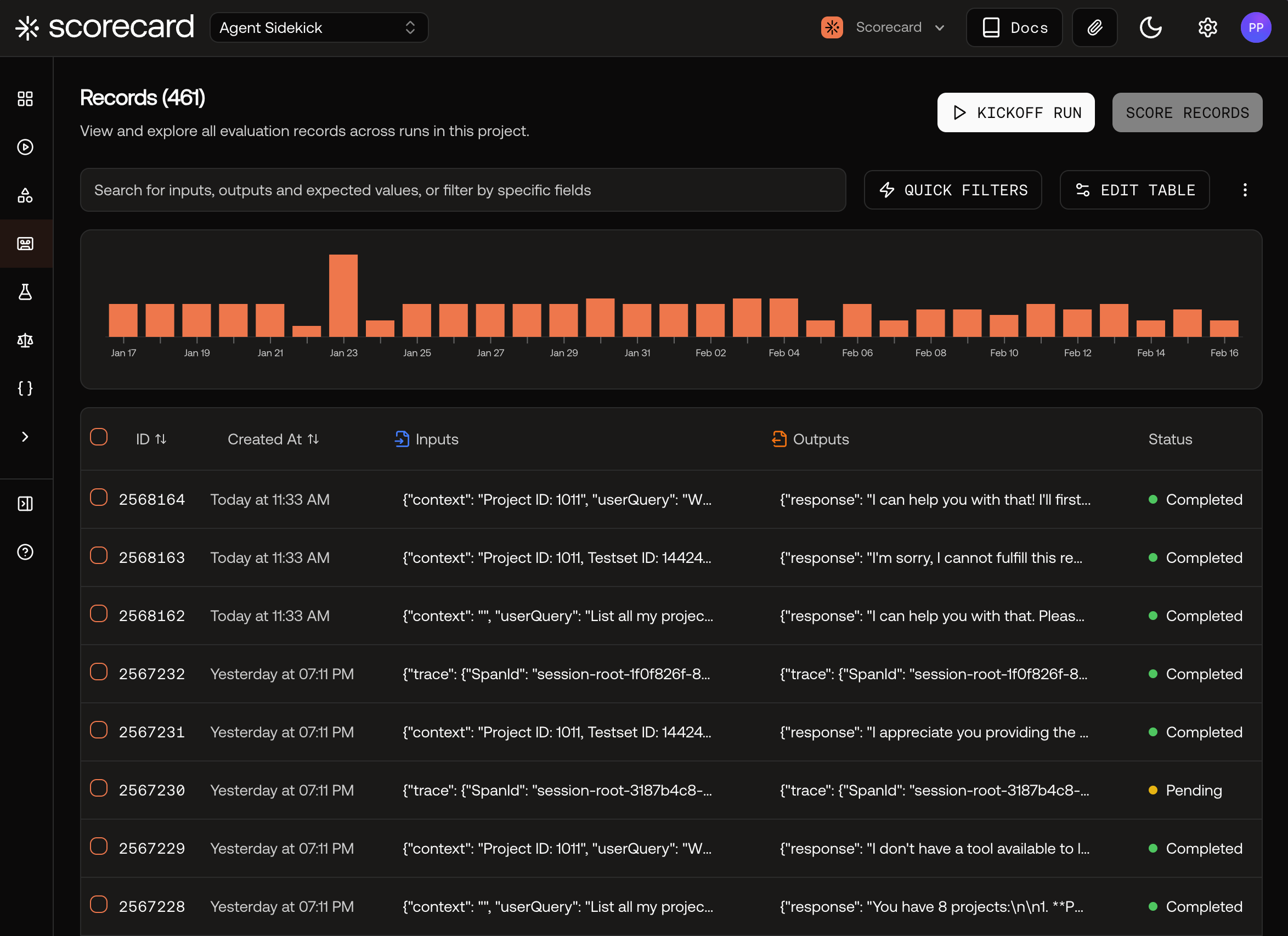

View Traces in the Records Page

Ingested traces appear on the Records page alongside records from other sources (API, Playground, Kickoff). Each row shows the record’s inputs, outputs, status, source, trace ID, and metric scores.

You can search by inputs, outputs, and expected values, or filter by status, source, `trace.id`, `run.id`, `testcase.id`, metric, metadata, time range, and more. Use Quick Filters for presets such as “My Recent Records”, and customise the visible columns via the Edit Table modal.

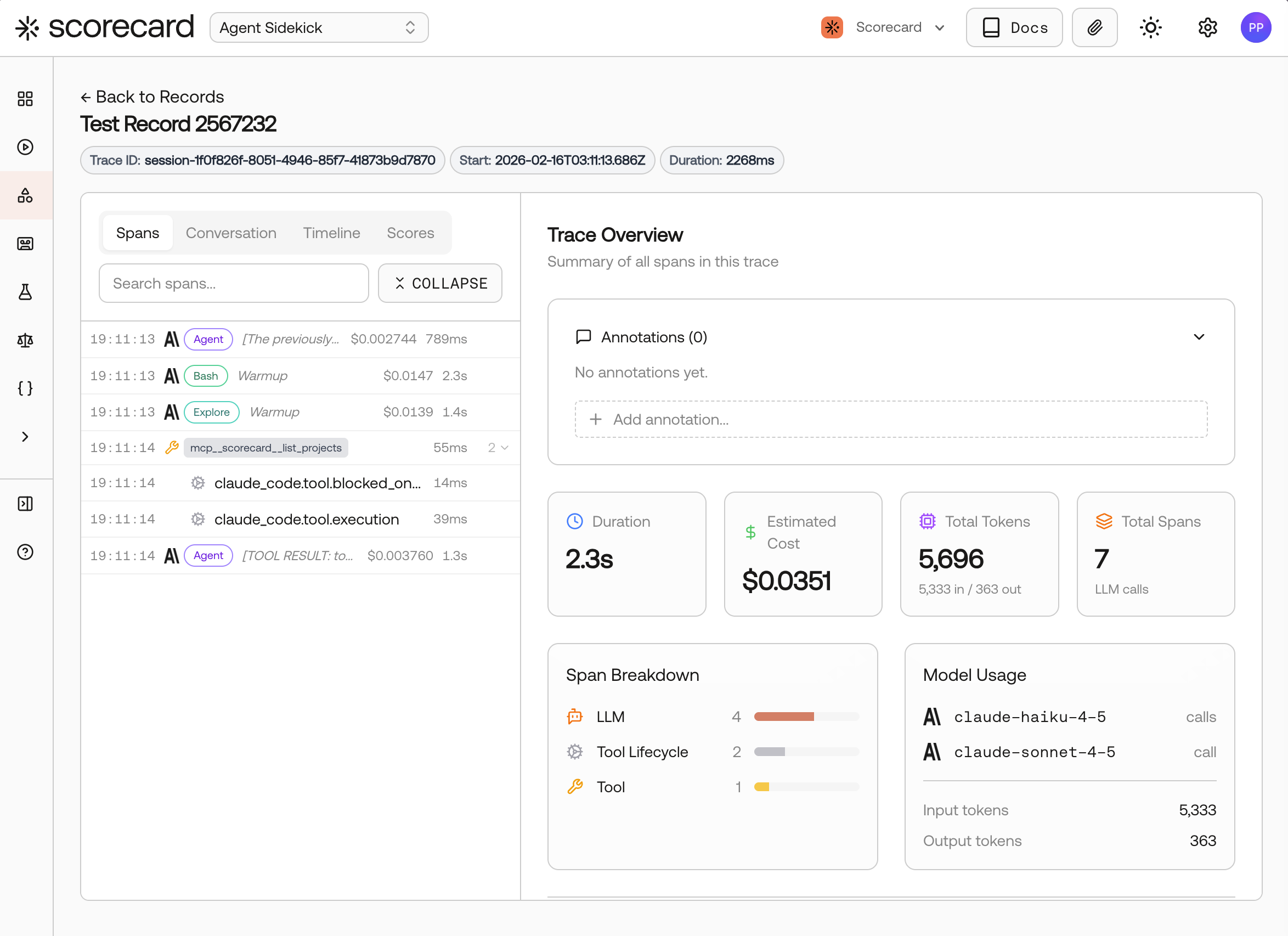

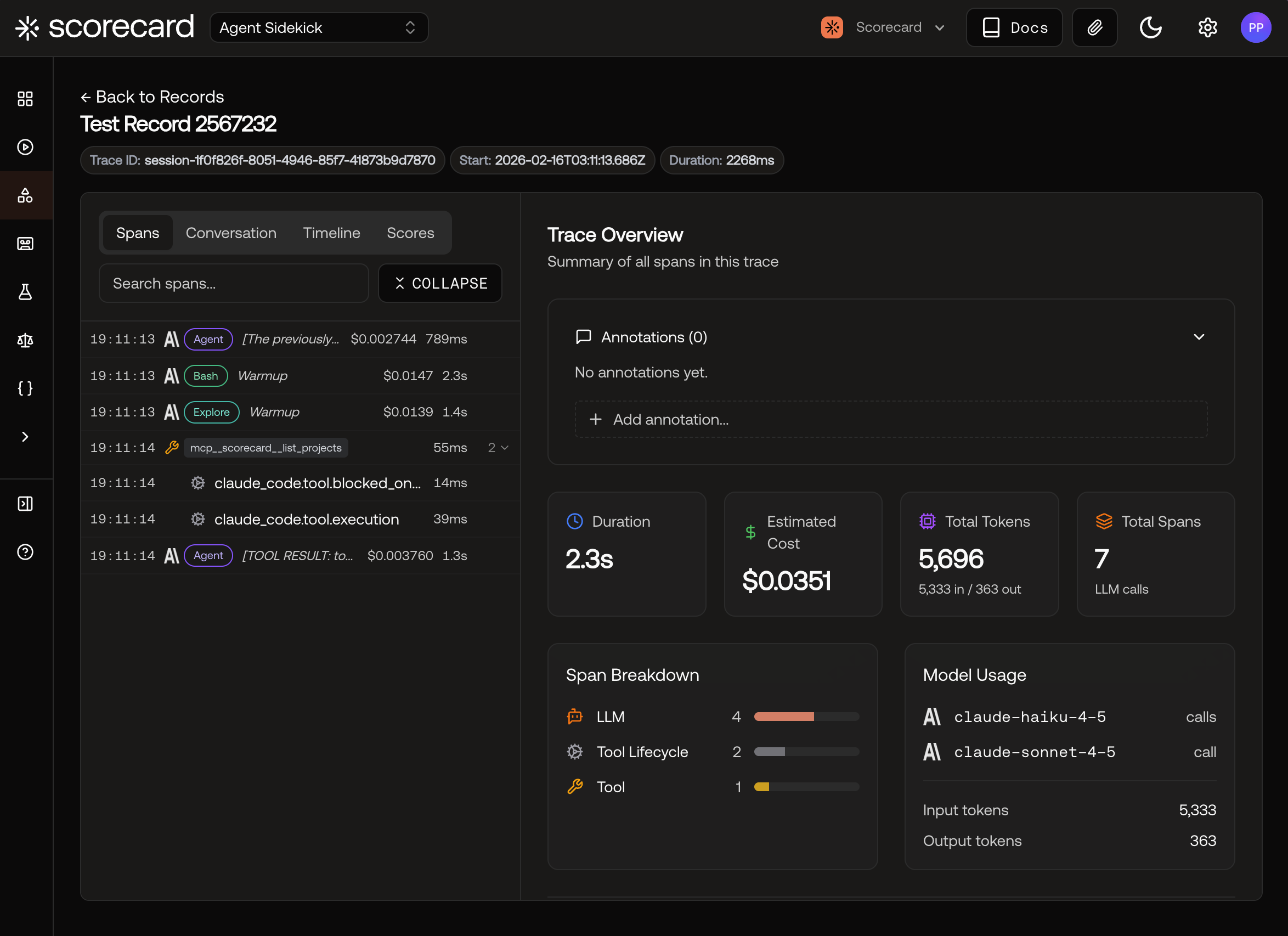

Open a record to see more details, including the span tree, conversation (for chat-based traces), timeline, and scores.

Trace Grouping

When running batch operations or multi-step workflows, you can group related traces into a single run using the scorecard.tracing_group_id span attribute. This makes it easier to track and analyze workflows that span multiple LLM calls.

How it works

Add the scorecard.tracing_group_id attribute to your spans with a shared identifier. Scorecard automatically groups spans with the same group ID into a single run.

```python
from opentelemetry import trace

tracer = trace.get_tracer(__name__)

# Use the same group_id for all related operations
group_id = "batch-job-123"

with tracer.start_as_current_span("process_document") as span:
    span.set_attribute("scorecard.tracing_group_id", group_id)
    ...  # Your LLM call here

with tracer.start_as_current_span("summarize_results") as span:
    span.set_attribute("scorecard.tracing_group_id", group_id)
    ...  # Another LLM call in the same workflow
```

Use cases

- Batch processing: Group all documents processed in a single batch job

- Multi-step agents: Track all LLM calls within an agent’s execution

- Workflows: Link related operations across different services

- A/B testing: Group traces by experiment variant for comparison

Traces with the same scorecard.tracing_group_id will appear together in the same run, making it easy to analyze aggregate metrics across related operations.

AI-Specific Error Detection

Scorecard’s tracing goes beyond technical failures to detect AI-specific behavioral issues that traditional observability misses. The system acts as an always-on watchdog, analyzing every AI interaction to catch both obvious technical errors and subtle behavioral problems that could impact user experience.

Silent Failure Detection

The most dangerous errors in AI systems are “silent failures”, where your AI responds, but responds incorrectly. Scorecard automatically detects behavioral errors including off-topic responses, workflow interruptions, safety violations, hallucinations, and context loss. These silent failures often go unnoticed without specialized AI observability, yet they can severely erode user trust and application effectiveness.

Technical errors like rate limits, timeouts, and API failures are captured automatically through standard trace error recording. However, AI applications also face unique challenges like semantic drift, safety policy violations, factual accuracy issues, and task completion failures that require intelligent analysis beyond traditional error logging.

Custom Error Detection

Create custom metrics through Scorecard’s UI to detect application-specific behavioral issues. Design AI-powered metrics that analyze trace data for off-topic responses, safety violations, or task completion failures. These custom metrics automatically evaluate your traces and surface problematic interactions that would otherwise go unnoticed in production.

Supported Frameworks & Providers

Scorecard traces LLM applications built with popular open-source frameworks through OpenLLMetry. OpenLLMetry provides automatic instrumentation for:

Application Frameworks

- CrewAI – Multi-agent collaboration

- Haystack – Search and question-answering pipelines

- LangChain – Chains, agents, and tool calls

- Langflow – Visual workflow builder

- LangGraph – Multi-step workflows and state machines

- LiteLLM – Unified interface for 100+ LLMs

- LlamaIndex – RAG pipelines and document retrieval

- OpenAI Agents SDK – Assistants API and function calling

- Vercel AI SDK – Full-stack AI applications

LLM Providers

- Aleph Alpha

- Anthropic

- AWS Bedrock

- AWS SageMaker

- Azure OpenAI

- Cohere

- Google Gemini

- Google Vertex AI

- Groq

- HuggingFace

- IBM Watsonx AI

- Mistral AI

- Ollama

- OpenAI

- Replicate

- Together AI

- and more

Vector Databases

- Chroma

- LanceDB

- Marqo

- Milvus

- Pinecone

- Qdrant

- Weaviate

For the complete list of supported integrations, see the OpenLLMetry repository. All integrations are built on OpenTelemetry standards and maintained by the community.

Custom Providers

For frameworks or providers not listed above, you can use:

- HTTP Instrumentation: OpenLLMetry’s `instrument_http()` for HTTP-based APIs

- Manual Spans: Emit custom OpenTelemetry spans for proprietary systems

See the OpenLLMetry documentation for manual instrumentation guides.

Use cases

- Production observability for LLM quality and safety

- Debugging slow/failed requests with full span context

- Auditing prompts/completions for compliance

- Attributing token cost and latency to services/cohorts

- Building evaluation datasets from real traffic (Trace to Testcase)

Next steps

- Follow the Tracing Quickstart to send your first trace.

- Set up Claude Agent SDK Tracing with zero code changes.

- Instrument LangChain chains and agents.

- Use the Vercel AI SDK wrapper for full-stack AI apps.

- Open the Colab notebook for an interactive tour.

Happy tracing! 🚀