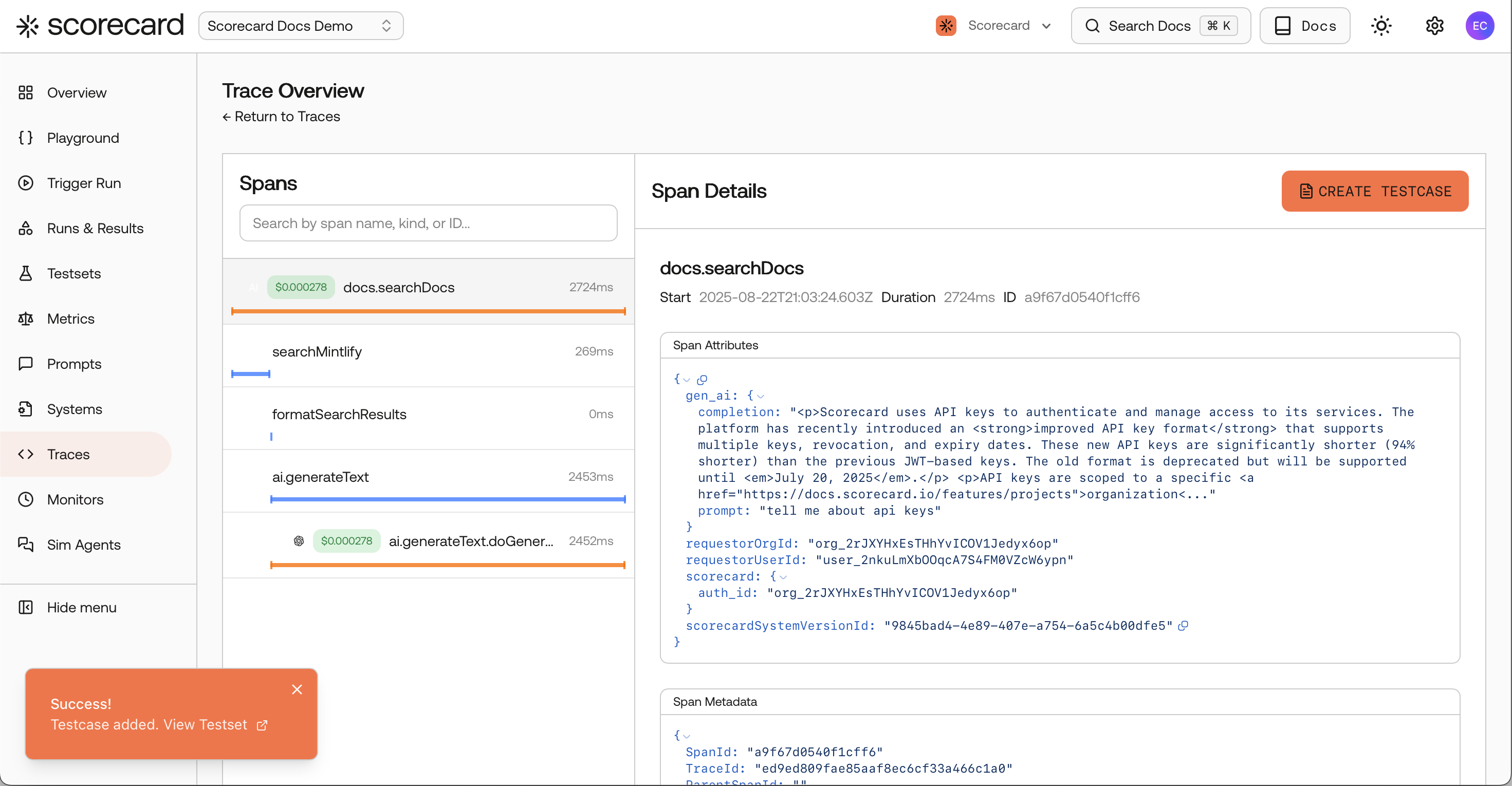

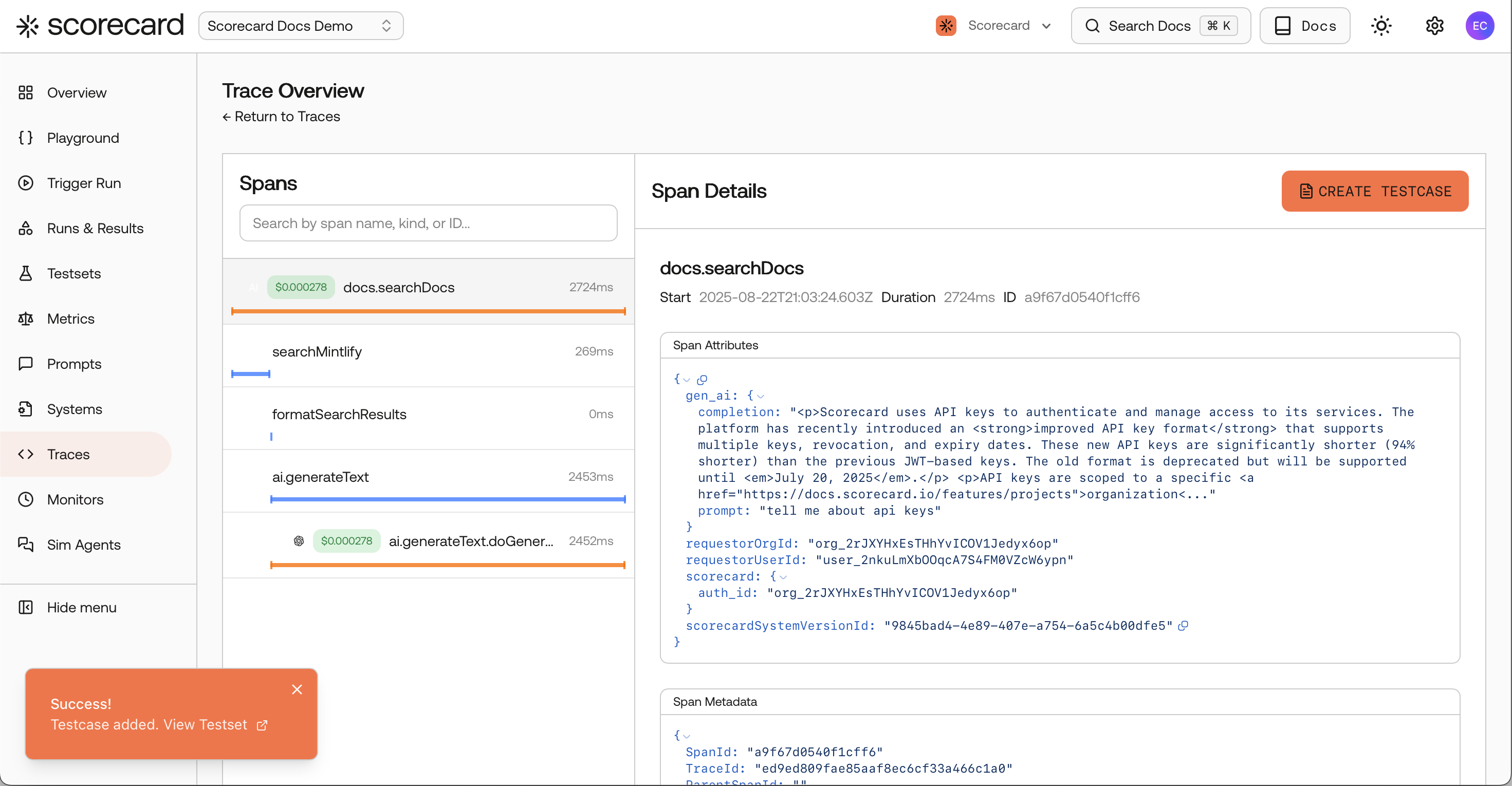

Create testcase from span.

Why it matters

- Grow testsets from real user prompts and outputs.

- Reproduce tricky scenarios when evaluating new models or prompts.

- Track quality over time with consistent, production-grounded inputs.

If you call it something else

- Traces → Testcases: Similar to “snapshotting” production examples into a labeled dataset. Scorecard builds this into the trace UI and auto-maps the prompt and completion fields.

- Where testcases live: Saved into Testsets so you can re-run evaluations and compare Runs & Results over time.

- What’s extracted: Prompt and completion are pulled from common attribute keys (`openinference.*`, `ai.prompt`/`ai.response`, `gen_ai.*`).
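The auto-mapping of prompt and completion fields can be pictured as a priority lookup over a span's attributes. The sketch below is a minimal illustration that assumes the attributes arrive as a flat key/value mapping. The specific keys in `PROMPT_KEYS` and `COMPLETION_KEYS` are hypothetical picks from the three attribute families listed above, not Scorecard's actual extraction rules.

```python
from typing import Mapping, Optional, Tuple

# Hypothetical candidate keys, checked in order. They are illustrative
# examples from the openinference.*, ai.*, and gen_ai.* families; the
# real key list Scorecard uses may differ.
PROMPT_KEYS: Tuple[str, ...] = (
    "openinference.input.value",
    "ai.prompt",
    "gen_ai.prompt",
)
COMPLETION_KEYS: Tuple[str, ...] = (
    "openinference.output.value",
    "ai.response",
    "gen_ai.completion",
)


def first_present(attrs: Mapping[str, object], keys: Tuple[str, ...]) -> Optional[str]:
    """Return the first non-empty value among `keys`, converted to a string."""
    for key in keys:
        value = attrs.get(key)
        if value is not None and value != "":
            return str(value)
    return None


def extract_prompt_completion(
    attrs: Mapping[str, object],
) -> Tuple[Optional[str], Optional[str]]:
    """Pick a (prompt, completion) pair from span attributes; None if missing."""
    return first_present(attrs, PROMPT_KEYS), first_present(attrs, COMPLETION_KEYS)


if __name__ == "__main__":
    span_attrs = {"gen_ai.prompt": "What is 2+2?", "gen_ai.completion": "4"}
    print(extract_prompt_completion(span_attrs))  # ('What is 2+2?', '4')
```

Checking keys in a fixed order keeps the mapping deterministic when a span carries attributes from more than one instrumentation library.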

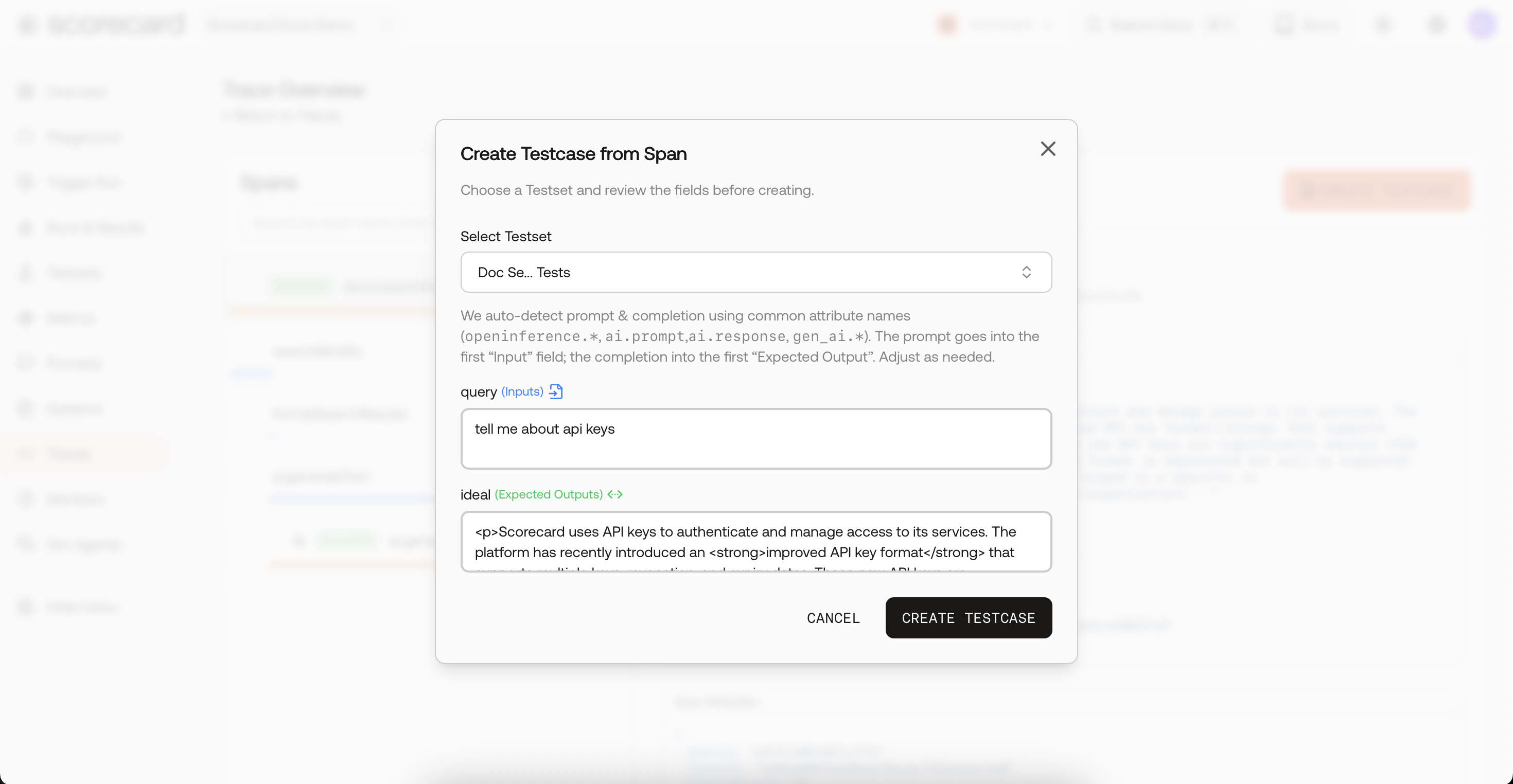

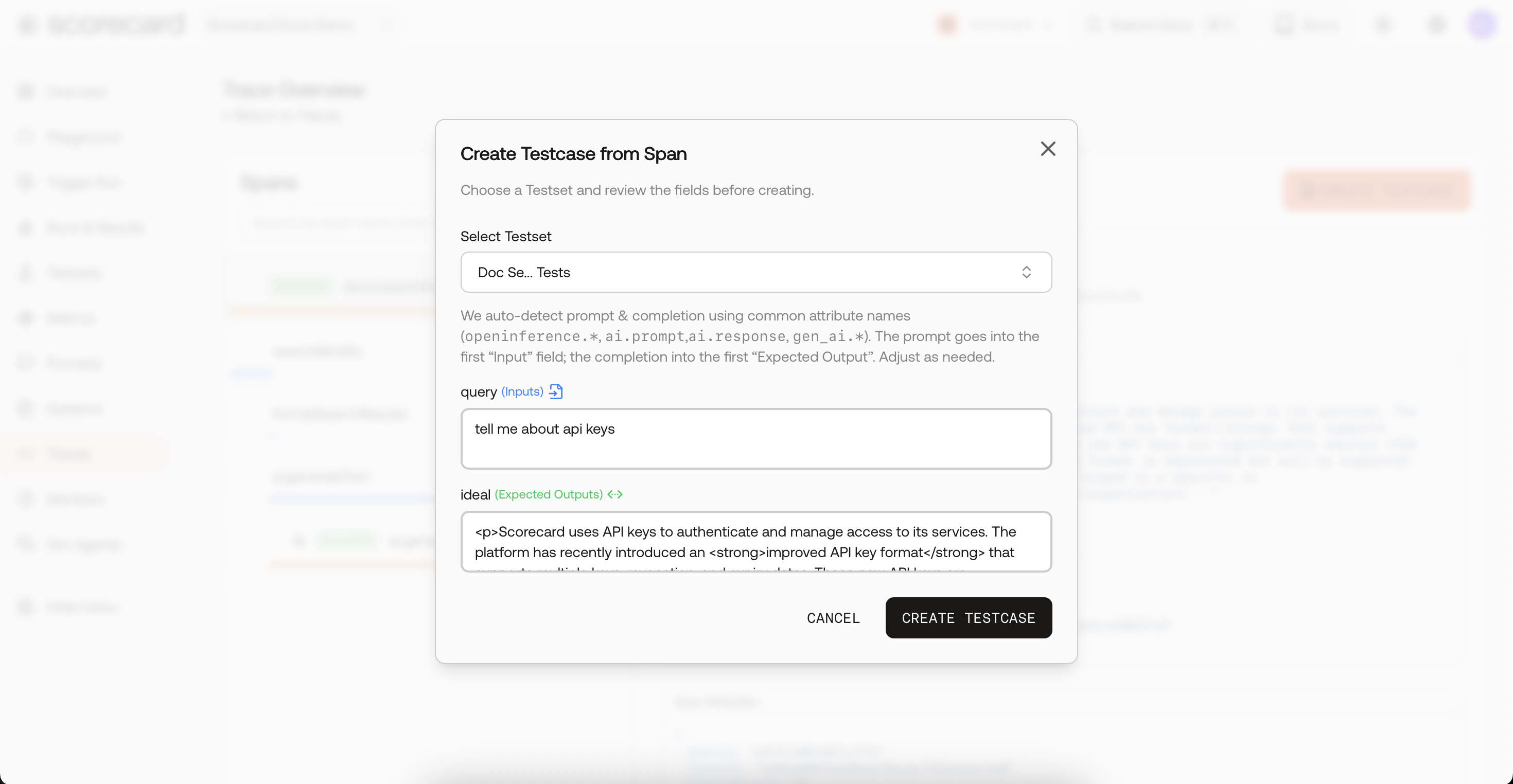

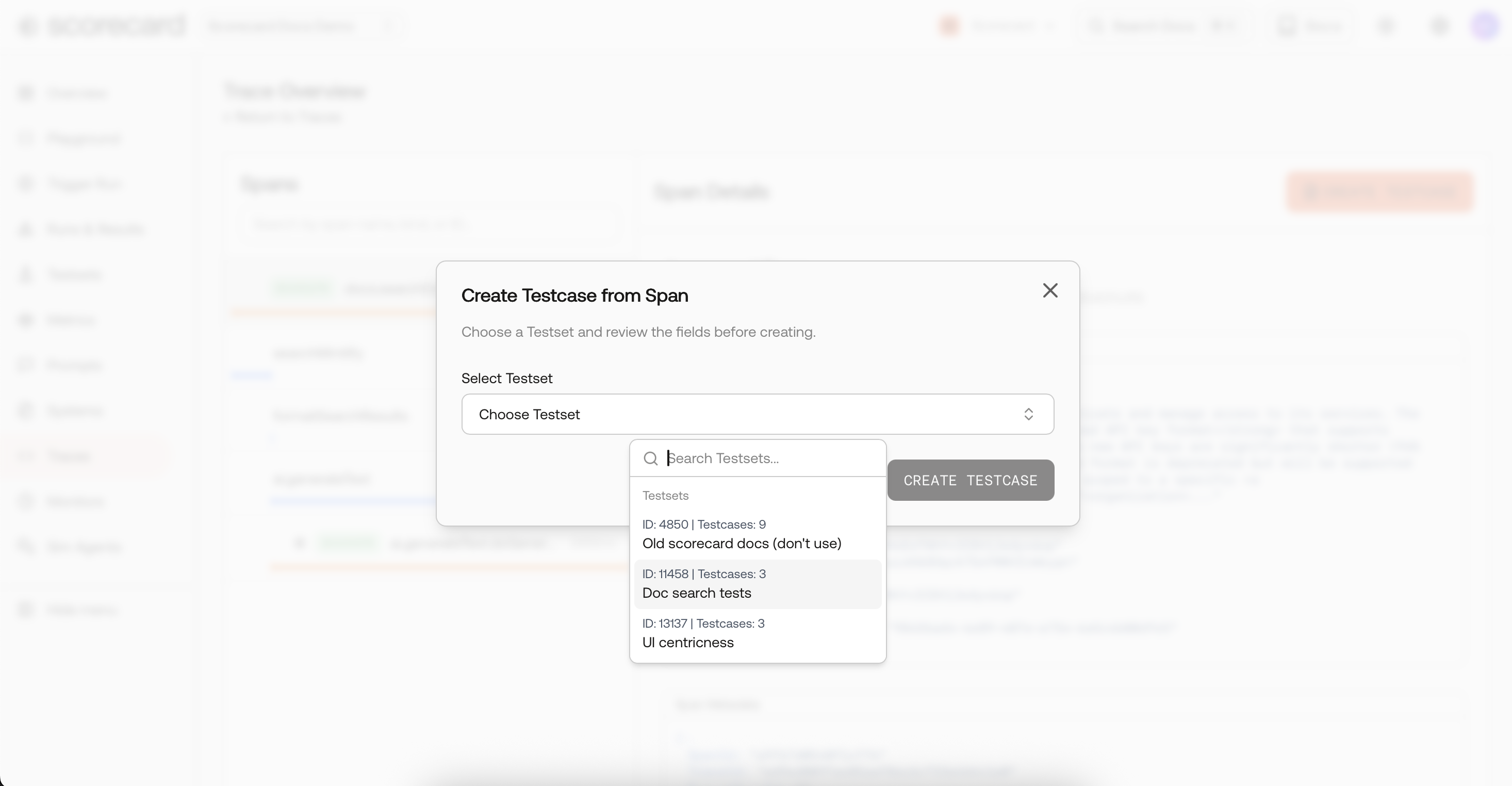

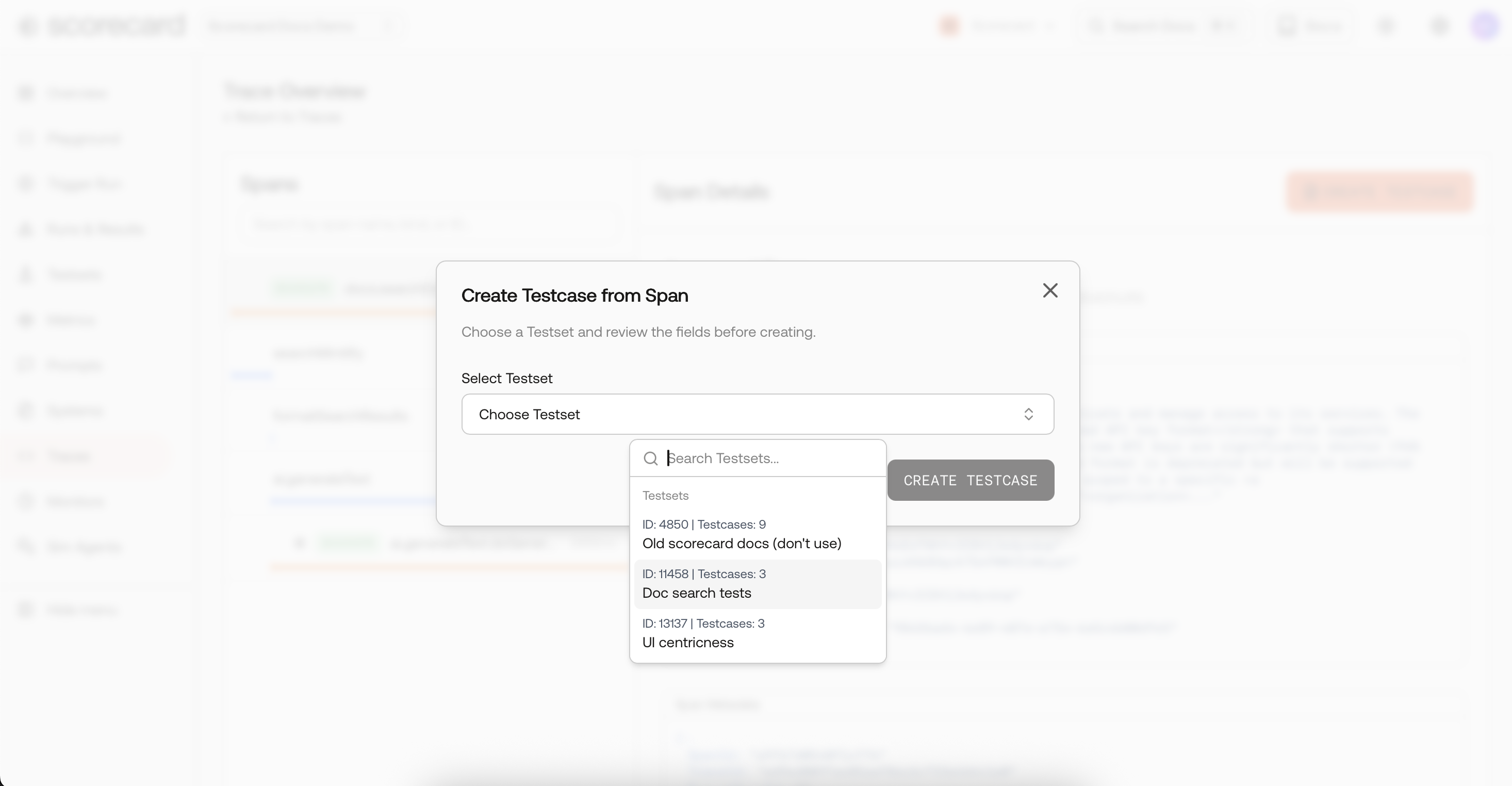

Create testcase modal.

How it works

- Open any trace and drill down to the span that contains the LLM call.

- Click Create Testcase (document icon).

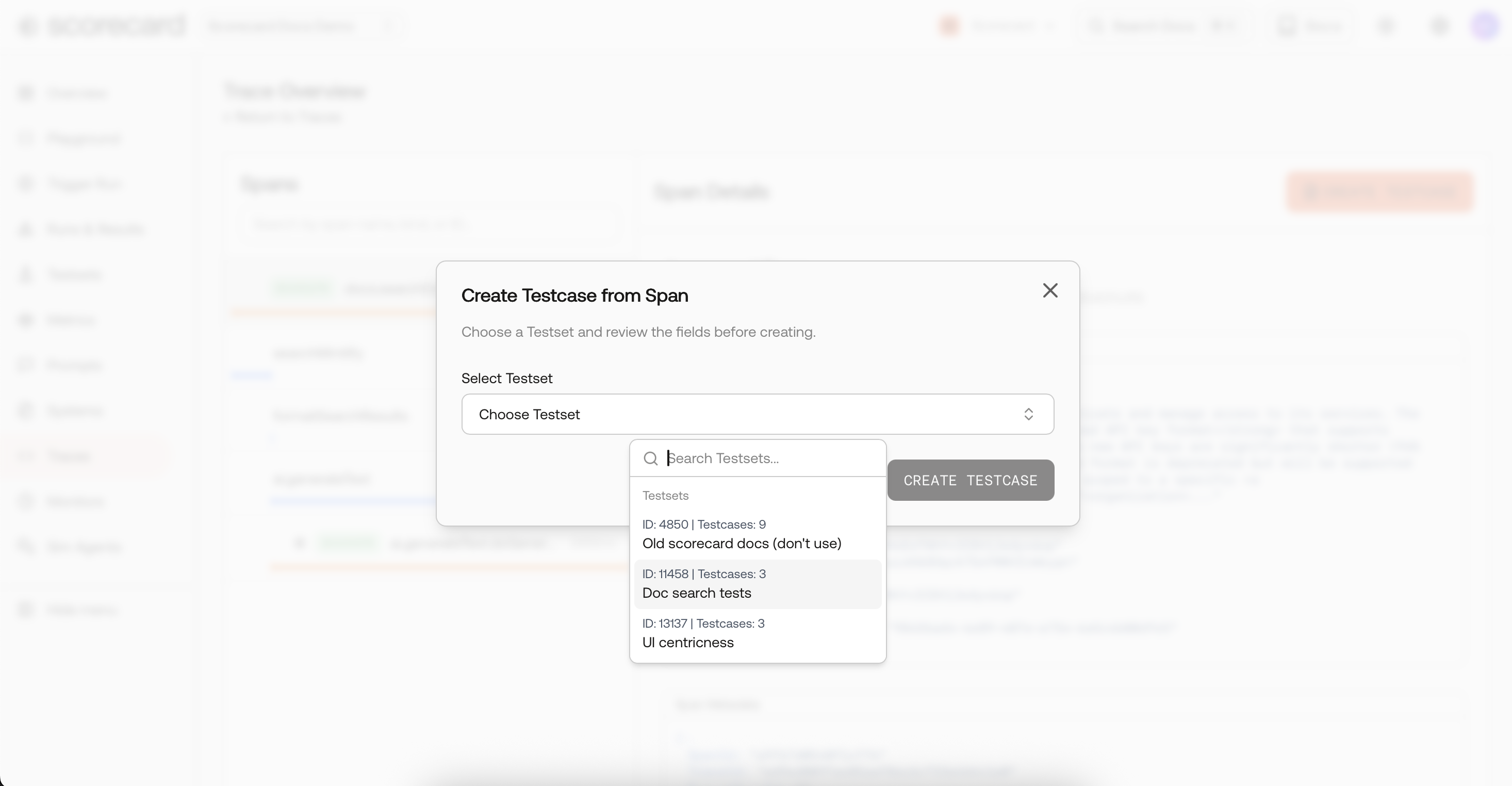

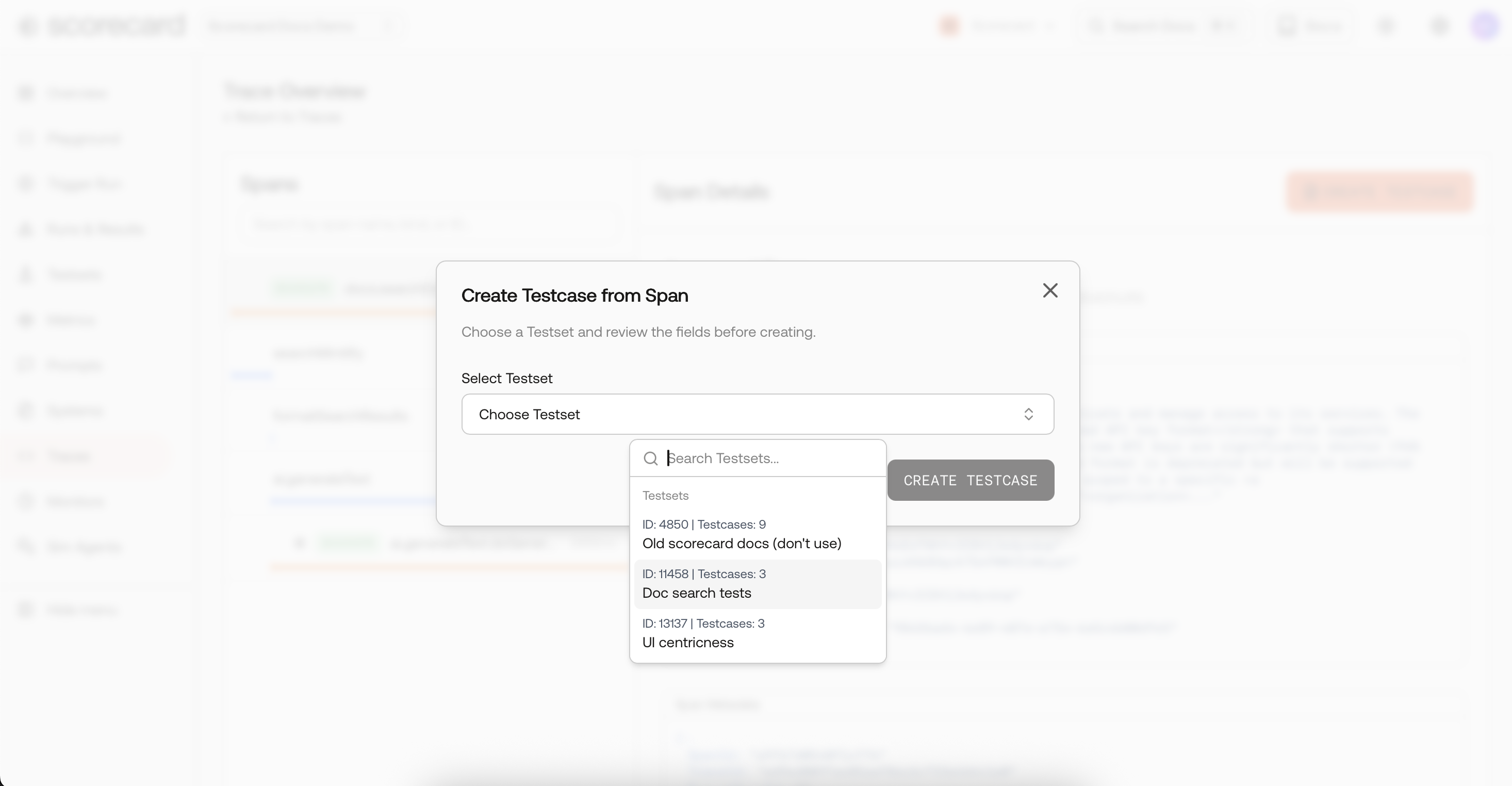

- Pick a Testset or create a new one.

- Scorecard auto-extracts the prompt and completion from span attributes (`openinference.*`, `ai.prompt`/`ai.response`, `gen_ai.*`). You can adjust the fields before saving.

- The testcase appears immediately in the selected testset.

Select Testset dialog.

Create testcase result.

Use cases

- Create gold-standard datasets from production data.

- Run offline evaluations on real cases and validate changes before deployment.

Automating this flow? Use the `/testcases` API to create testcases programmatically from traces.
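As a rough picture of what a programmatic call might look like, the sketch below builds, but does not send, a JSON `POST` to a `/testcases` endpoint using only the Python standard library. Everything other than the `/testcases` path is a hypothetical placeholder: the base URL `API_BASE`, the bearer-token auth header, and the payload field names (`testsetId`, `inputs`, `expected`). Check Scorecard's API reference for the real request shape.

```python
import json
import urllib.request

# Hypothetical placeholder; substitute the real Scorecard API base URL.
API_BASE = "https://api.example.com"


def build_create_testcase_request(
    api_key: str, testset_id: str, prompt: str, completion: str
) -> urllib.request.Request:
    """Build (but do not send) a POST to the /testcases endpoint."""
    payload = {
        "testsetId": testset_id,                 # hypothetical field name
        "inputs": {"prompt": prompt},            # hypothetical field name
        "expected": {"completion": completion},  # hypothetical field name
    }
    return urllib.request.Request(
        url=f"{API_BASE}/testcases",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_create_testcase_request("YOUR_API_KEY", "my-testset", "What is 2+2?", "4")
    print(req.get_method(), req.full_url)  # POST https://api.example.com/testcases
    # Passing req to urllib.request.urlopen would actually send it.
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before any testcase is created.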