Metrics serve as benchmarks for assessing the quality of LLM responses, while scoring is the process of applying these metrics to generate actionable insights about your LLM application.

Assess Your LLM Quality With Scorecard’s Metrics

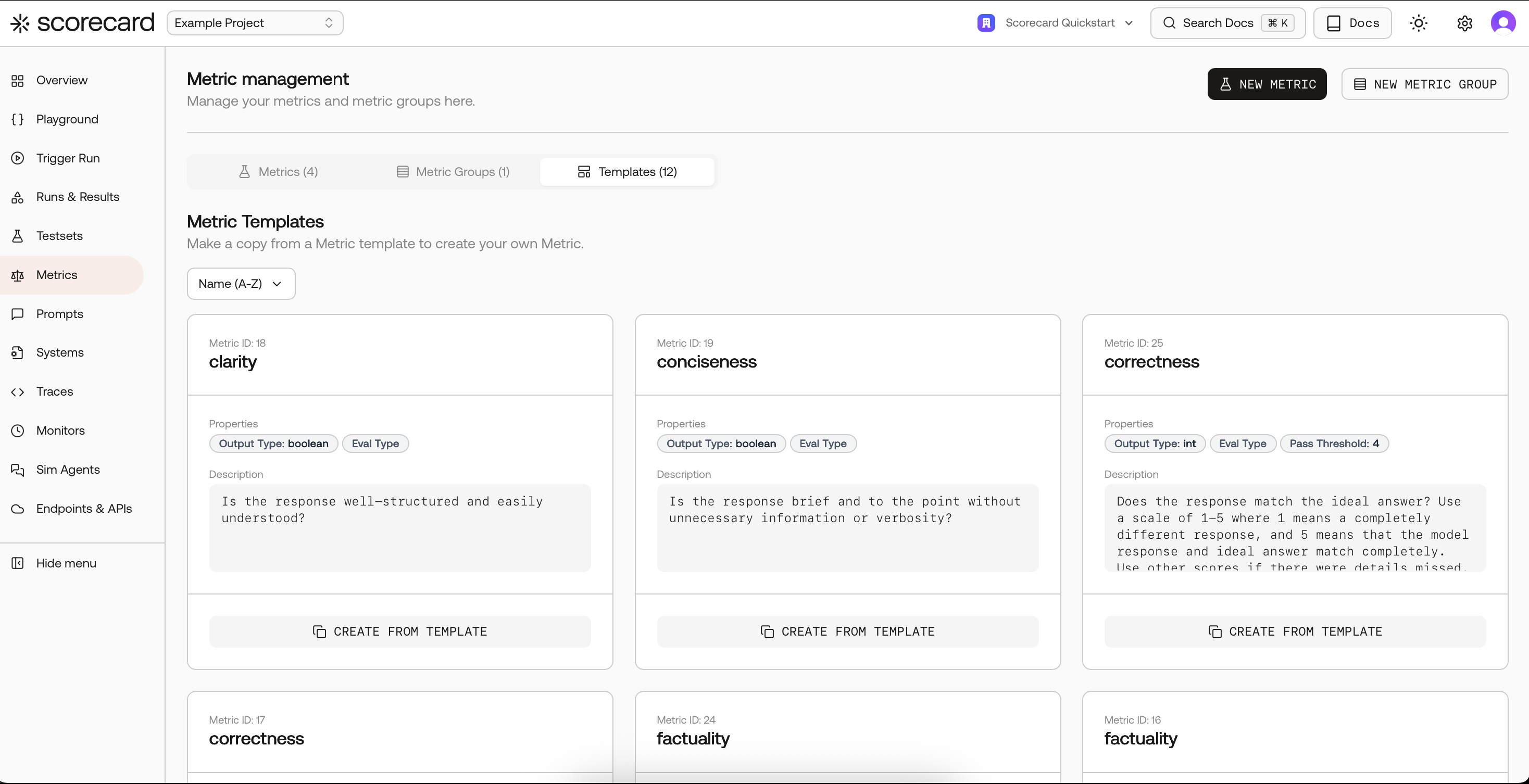

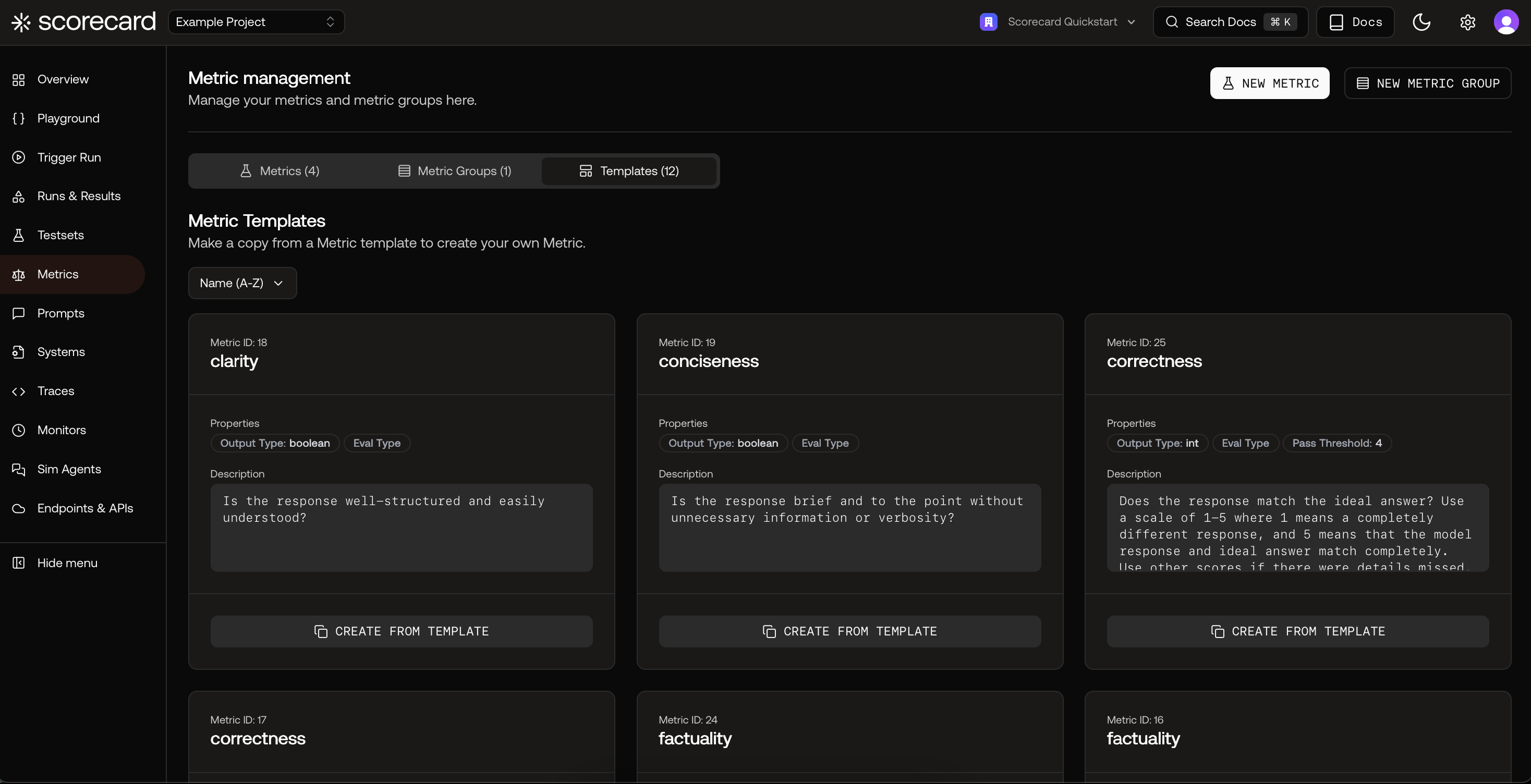

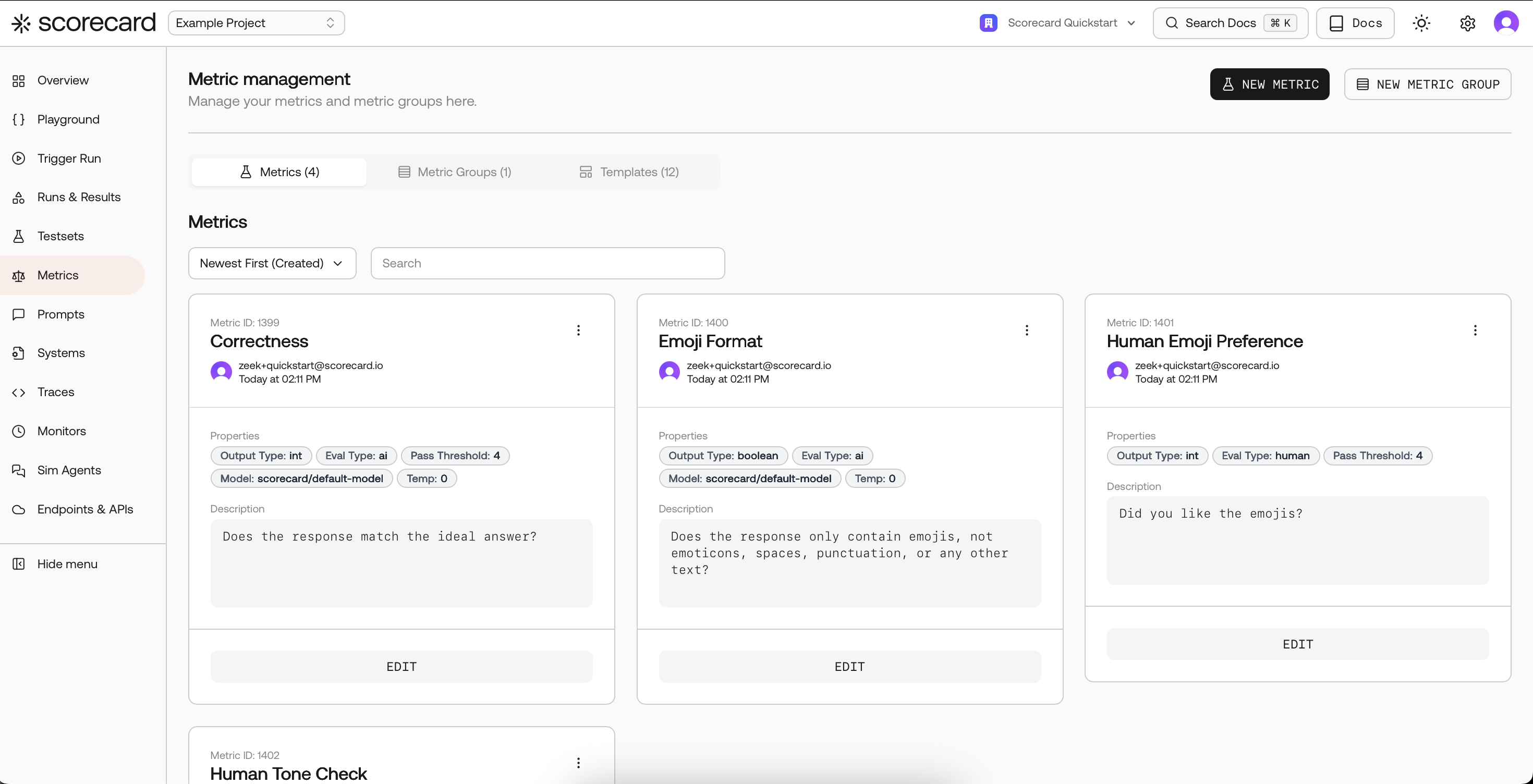

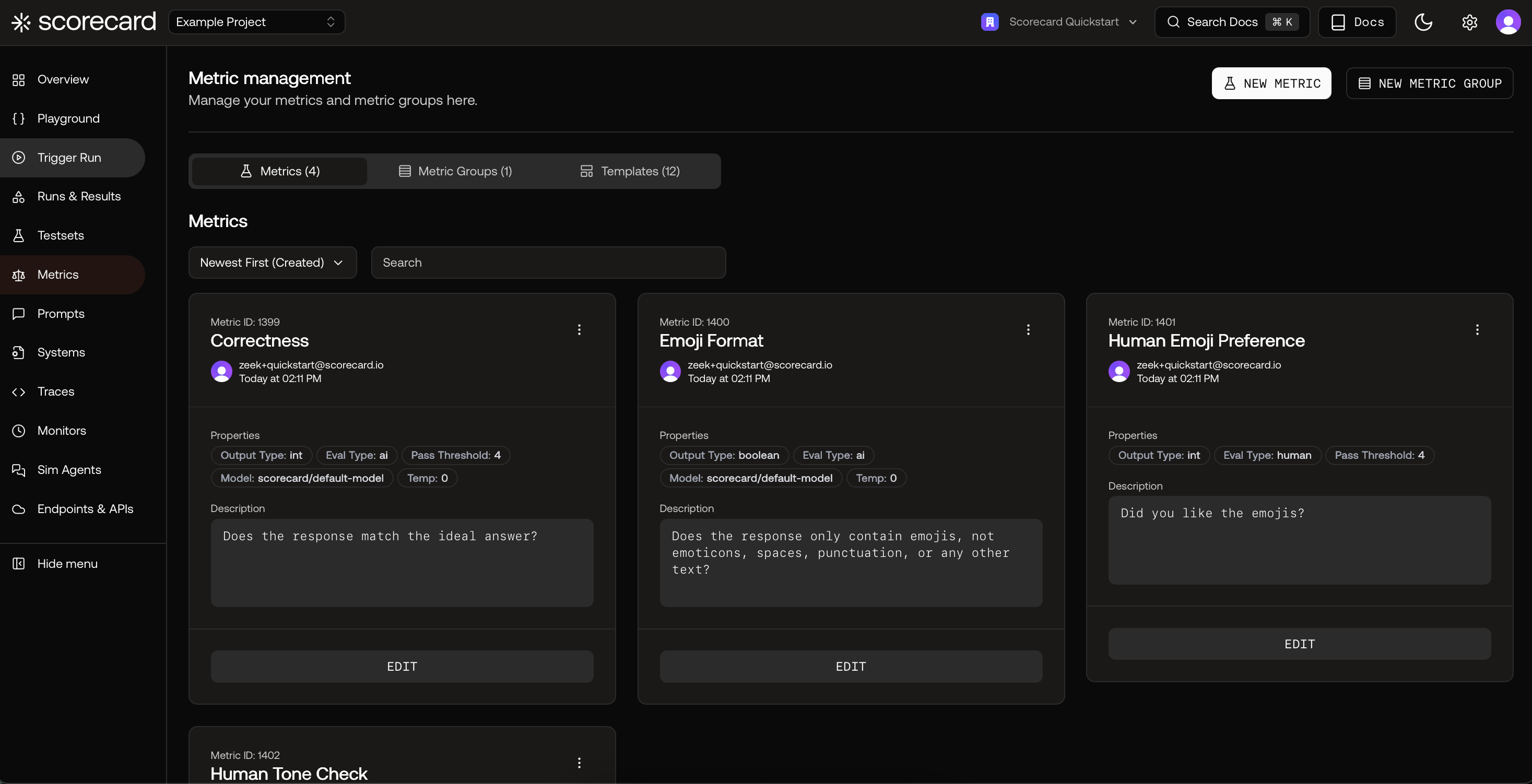

Scorecard’s metric system is organized into three main components:

- Metrics: Individual evaluation criteria that assess specific aspects of your LLM’s performance.

- Metric Groups: Collections of related metrics that work together to provide comprehensive evaluation.

- Templates: Pre-built metric configurations that you can copy and customize for your use case.

Metric Templates

The Scorecard team consists of experts in LLM evaluation, with extensive experience in assessing and deploying large-scale AI applications at some of the world’s leading companies. Our Scorecard Core Metrics, validated by our team, represent industry-standard benchmarks for LLM performance. You can explore these Core Metrics in the Scoring Lab, under “Templates,” to select the ones best suited for your LLM evaluation needs.

Overview of Scorecard Core Metrics in Templates

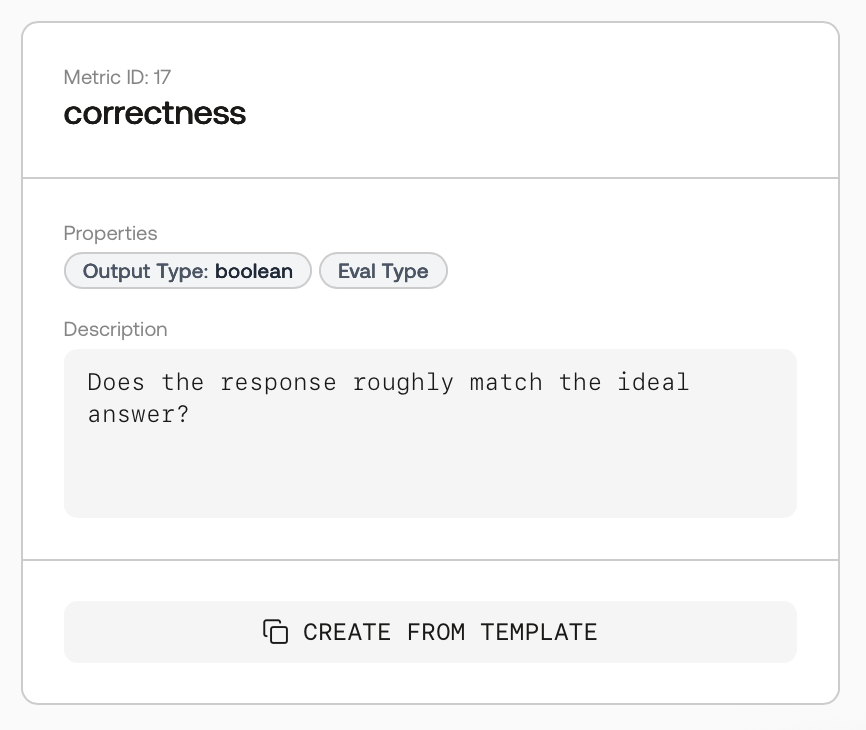

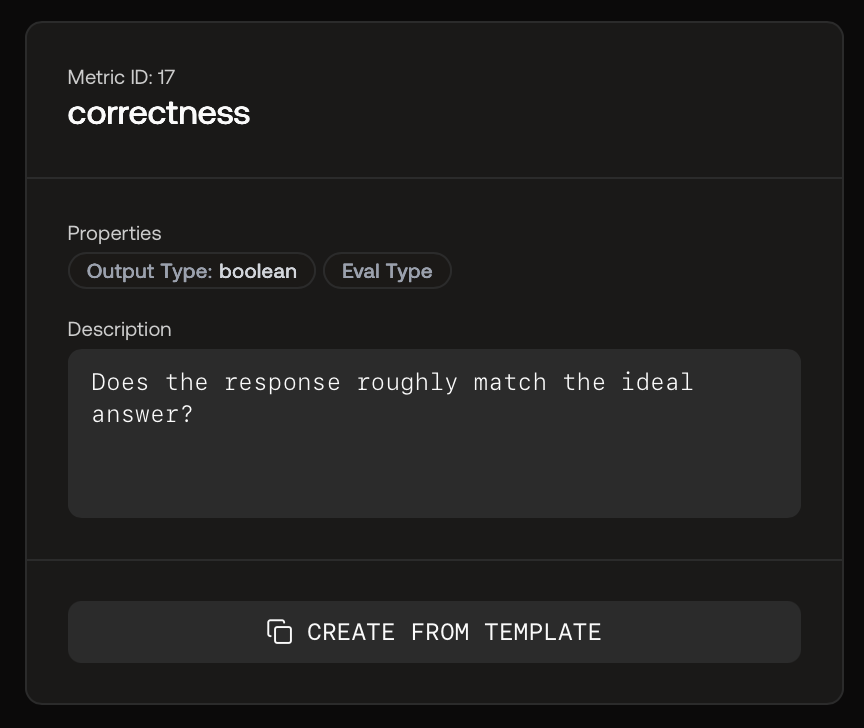

Metric Template to Copy

Second-Party Metrics from MLflow

Scorecard supports running MLflow metrics directly within the Scorecard platform and adds complementary capabilities such as aggregation, A/B comparison, and iteration. Several metrics from MLflow’s genai package are available in the Scorecard metrics library, including:

- Relevance
  - Description: Evaluates the appropriateness, significance, and applicability of an LLM’s output in relation to the input and context.
  - Purpose: Assesses how well the model’s response aligns with the expected context or intent of the input.

- Answer Relevance
  - Description: Assesses the relevance of a response provided by an LLM, considering the accuracy and applicability of the response to the given query.
  - Purpose: Focuses specifically on the relevance of responses in the context of queries posed to the LLM.

- Faithfulness
  - Description: Measures the factual consistency of an LLM’s output with the provided context.
  - Purpose: Ensures that the model’s responses are not only relevant but also accurate and trustworthy, based on the information available in the context.

- Answer Correctness
  - Description: Evaluates the accuracy of the provided output based on a given “ground truth” or expected response.
  - Purpose: Crucial for understanding how well an LLM can generate correct and reliable responses to specific queries.

- Answer Similarity
  - Description: Assesses the semantic similarity between the model’s output and the provided targets or “ground truth”.
  - Purpose: Used to evaluate how closely the generated response matches the expected response in terms of meaning and content.

Second-Party Metrics from RAGAS

You can also use metrics from the RAGAS framework, which specializes in evaluating Retrieval-Augmented Generation (RAG) pipelines. As with MLflow metrics, Scorecard adds complementary capabilities such as aggregation, A/B comparison, and iteration.

Component-Wise Evaluation

To assess the performance of individual components within a RAG pipeline, you can use metrics such as:

- Faithfulness: Measures the factual consistency of the generated response against the provided context.

- Answer Relevancy: Assesses how pertinent the generated response is to the given prompt.

- Context Recall: Evaluates the extent to which the retrieved context aligns with the annotated (ground-truth) response.

- Context Precision: Determines whether the ground-truth relevant items in the retrieved contexts are ranked higher than irrelevant ones.

- Context Relevancy: Evaluates how relevant the retrieved context is to the provided query.
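RAGAS documents Context Precision@K as the sum, over ranks k, of Precision@k weighted by whether the chunk at rank k is relevant, divided by the number of relevant chunks in the top K. The following is a minimal sketch, assuming each retrieved chunk has already been labeled relevant (1) or not (0). In RAGAS itself, an LLM typically produces those labels. The function name is illustrative.

```python
from typing import Sequence


def context_precision_at_k(relevance: Sequence[int]) -> float:
    """Rank-aware precision over the top-K retrieved chunks.

    relevance[i] is 1 if the chunk at rank i + 1 is relevant, else 0.
    """
    total_relevant = sum(relevance)
    if total_relevant == 0:
        return 0.0  # no relevant chunk retrieved at all
    hits = 0
    weighted_sum = 0.0
    for k, v_k in enumerate(relevance, start=1):
        hits += v_k
        precision_at_k = hits / k  # Precision@k = relevant so far / k
        weighted_sum += precision_at_k * v_k  # only ranks holding a relevant chunk count
    return weighted_sum / total_relevant


print(context_precision_at_k([1, 1, 0]))  # relevant chunks ranked first
print(context_precision_at_k([0, 1, 1]))  # same chunks, ranked lower
```

Both lists contain the same two relevant chunks. The first scores 1.0 and the second about 0.58, which is what "ranked higher" means in practice.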

End-to-End Evaluation

To assess the end-to-end performance of a RAG pipeline, you can use metrics such as:

- Answer Semantic Similarity: Assesses the semantic resemblance between the generated response and the ground truth.

- Answer Correctness: Gauges the accuracy of the generated response when compared to the ground truth.
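Answer semantic similarity is commonly computed by embedding both the generated answer and the ground truth, then taking the cosine similarity of the two vectors. The following is a minimal sketch, assuming the embeddings were already produced by some embedding model. The toy vectors below only stand in for real embeddings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as having no similarity
    return dot / (norm_a * norm_b)


# Hypothetical embeddings of a generated answer and the ground truth.
generated = [0.2, 0.7, 0.1]
ground_truth = [0.25, 0.65, 0.05]
print(round(cosine_similarity(generated, ground_truth), 3))
```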

Templates for common MLflow and RAGAS metrics are available under Templates. Copy a template (e.g., Relevance, Faithfulness) and tailor the guidelines to your domain.

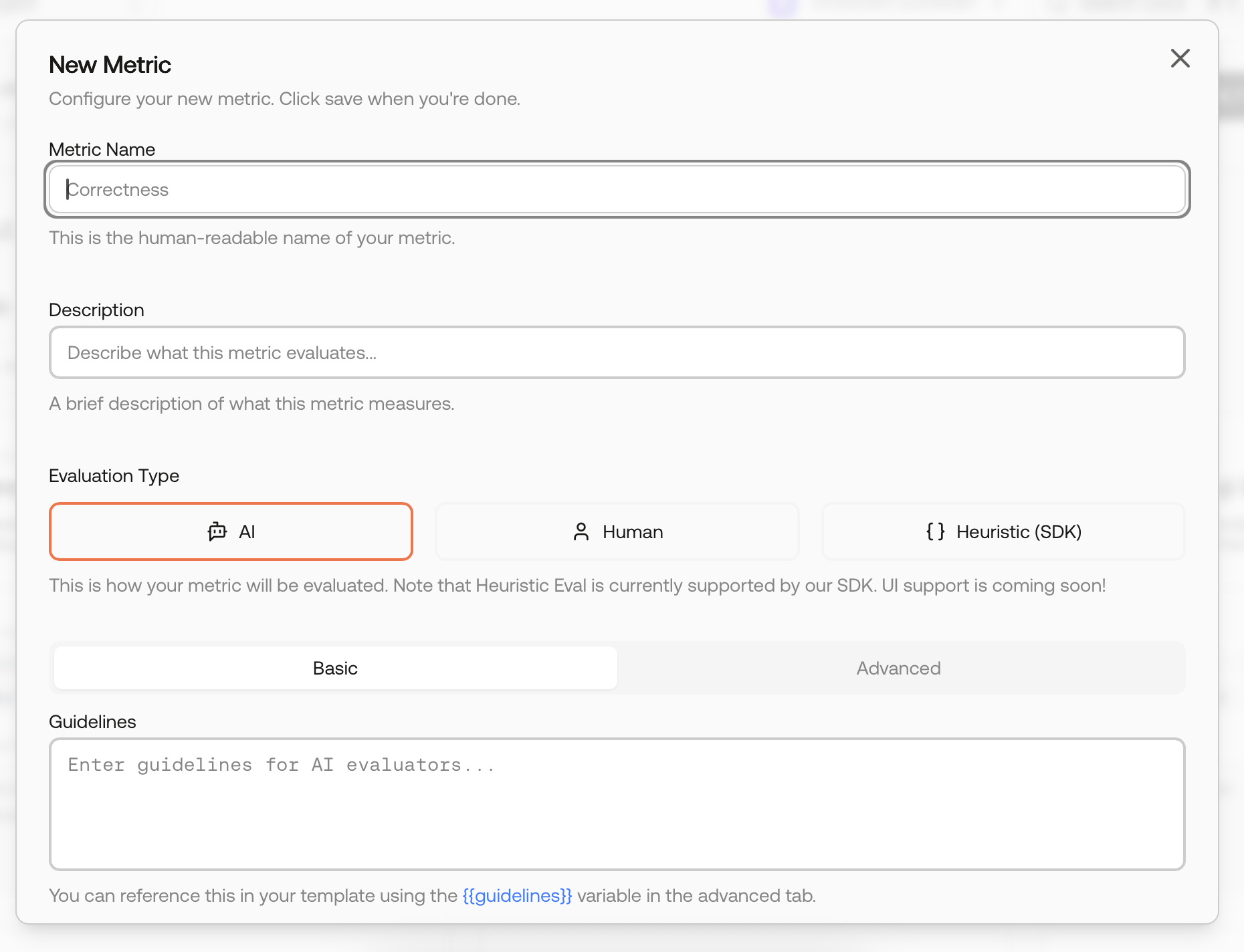

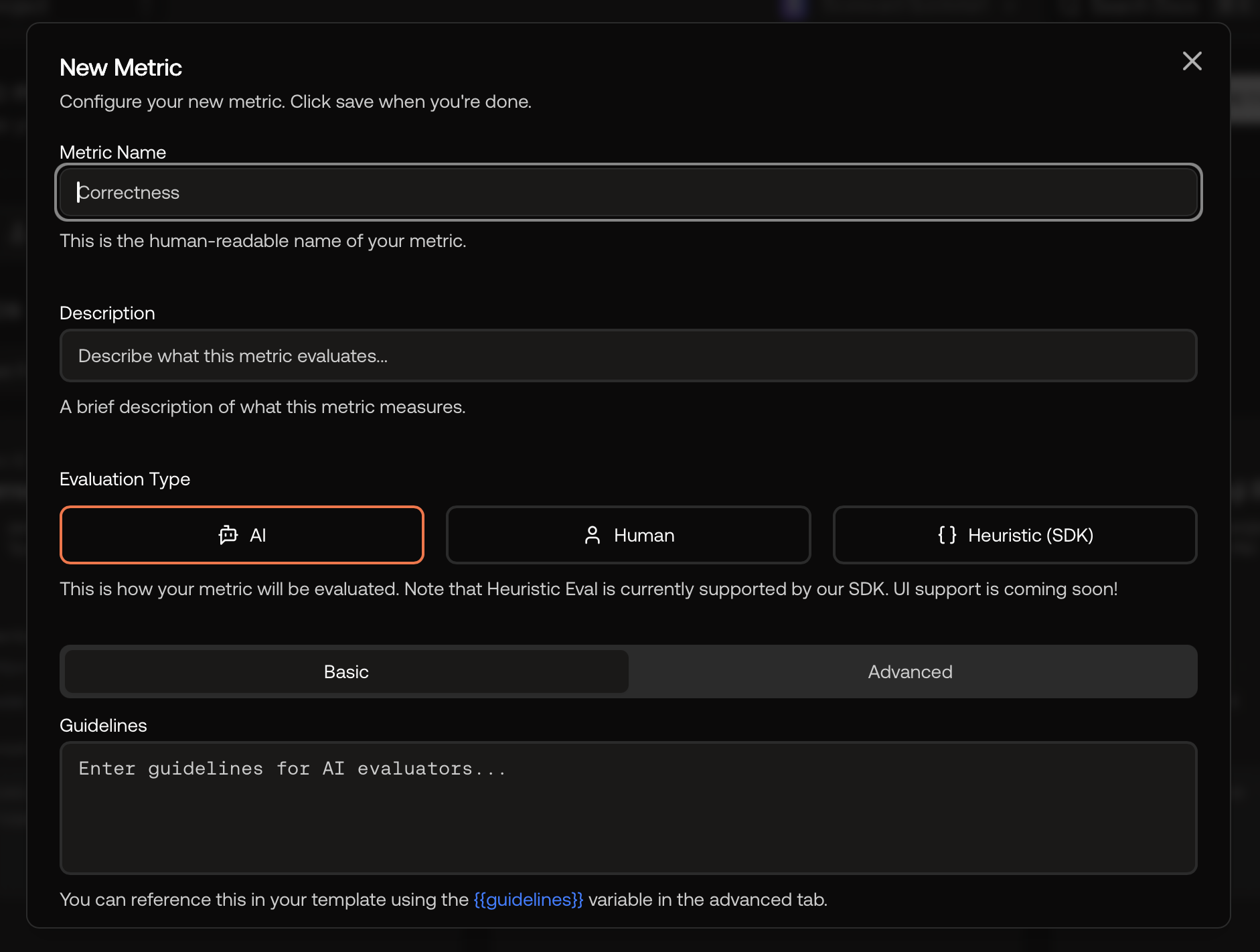

Define Custom Metrics for Your LLM Use Case

Adding a New Custom Metric in Scoring Lab

- Metric Name: a human-readable name of your metric.

- Metric Guidelines: natural-language instructions that define how the metric should be computed.

- Evaluation Type: how your metric will be evaluated.
  - AI: uses the Metric Guidelines as a prompt and computes the metric with an AI model.
  - Human: a human subject-matter expert manually evaluates the metric.
  - Heuristic: custom evaluation logic written in Python or TypeScript that runs in a secure sandbox. See Heuristic Metrics below.

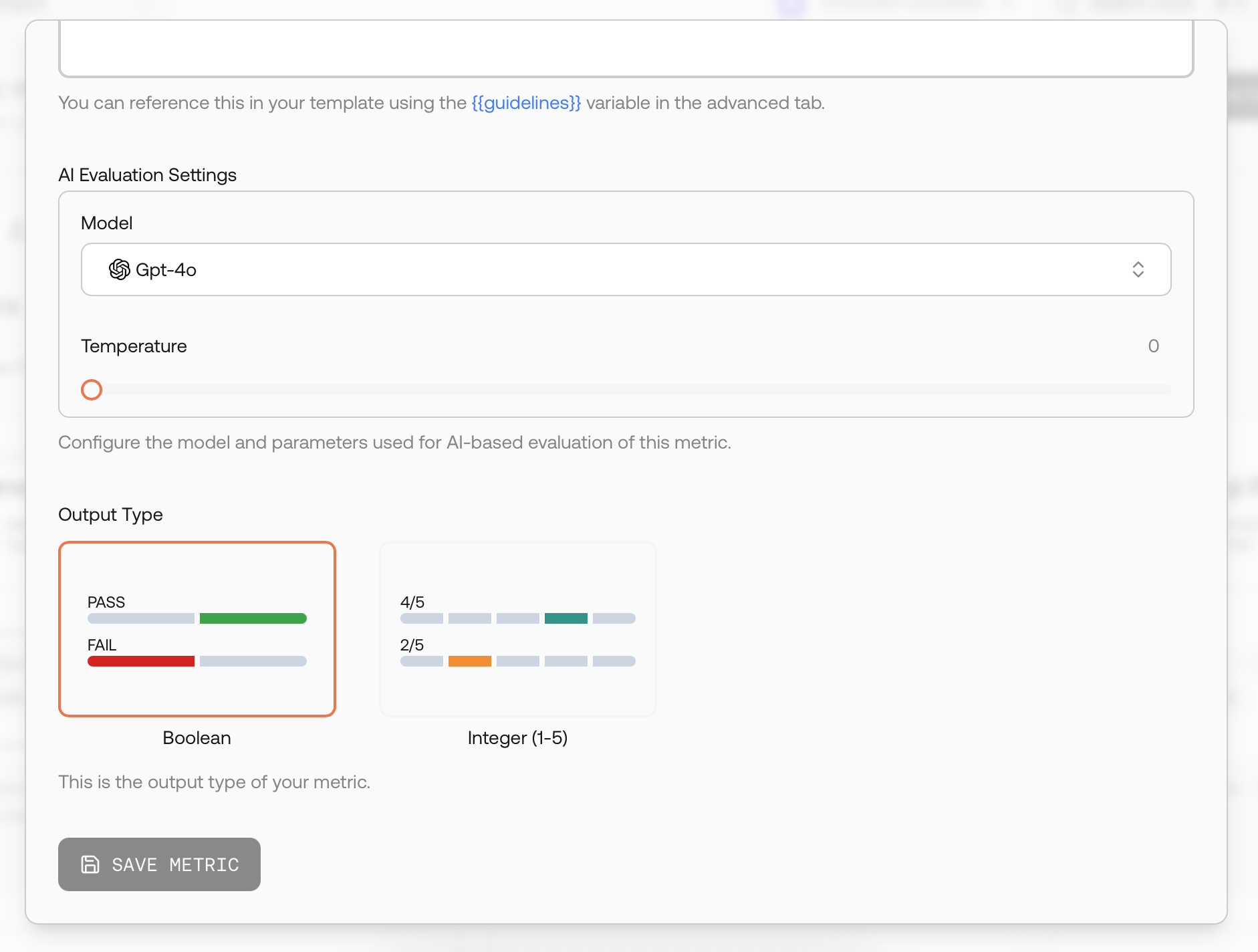

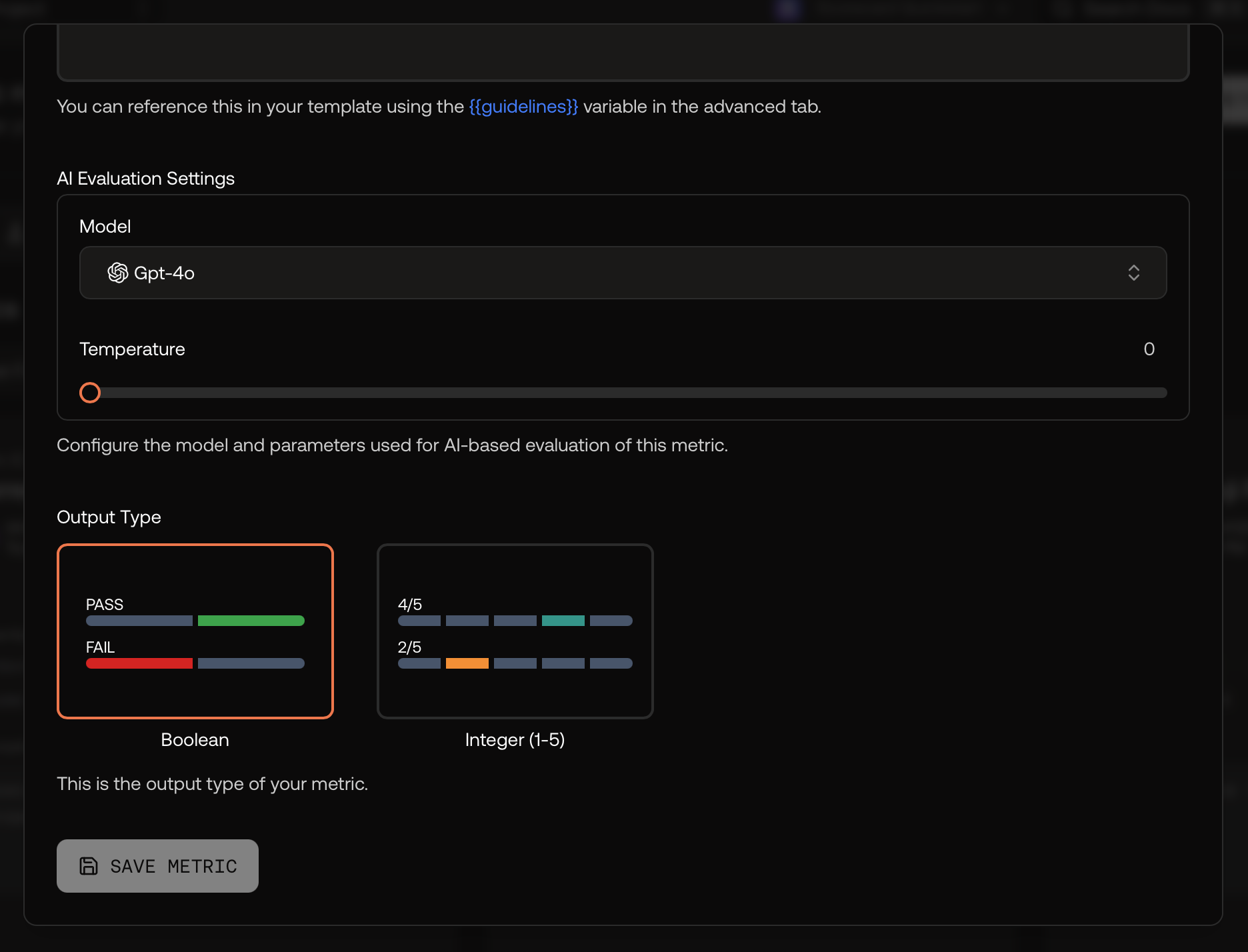

- Output Type: the output type of your metric.
  - Boolean
  - Integer (1–5)
  - Float (0.0–1.0)

New Custom Metric
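The fields of a custom metric can be modeled as a small record type. The following is a hypothetical sketch for illustration only: the class, enum, and field names are assumptions, not Scorecard’s API. It shows how the output type constrains which scores are valid.

```python
from dataclasses import dataclass
from enum import Enum


class EvaluationType(Enum):
    AI = "ai"
    HUMAN = "human"
    HEURISTIC = "heuristic"


class OutputType(Enum):
    BOOLEAN = "boolean"
    INTEGER = "integer"  # 1-5
    FLOAT = "float"      # 0.0-1.0


@dataclass
class MetricDefinition:  # hypothetical, not Scorecard's data model
    name: str
    guidelines: str
    evaluation_type: EvaluationType
    output_type: OutputType

    def validate_score(self, score) -> bool:
        """Check that a score fits the metric's declared output type."""
        if self.output_type is OutputType.BOOLEAN:
            return isinstance(score, bool)
        if isinstance(score, bool):  # bool is a subclass of int; reject it otherwise
            return False
        if self.output_type is OutputType.INTEGER:
            return isinstance(score, int) and 1 <= score <= 5
        return isinstance(score, (int, float)) and 0.0 <= score <= 1.0


helpfulness = MetricDefinition(
    name="Helpfulness",
    guidelines="Rate 1-5 how well the response resolves the user's request.",
    evaluation_type=EvaluationType.AI,
    output_type=OutputType.INTEGER,
)
```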

Output Types

Boolean Output Type

- Range: true/false.

- Pass/Fail: `binaryScore` directly represents pass/fail.

- Good for: format checks, refusals, policy/guardrails, presence/absence.

- Aggregation: pass ratio (passes over total) and counts across runs and Metric Groups.
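The Boolean aggregation described above can be sketched as follows. This is a minimal sketch, assuming each record’s `binaryScore` has been collected into a list; the function name is hypothetical.

```python
from typing import Iterable


def summarize_boolean(scores: Iterable[bool]) -> dict:
    """Count passes and fails and compute the pass ratio for a Boolean metric."""
    values = list(scores)
    passed = sum(1 for s in values if s)
    total = len(values)
    return {
        "passed": passed,
        "failed": total - passed,
        "total": total,
        "pass_ratio": passed / total if total else 0.0,  # avoid dividing by zero
    }


print(summarize_boolean([True, False, True, True]))
```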

Integer Output Type

- Range: 1–5 (coarse ordinal scale where higher is better).

- Pass/Fail Threshold: Configure a `passingThreshold` (e.g., 4). A record passes if `intScore ≥ passingThreshold`.

- Good for: rubric-based quality judgments (helpfulness, factuality, completeness) with simple guidance for SMEs.

- Aggregation: Means and distributions across runs/testsets and within Metric Groups.
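The pass rule and aggregations for Integer metrics can be sketched as follows. This is a minimal sketch, assuming a list of `intScore` values and a `passingThreshold` of 4 as in the example above; the function name is hypothetical.

```python
from collections import Counter
from statistics import mean


def summarize_integer(scores: list[int], passing_threshold: int = 4) -> dict:
    """Mean, 1-5 distribution, and pass ratio (intScore >= passingThreshold)."""
    for s in scores:
        if not 1 <= s <= 5:
            raise ValueError(f"integer scores must be in 1-5, got {s}")
    counts = Counter(scores)
    passed = sum(1 for s in scores if s >= passing_threshold)
    return {
        "mean": mean(scores) if scores else None,
        "distribution": {v: counts[v] for v in range(1, 6)},  # includes zero counts
        "pass_ratio": passed / len(scores) if scores else 0.0,
    }


print(summarize_integer([5, 4, 3, 4, 2]))
```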

Float Output Type

Float metrics produce a normalized score between 0.0 and 1.0 and are useful when you want a graded, continuous measure instead of a binary or 1–5 integer rating.

- Range: 0.0–1.0, where higher is better by convention.

- Pass/Fail Threshold: Configure a `passingThreshold` (e.g., 0.90). A record passes if `floatScore ≥ passingThreshold`.

- Good for: semantic similarity, confidence/uncertainty, coverage, or quality scores you expect to average across many samples.

- Aggregation: Scorecard aggregates float metrics via means across runs/testsets and within Metric Groups, enabling trend tracking over time.
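Tracking a Float metric over time amounts to computing one mean (and pass ratio) per run. The following is a minimal sketch, assuming the `floatScore` values are grouped by a run identifier and using the example threshold of 0.90. The run IDs and the function name are hypothetical.

```python
from statistics import mean


def run_trend(runs: dict[str, list[float]], passing_threshold: float = 0.90) -> list[tuple[str, float, float]]:
    """Return (run_id, mean score, pass ratio) per run, in insertion order."""
    trend = []
    for run_id, scores in runs.items():
        if any(not 0.0 <= s <= 1.0 for s in scores):
            raise ValueError(f"float scores must be in [0.0, 1.0] (run {run_id})")
        avg = mean(scores) if scores else 0.0
        pass_ratio = sum(s >= passing_threshold for s in scores) / len(scores) if scores else 0.0
        trend.append((run_id, avg, pass_ratio))
    return trend


for run_id, avg, ratio in run_trend({"run-1": [0.8, 0.95, 0.9], "run-2": [0.92, 0.97, 0.99]}):
    print(run_id, round(avg, 3), round(ratio, 2))
```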

Automated Scoring

Increasing Complexity When Manually Evaluating Large Testsets

When introducing a framework to evaluate a Large Language Model (LLM), teams typically start small by manually evaluating a few Testcases. These initial Testcases form the first Testset, and subject-matter experts (SMEs) evaluate the LLM outputs for them. As the evaluation process iterates, the number of Testcases grows, allowing testing across more dimensions with different metrics. However, the time required to score the metrics grows proportionally with the number of Testcases. Because SME time is limited, evaluating large Testsets can quickly become a bottleneck that slows down the entire LLM evaluation process.

AI-Supported Scoring With Scorecard

Scorecard addresses this problem by delegating evaluation to an AI model. AI-powered scoring saves valuable time and lets SMEs focus on complex edge cases instead of well-defined, easy-to-score metrics. This streamlines the LLM evaluation process, enabling teams to iterate and deliver faster.

Define Metrics to Score With AI

When defining a new metric to be used for your LLM evaluation, you can specify the evaluation type. Choose the evaluation type “AI” to have a metric automatically scored by an AI model.

New Metric With AI-Scoring

Basic and Advanced AI Metric Configuration

The AI metric creation flow offers two modes:

- Basic Mode: In this mode, you define the metric guidelines using natural-language instructions. Describe precisely how the AI model should score the metric.

- Advanced Mode: For more control, you can access the advanced mode to modify the full prompt template beyond just the guidelines, allowing for more sophisticated evaluation logic.
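Conceptually, the guidelines are one slot inside a larger judge prompt. Basic Mode edits only that slot, while Advanced Mode exposes the surrounding template. The following is a hypothetical sketch: the template text and the placeholder names `$guidelines`, `$input`, and `$output` are assumptions, not Scorecard’s actual prompt.

```python
from string import Template

# Hypothetical judge prompt; Scorecard's real template and variables may differ.
JUDGE_TEMPLATE = Template(
    "You are grading an LLM response.\n"
    "Guidelines:\n$guidelines\n\n"
    "User input:\n$input\n\n"
    "Model response:\n$output\n\n"
    'Return a JSON object with keys "score" and "reasoning".'
)


def render_judge_prompt(guidelines: str, user_input: str, model_output: str) -> str:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return JUDGE_TEMPLATE.substitute(
        guidelines=guidelines, input=user_input, output=model_output
    )


print(render_judge_prompt("Score 1-5 for politeness.", "Hi there", "Hello! How can I help?"))
```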

AI Evaluation Settings

In the AI Evaluation Settings section of the metric creation or editing modal, you can configure the model and parameters used for AI-based evaluation. This gives you fine-grained control over how your metrics are evaluated:

- Model Selection: Choose from the available models (such as GPT-4o) to perform the evaluation.

- Temperature: Control the randomness of the AI evaluation (typically set to 0 for consistent scoring).

- Additional Parameters: Configure other model-specific settings as needed.

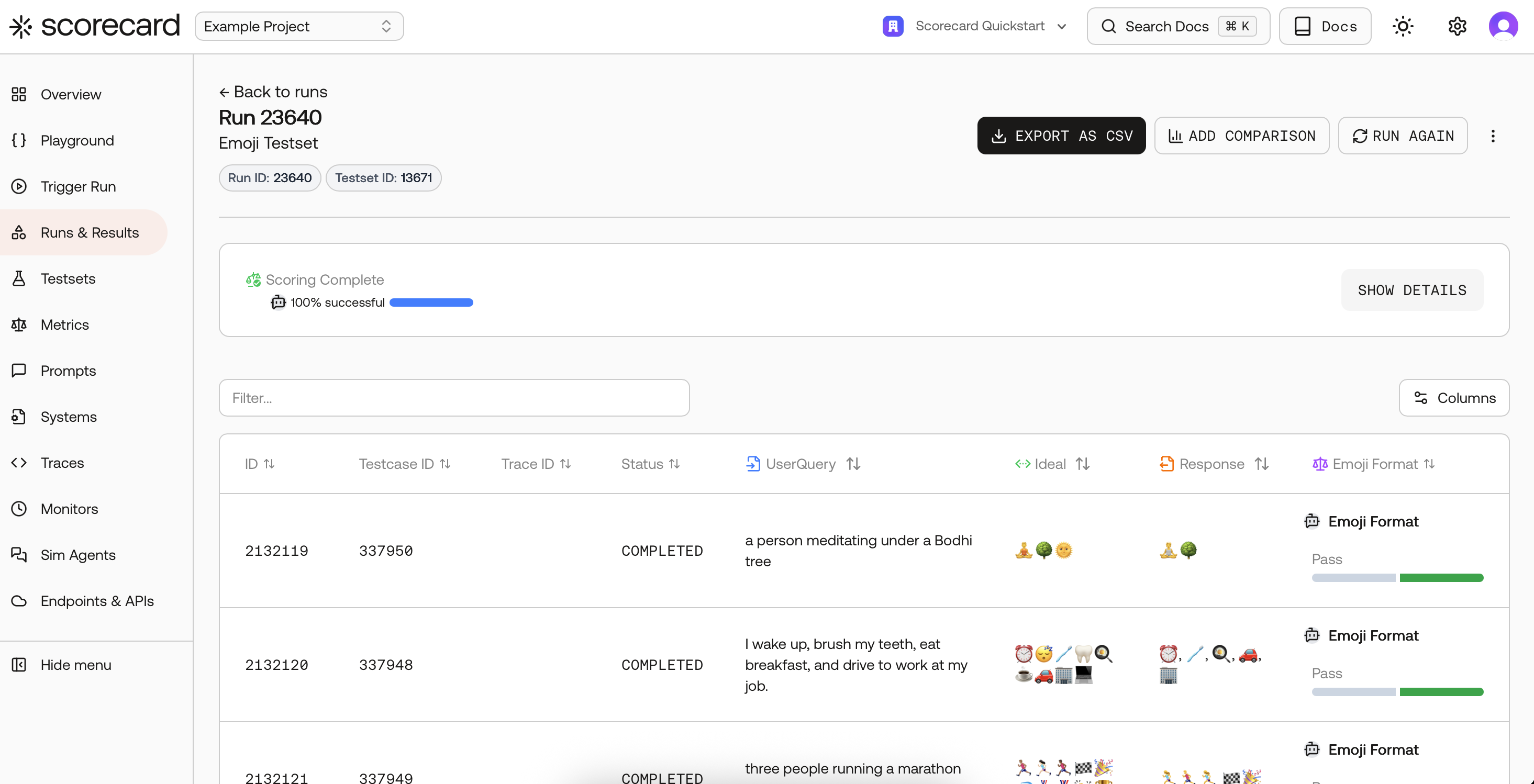

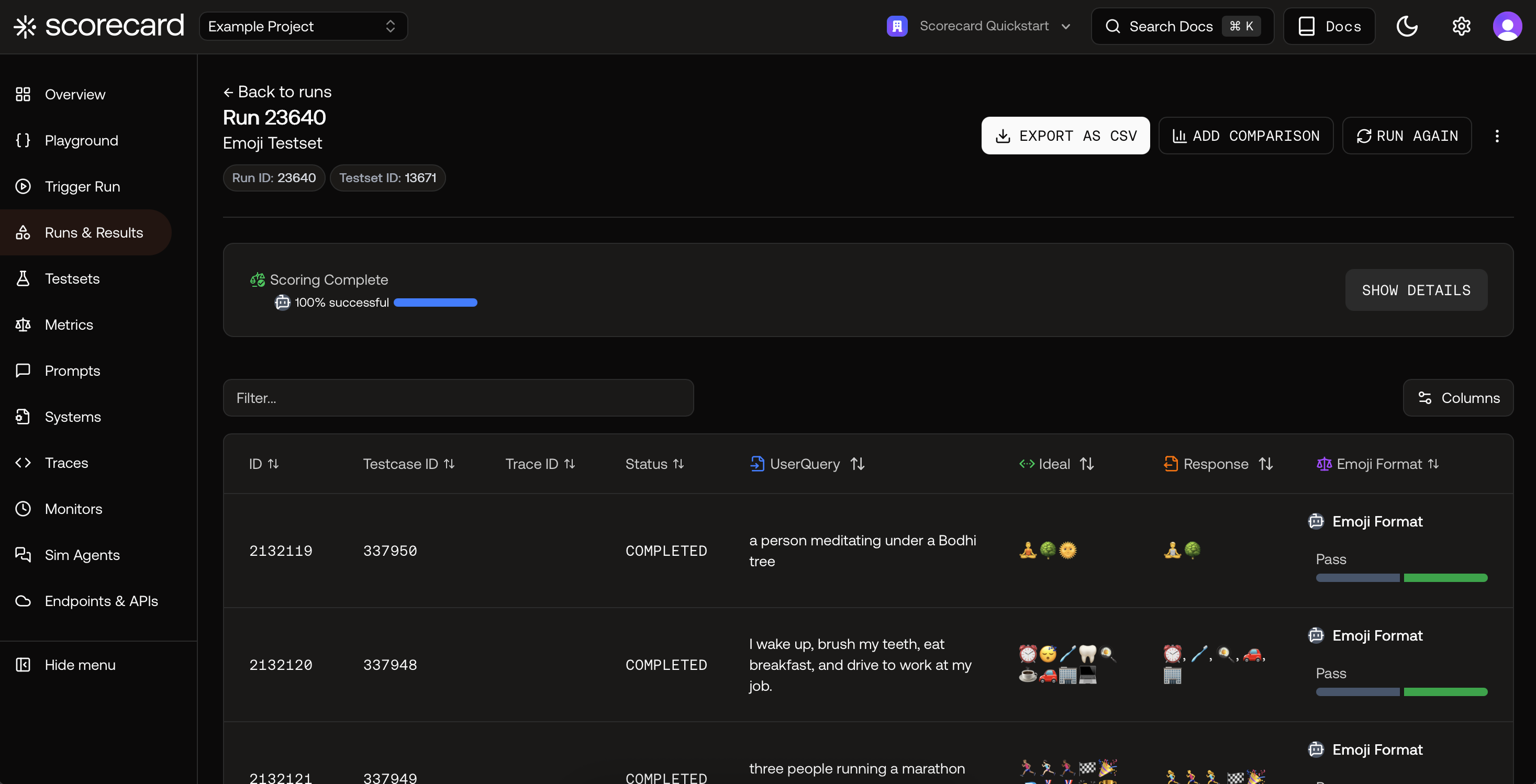

Score Your Testset With a Single Click

After you have run a Testset against your LLM and the model has generated responses for each Testcase, the Scorecard UI displays the status of “Awaiting Scoring”.

Run Scoring in the Scorecard UI

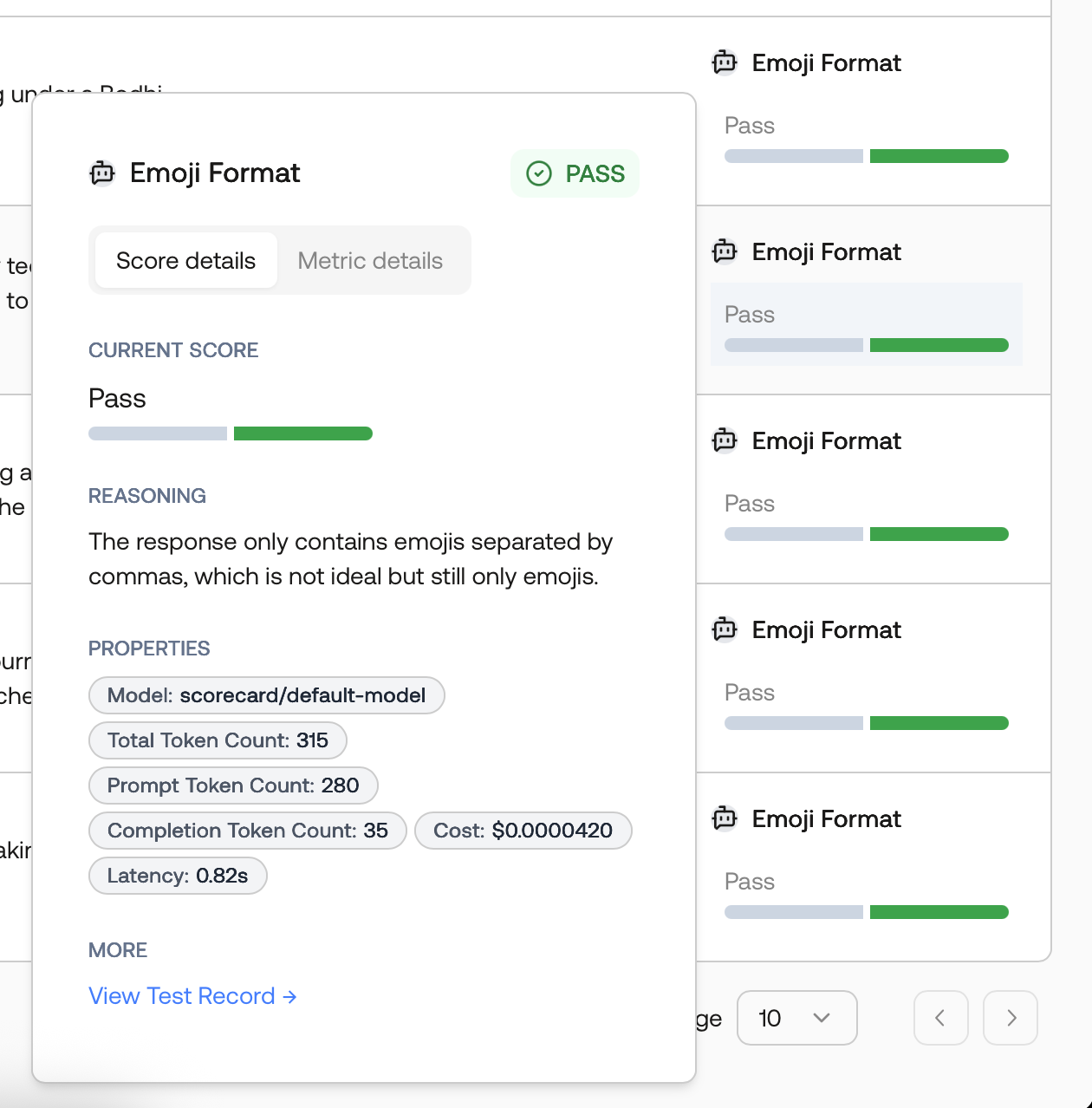

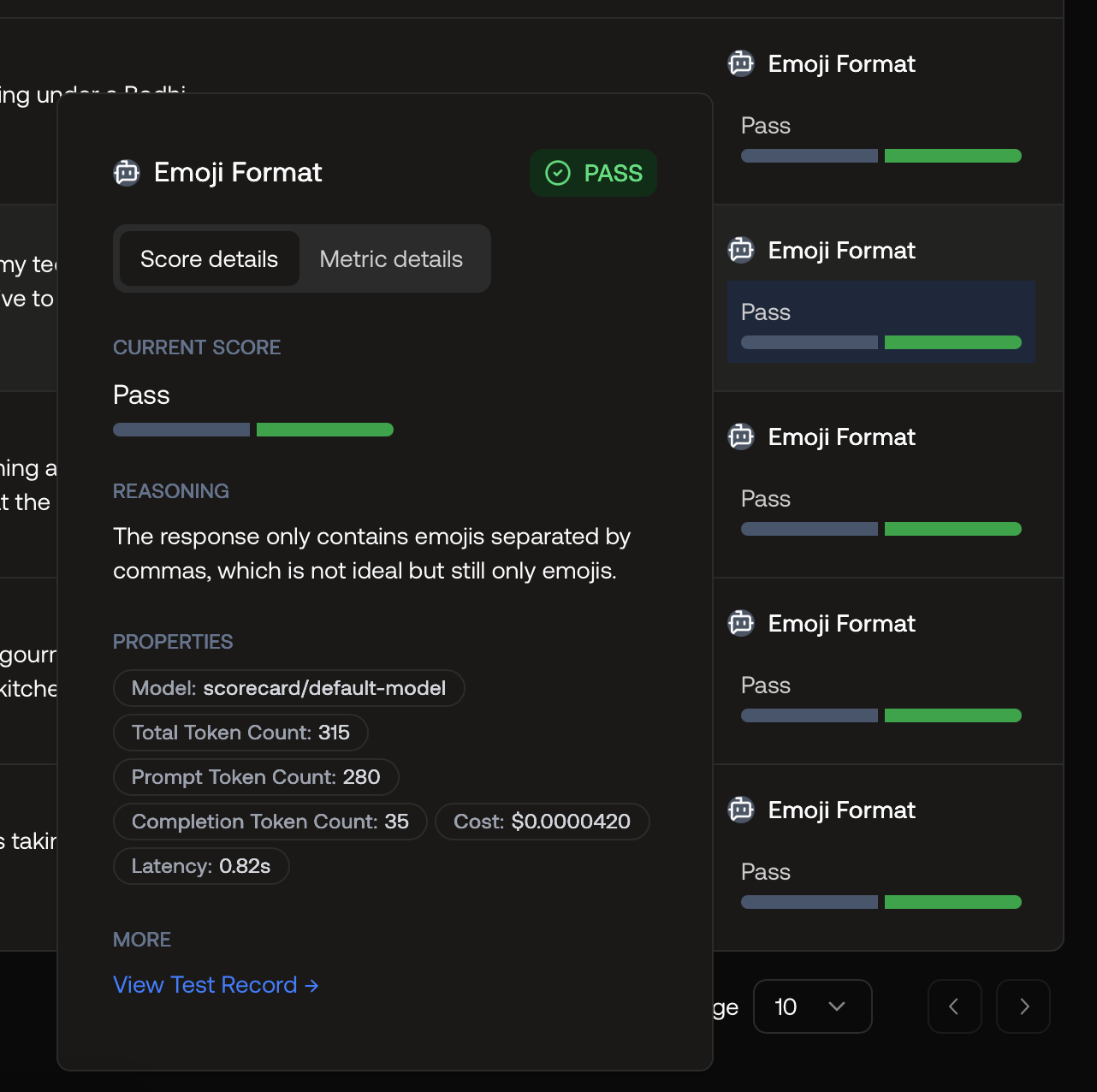

Score Explanations

Scorecard not only automates scoring but also explains why the AI model scored each metric the way it did. These explanations help you understand the reasoning behind the AI scores so you can judge whether the evaluations make sense.

Score Explained by the Scorecard AI Model

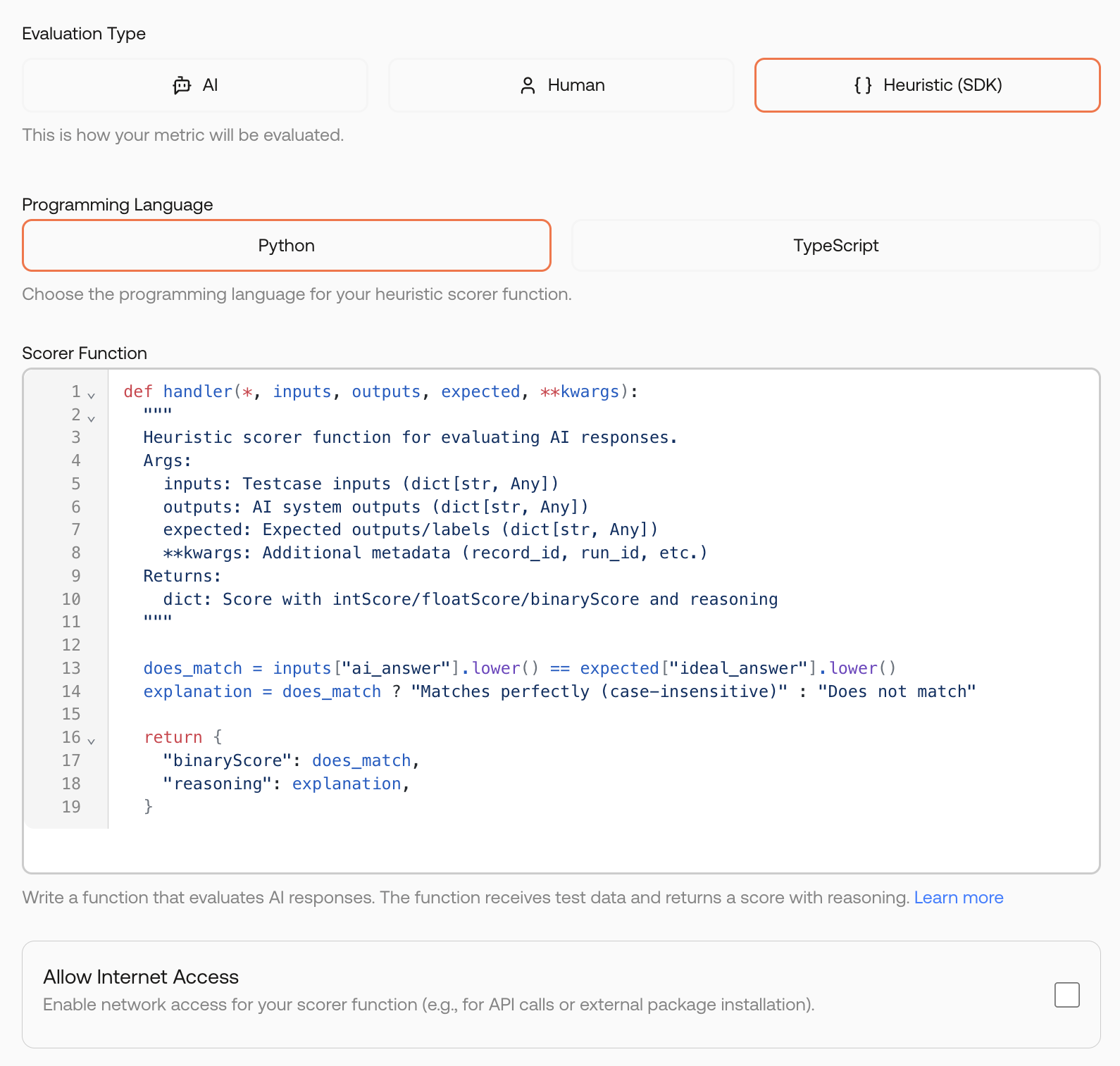

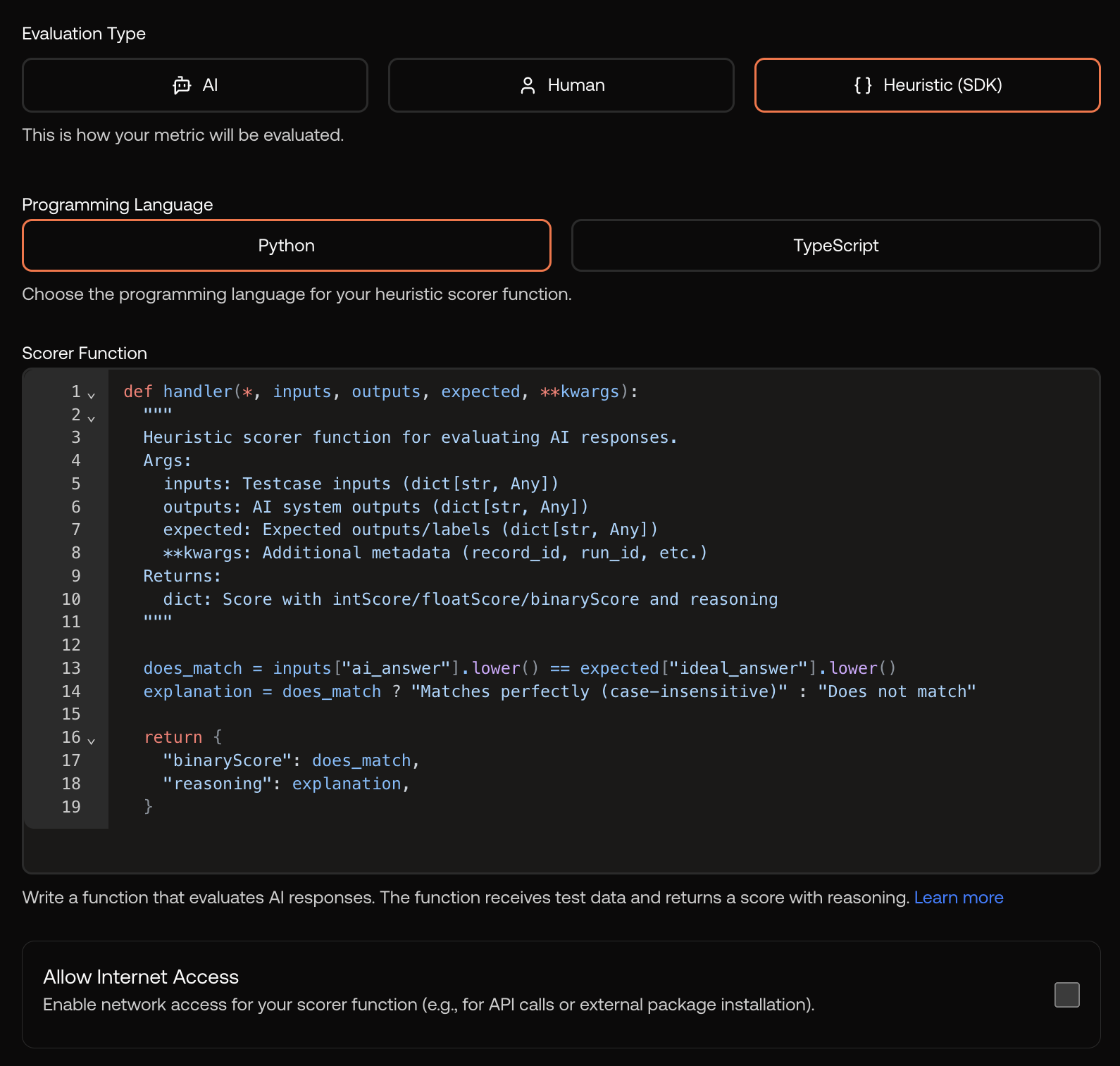
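Score explanations require the judge model to return its reasoning alongside the score. The following is a hypothetical sketch of parsing such a reply for an Integer (1–5) metric. It assumes the judge was asked for JSON with `score` and `reasoning` keys; Scorecard’s actual response format is not documented here.

```python
import json
from dataclasses import dataclass


@dataclass
class JudgedScore:
    score: int
    explanation: str


def parse_judge_response(raw: str) -> JudgedScore:
    """Parse a reply of the assumed form {"score": 4, "reasoning": "..."}."""
    data = json.loads(raw)
    score = data["score"]
    # Reject booleans, non-integers, and out-of-range values.
    if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 5:
        raise ValueError(f"expected an integer score from 1 to 5, got {score!r}")
    return JudgedScore(score=score, explanation=str(data.get("reasoning", "")))


result = parse_judge_response('{"score": 4, "reasoning": "Accurate but omits one step."}')
print(result.score, "-", result.explanation)
```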

Heuristic Metrics

Heuristic metrics allow you to write custom evaluation logic using Python or TypeScript code. This is useful when you need deterministic, rule-based scoring that doesn’t require an AI model, such as:

- Checking for specific keywords or patterns

- Validating JSON structure or format

- Computing text similarity scores

- Measuring response length or complexity

- Custom business logic checks
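Checks like these are usually short, deterministic functions. The sketch below uses only the Python standard library. The exact set of libraries available in the sandbox is not listed here, so treat their availability as an assumption. The function names are illustrative.

```python
import json
import re
from difflib import SequenceMatcher


def contains_keywords(text: str, keywords: list[str]) -> bool:
    """True if every keyword appears as a whole word (case-insensitive)."""
    return all(re.search(rf"\b{re.escape(k)}\b", text, re.IGNORECASE) for k in keywords)


def is_valid_json_object(text: str, required_keys: set[str]) -> bool:
    """True if text parses as a JSON object containing all required keys."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and required_keys.issubset(data)


def text_similarity(a: str, b: str) -> float:
    """Character-level similarity ratio in [0.0, 1.0] via difflib."""
    return SequenceMatcher(None, a, b).ratio()


def within_length(text: str, max_words: int) -> bool:
    """True if the response has at most max_words whitespace-separated words."""
    return len(text.split()) <= max_words
```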

Creating a Heuristic Metric

When creating a new metric, select Heuristic as the evaluation type. You’ll see a code editor where you can write your evaluation logic.

Creating a heuristic metric with Python code

Writing Heuristic Code

Your code receives the record data and must return a score. You can write this code in either Python or TypeScript.
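The shape of a heuristic can be sketched as a function that takes one record and returns a score. This is a hypothetical sketch only: the entry-point name `evaluate`, the `output` field, and the returned dictionary are assumptions, not Scorecard’s documented interface.

```python
import json


# Hypothetical entry point: Scorecard's real function name, record fields,
# and return shape may differ.
def evaluate(record: dict) -> dict:
    """Boolean heuristic: pass if the model output is a JSON object with an "answer" key."""
    output = record.get("output", "")
    try:
        parsed = json.loads(output)
    except (json.JSONDecodeError, TypeError):
        return {"score": False, "reasoning": "Output is not valid JSON."}
    if not isinstance(parsed, dict) or "answer" not in parsed:
        return {"score": False, "reasoning": 'JSON is missing the "answer" key.'}
    return {"score": True, "reasoning": "Output is valid JSON with an answer."}


print(evaluate({"output": '{"answer": "42"}'}))
```

Returning a short reason alongside the score is optional in this sketch, but it makes failed records easier to inspect.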

Secure Sandbox Execution

Heuristic code runs in a secure, isolated sandbox environment. This ensures:

- Safety: Code cannot access external resources or affect other users.

- Consistency: The same code produces the same results across runs.

- Performance: Execution is optimized for evaluation workloads.

The sandbox has limited access to external libraries. Common utilities like string manipulation and JSON parsing are available. Contact support if you need specific libraries for your use case.

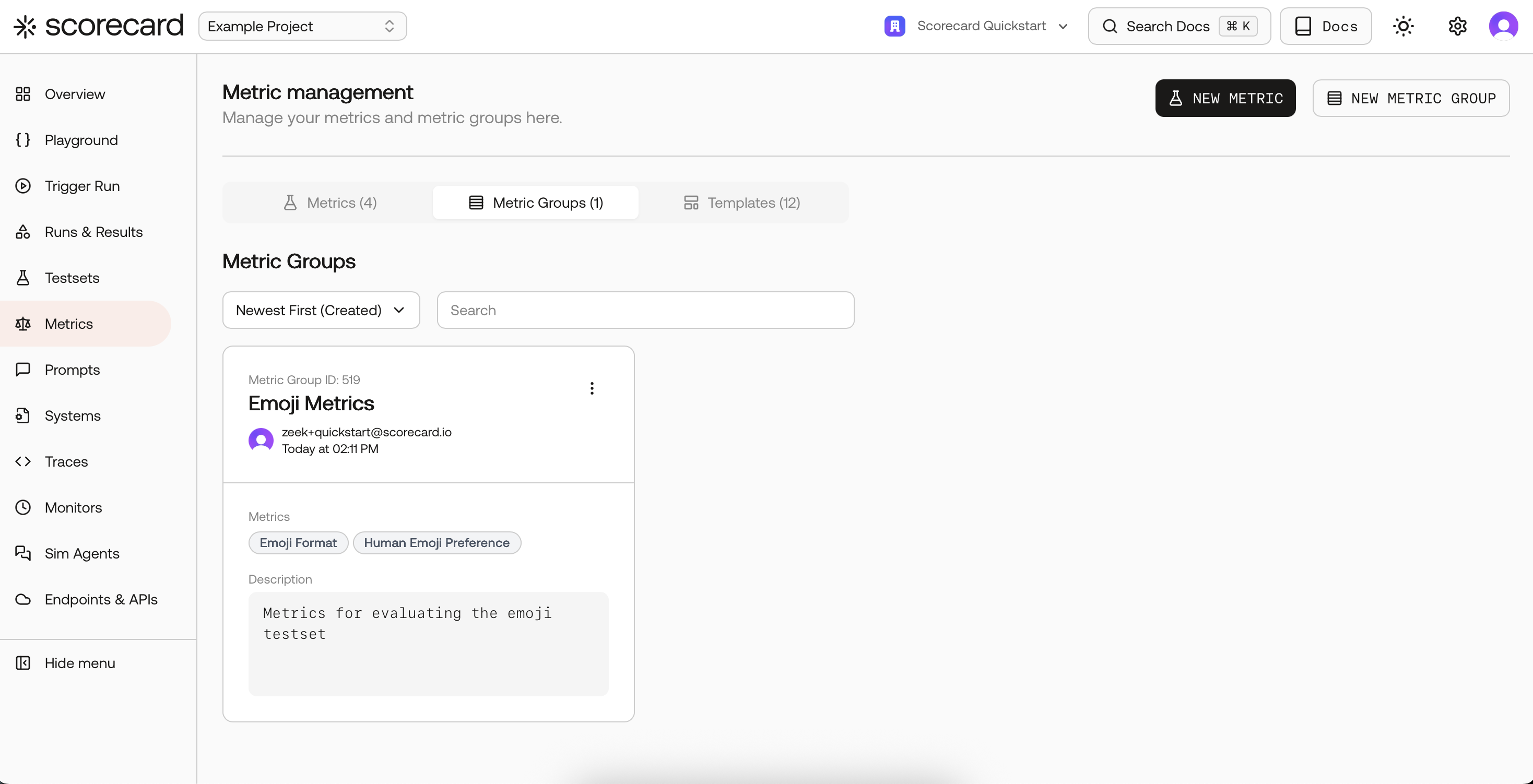

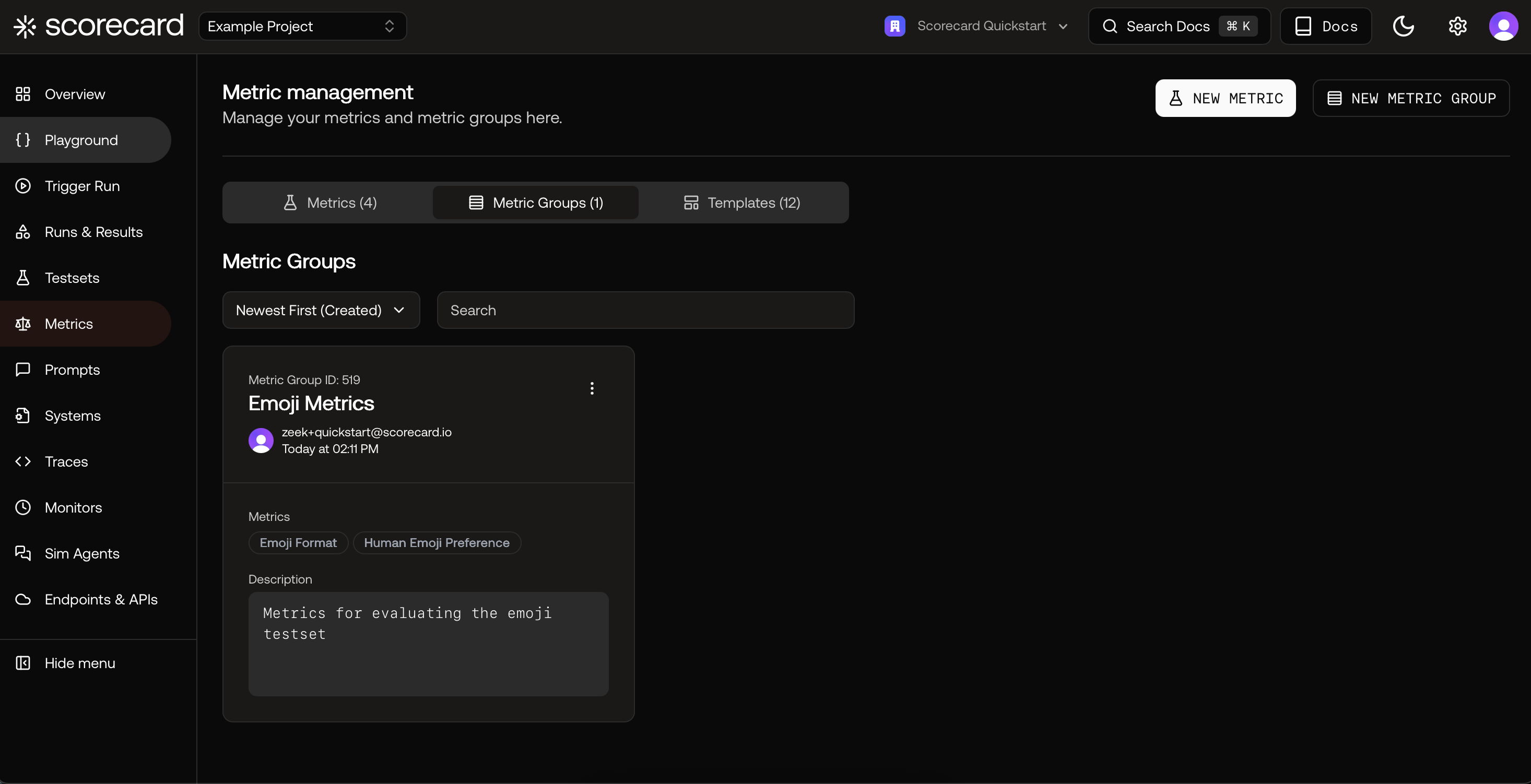

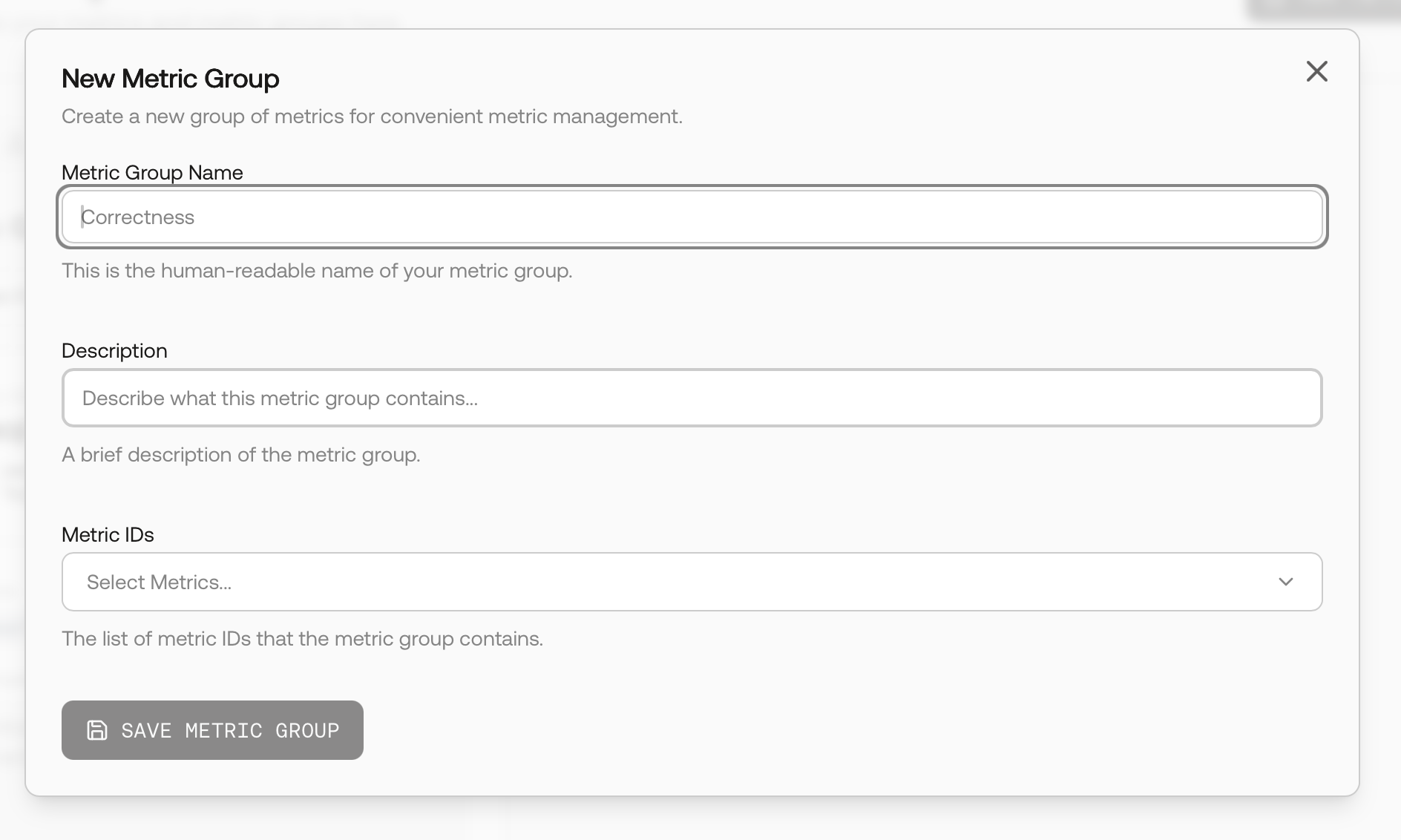

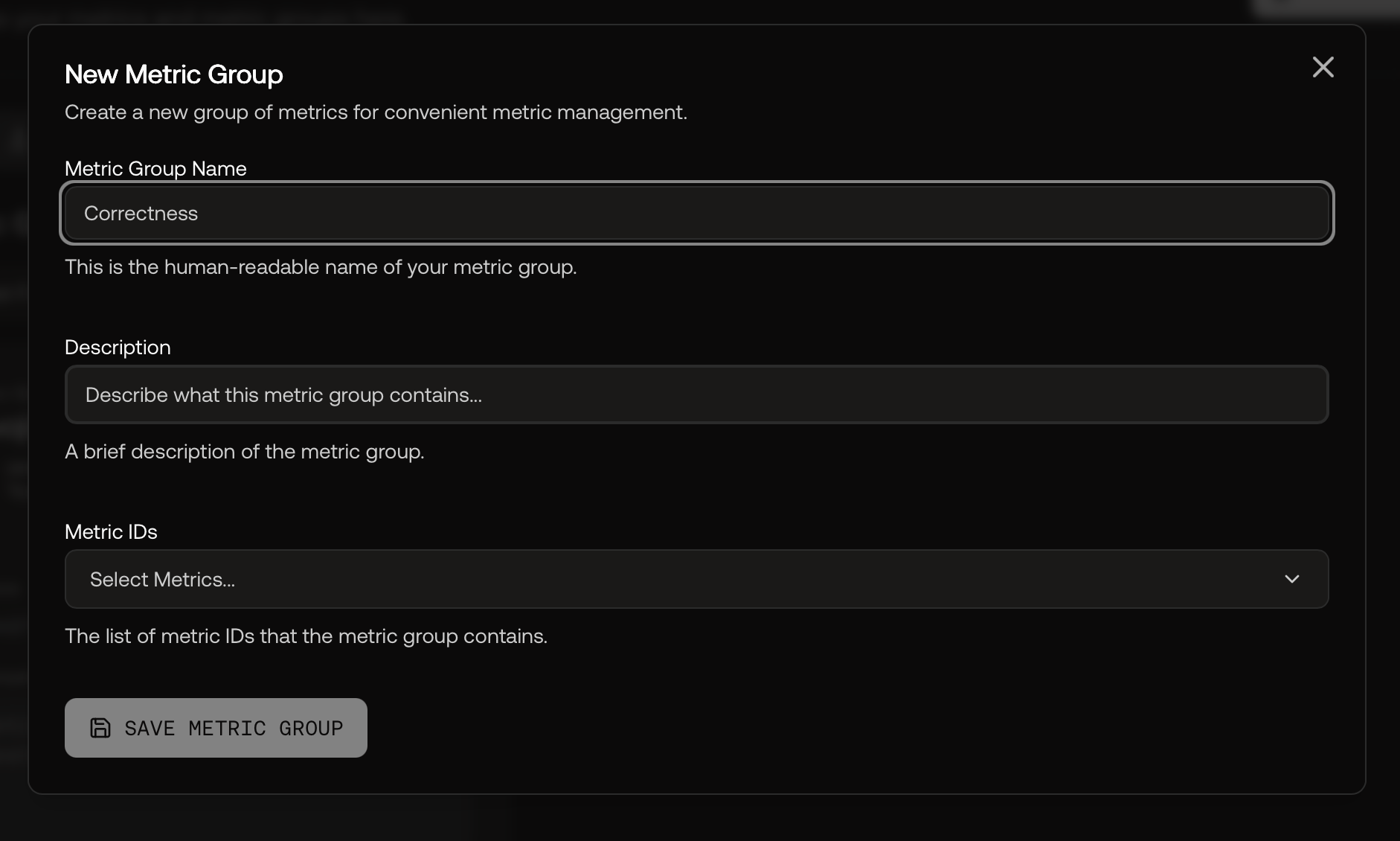

Metric Groups

Once you have defined all the metrics you need to assess the quality of your LLM application, you can group them into a Metric Group. A Scorecard Metric Group is a collection of metrics used together to score an LLM application. You can run a Metric Group routinely against your LLM application to get a consistent measure of the performance and quality of its responses. Instead of manually selecting the same metrics every time you score a particular use case, you define the set once and reuse it to score consistently. Different Metric Groups can serve different purposes, e.g., one Metric Group for RAG applications and another for translation use cases.

Overview of Metric Groups in Scoring Lab

- Name: a human-readable name of your Metric Group.

- Description: a short description of your Metric Group.

Defining a New Metric Group
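A Metric Group can be thought of as a named bundle of metrics that are all applied to the same records. The following is a hypothetical sketch, not Scorecard’s API. It uses two simple stand-in heuristic metrics in place of AI, human, or sandboxed heuristic metrics.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical types for illustration; not Scorecard's data model.
Record = dict[str, str]
MetricFn = Callable[[Record], float]


@dataclass
class MetricGroup:
    name: str
    description: str
    metrics: dict[str, MetricFn] = field(default_factory=dict)

    def score(self, records: list[Record]) -> dict[str, list[float]]:
        """Apply every metric in the group to every record, keyed by metric name."""
        return {name: [fn(r) for r in records] for name, fn in self.metrics.items()}


# Example group with two stand-in metrics.
rag_group = MetricGroup(
    name="RAG checks",
    description="Basic sanity checks for a RAG assistant.",
    metrics={
        "non_empty": lambda r: float(bool(r["output"].strip())),
        "under_50_words": lambda r: float(len(r["output"].split()) <= 50),
    },
)

results = rag_group.score([{"output": "Paris is the capital of France."}, {"output": "   "}])
print(results)
```

Defining the group once and calling `score` on each new Testset keeps the metric set identical across runs, which is the consistency benefit described above.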