Why use GitHub Actions?

GitHub Actions is a flexible automation platform for software development workflows. With Scorecard’s GitHub Actions integration, you can test your code automatically on every PR, on a schedule, or manually with various configurations.

- Automated Testing: Run end-to-end tests on every push or pull request, or on a schedule.

- Instant Actionable Feedback: Catch issues before they reach production by getting direct, actionable feedback on your PRs.

- Continuous Monitoring: Monitor application behavior and check that it matches expected outcomes.

Set up the GitHub integration

1. Set up the workflow

Follow the steps on your Scorecard organization’s GitHub configuration page.

- Install the GitHub app.

- Choose which repository and branch to use.

- Configure when test runs should be triggered. For example:

  - Nightly, or

  - When a user kicks off a test run from the Scorecard UI, or

  - When a pull request is opened, or

  - When a pull request is merged.

- Add your Scorecard API key as a GitHub secret named SCORECARD_API_KEY.

- Click “Create pull request” to create a PR in your repository that sets up the Scorecard workflow.

2. Customize the workflow

When you click “Create pull request”, a PR will be created in your repository with the following files:

- scorecard-eval.yml

- run_tests.py

- requirements.txt

.github/workflows/scorecard-eval.yml is the GitHub Actions workflow file that runs the tests. You can update your testing parameters and trigger conditions there. For example, use environment variables to supply any API keys your system needs.
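As a rough illustration of what such customizations can look like, here is a minimal sketch of a workflow file using standard GitHub Actions syntax. It is not the file Scorecard generates, which may differ. Only the file names and the SCORECARD_API_KEY secret name come from this guide. The job name, the target branch (main), the Python version, the cron time, the location of run_tests.py and requirements.txt at the repository root, and the extra MY_SERVICE_API_KEY secret are all assumptions made for the example.

```yaml
# Hypothetical sketch of .github/workflows/scorecard-eval.yml.
# The workflow generated by Scorecard may look different.
name: Scorecard eval

on:
  pull_request:
    types: [opened]        # run when a pull request is opened
  push:
    branches: [main]       # a merged PR arrives as a push to the target branch (assumed: main)
  schedule:
    - cron: "0 3 * * *"    # nightly at 03:00 UTC (time chosen arbitrarily)
  workflow_dispatch:       # allow manual runs from the GitHub Actions tab

jobs:
  scorecard-eval:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"   # assumed version
      # Assumes requirements.txt and run_tests.py sit at the repository root
      - run: pip install -r requirements.txt
      - run: python run_tests.py
        env:
          # Secret name from step 1 of this guide
          SCORECARD_API_KEY: ${{ secrets.SCORECARD_API_KEY }}
          # Hypothetical extra key needed by the system under test
          MY_SERVICE_API_KEY: ${{ secrets.MY_SERVICE_API_KEY }}
```

Passing keys through the env block keeps them out of the repository: the values live in the repository's GitHub secrets and are only exposed to the step that needs them.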

3. Trigger a run automatically

Depending on your configuration, every push or PR automatically triggers Scorecard tests.

4. Trigger a run manually

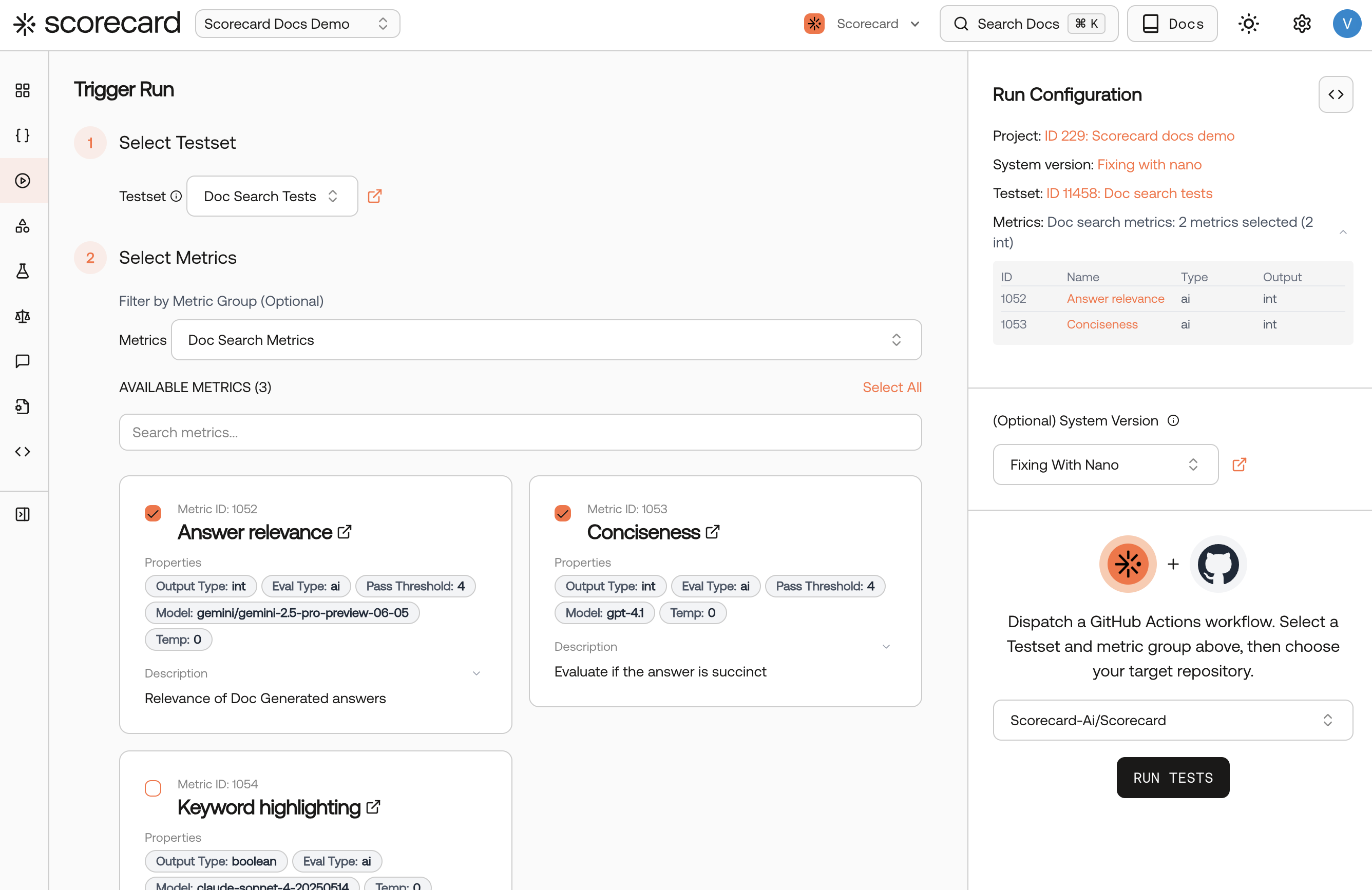

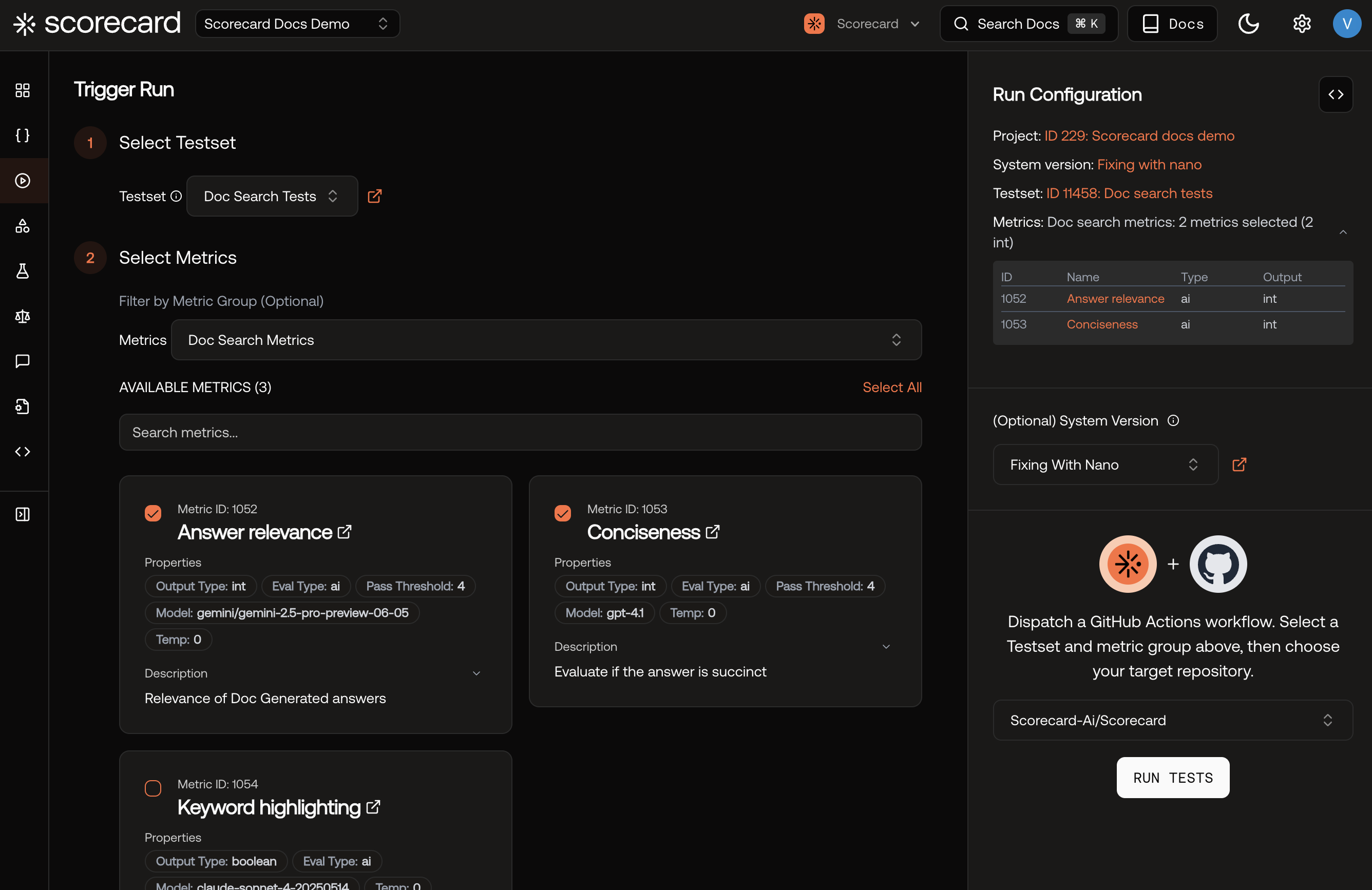

You can trigger a run manually from the Scorecard UI using the Trigger Run page. Just select a testset and some metrics, then click “Run tests”!