Looking for general tracing guidance? Check out the Tracing Quickstart for an overview of tracing concepts and alternative integration methods.

Steps

1

Install dependencies

Install the Traceloop SDK and the LangChain instrumentation package.
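After installing, a quick sanity check like the following can confirm that both packages are importable. This is only a sketch: the module names `traceloop.sdk` and `opentelemetry.instrumentation.langchain` are assumptions based on the published `traceloop-sdk` and `opentelemetry-instrumentation-langchain` packages, and the helper names are hypothetical.

```python
import importlib.util

# Assumed module names for the two packages; adjust if your versions differ.
REQUIRED_MODULES = [
    "traceloop.sdk",
    "opentelemetry.instrumentation.langchain",
]


def is_installed(module_name: str) -> bool:
    """Return True if Python can locate the given module."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ImportError:
        # Raised when a parent package (e.g. "traceloop") is missing.
        return False


def missing_modules(modules: list[str]) -> list[str]:
    """Return the subset of `modules` that cannot be located."""
    return [name for name in modules if not is_installed(name)]
```

Calling `missing_modules(REQUIRED_MODULES)` returns an empty list when everything needed is available, and otherwise names what still has to be installed.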

2

Set up environment variables

Configure the Traceloop SDK to send traces to Scorecard. Get your Scorecard API key from Settings.
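As a rough sketch, the same configuration can be applied from Python before the SDK is initialized. The variable names `TRACELOOP_BASE_URL` and `TRACELOOP_HEADERS` are the ones the Traceloop SDK reads. The `Bearer` header format, the endpoint placeholder, and the helper name `scorecard_env` are assumptions for illustration, so check them against your Scorecard settings.

```python
import os

# Assumption: fill in the tracing endpoint given in your Scorecard settings.
SCORECARD_ENDPOINT = "<your_scorecard_tracing_endpoint>"


def scorecard_env(api_key: str, endpoint: str) -> dict[str, str]:
    """Build the Traceloop environment variables for a Scorecard API key."""
    if not api_key.startswith("ak_"):
        raise ValueError("Scorecard API keys are expected to start with 'ak_'")
    return {
        "TRACELOOP_BASE_URL": endpoint,
        # Assumed header format; %20 URL-encodes the space after "Bearer".
        "TRACELOOP_HEADERS": f"Authorization=Bearer%20{api_key}",
    }
```

To apply it, call `os.environ.update(scorecard_env("<your_scorecard_api_key>", SCORECARD_ENDPOINT))` before initializing tracing.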

Replace `<your_scorecard_api_key>` with your actual Scorecard API key (starts with `ak_`).

3

Initialize tracing

Initialize the Traceloop SDK with LangChain instrumentation before importing LangChain modules.
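One way to respect this ordering is to wrap initialization in a small helper that warns if LangChain was already imported. This is a sketch only: `init_tracing` and `langchain_already_imported` are hypothetical names, while `Traceloop.init` with `app_name` and `disable_batch` is the SDK's own entry point.

```python
import sys
import warnings


def langchain_already_imported() -> bool:
    """Return True if any LangChain module is already loaded in this process."""
    return any(
        name == "langchain" or name.startswith(("langchain.", "langchain_"))
        for name in sys.modules
    )


def init_tracing(app_name: str = "langchain-app") -> None:
    """Initialize Traceloop; call this before importing LangChain modules."""
    if langchain_already_imported():
        warnings.warn("LangChain was imported before tracing was initialized")

    # Imported here so this file still loads when the SDK is not installed.
    from traceloop.sdk import Traceloop

    # disable_batch=True exports spans immediately instead of in batches,
    # which can be convenient for short-lived scripts.
    Traceloop.init(app_name=app_name, disable_batch=True)
```

Call `init_tracing()` at the top of your entry point, then import the LangChain modules you need.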

4

Run your LangChain application

With tracing initialized, run your LangChain application. All LLM calls, chain executions, and agent actions are automatically traced.

Here’s a full example:

example.py
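A minimal sketch of such a file is shown below. It assumes the `langchain-openai` package is installed, `OPENAI_API_KEY` is set, and the Traceloop environment variables from step 2 are in place. The model name and prompt are illustrative choices.

```python
def main() -> None:
    # Initialize tracing first, before any LangChain module is imported.
    from traceloop.sdk import Traceloop

    Traceloop.init(app_name="langchain-example", disable_batch=True)

    # LangChain imports come after Traceloop.init so they can be instrumented.
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_openai import ChatOpenAI

    # A simple prompt -> model -> parser chain.
    prompt = ChatPromptTemplate.from_template(
        "Answer in one sentence: {question}"
    )
    llm = ChatOpenAI(model="gpt-4o-mini")  # assumed model name
    chain = prompt | llm | StrOutputParser()

    # Invoking the chain produces the LLM and chain spans to be traced.
    print(chain.invoke({"question": "What is OpenTelemetry?"}))
```

Call `main()` to run the chain. The imports sit inside the function so that their order relative to `Traceloop.init` is explicit.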

You may see `Failed to export batch` warnings in the console. These can be safely ignored: your traces are still being captured and sent to Scorecard successfully.

5

View traces in Scorecard

Navigate to the Records page in Scorecard to see your LangChain traces.

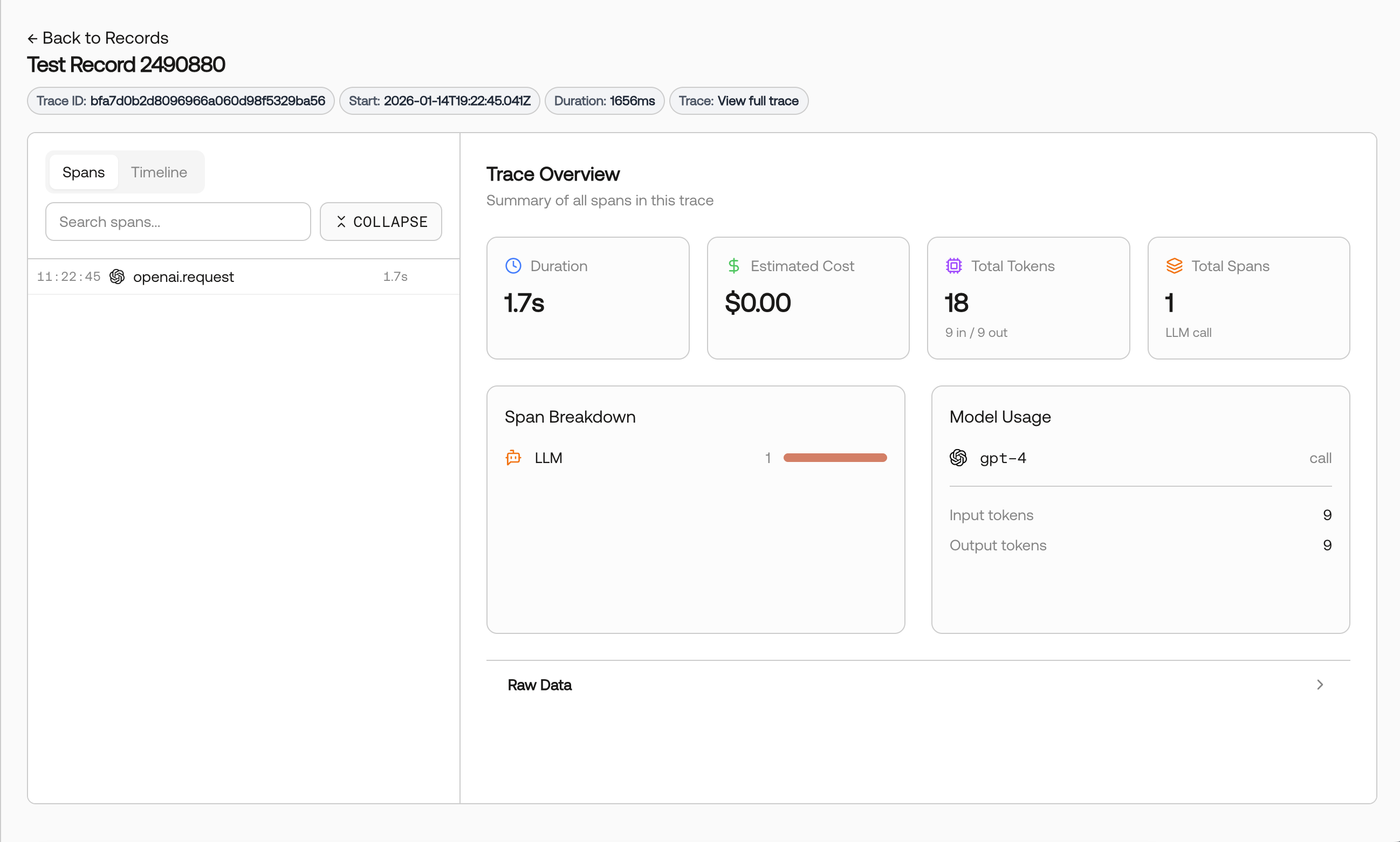

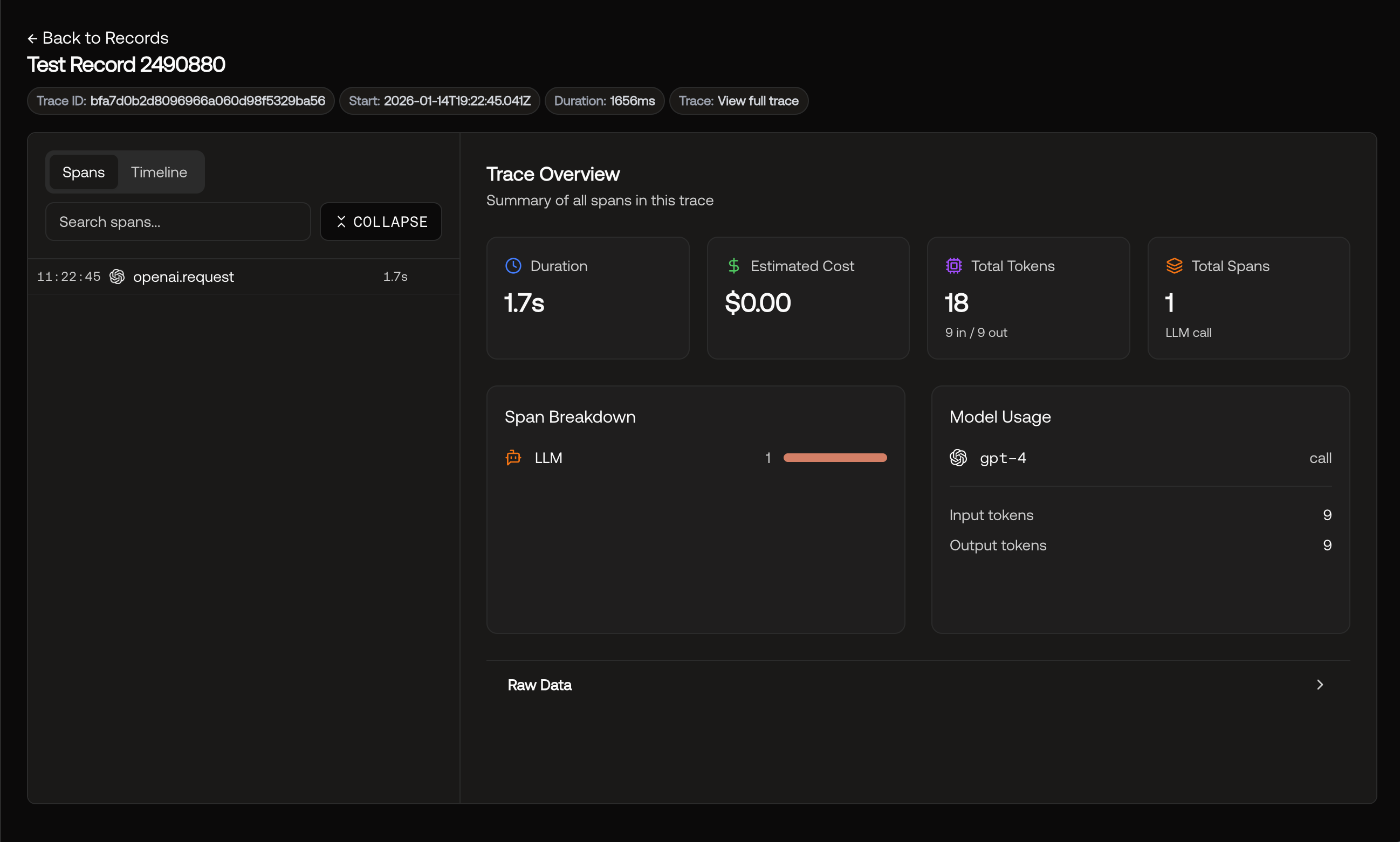

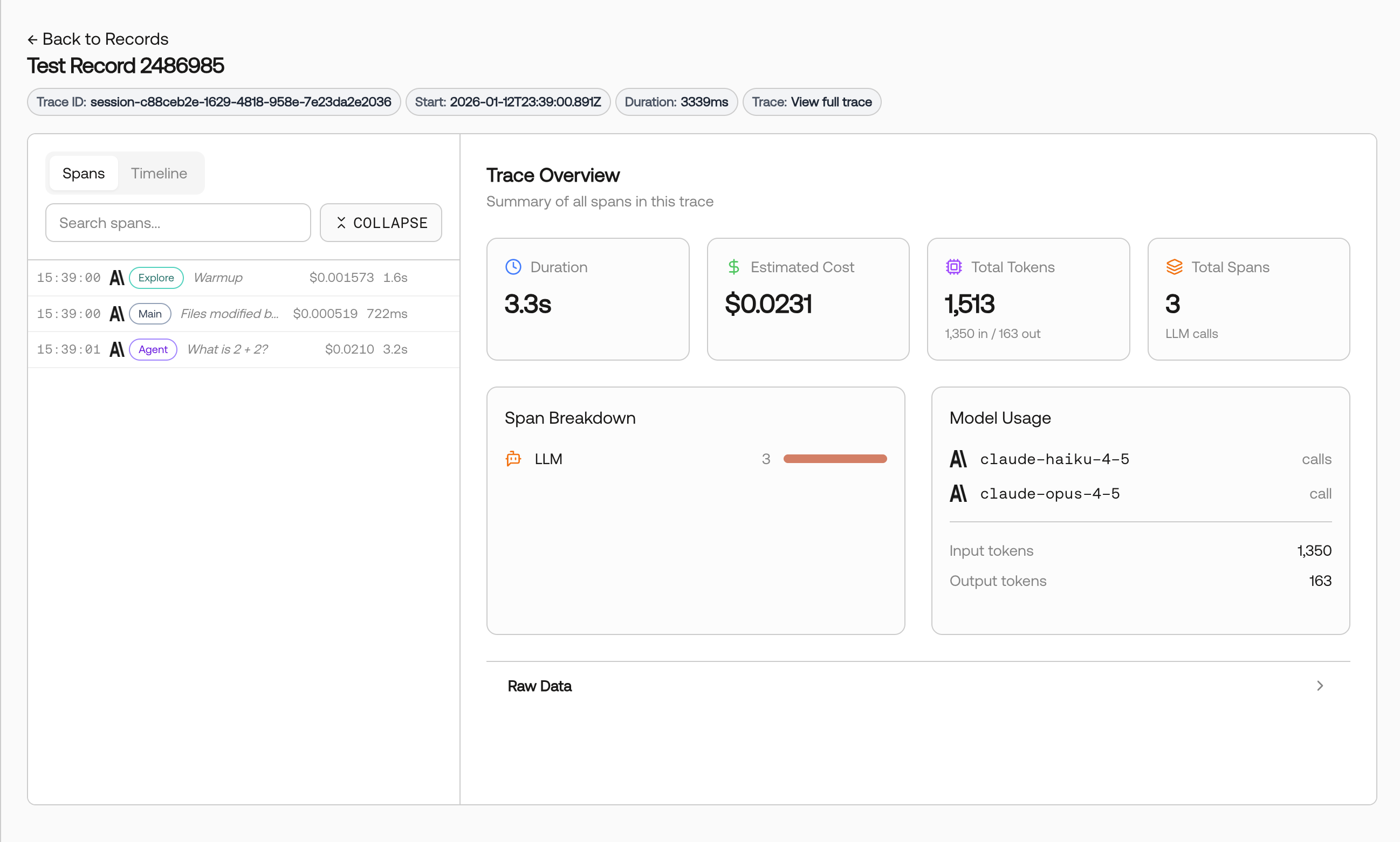

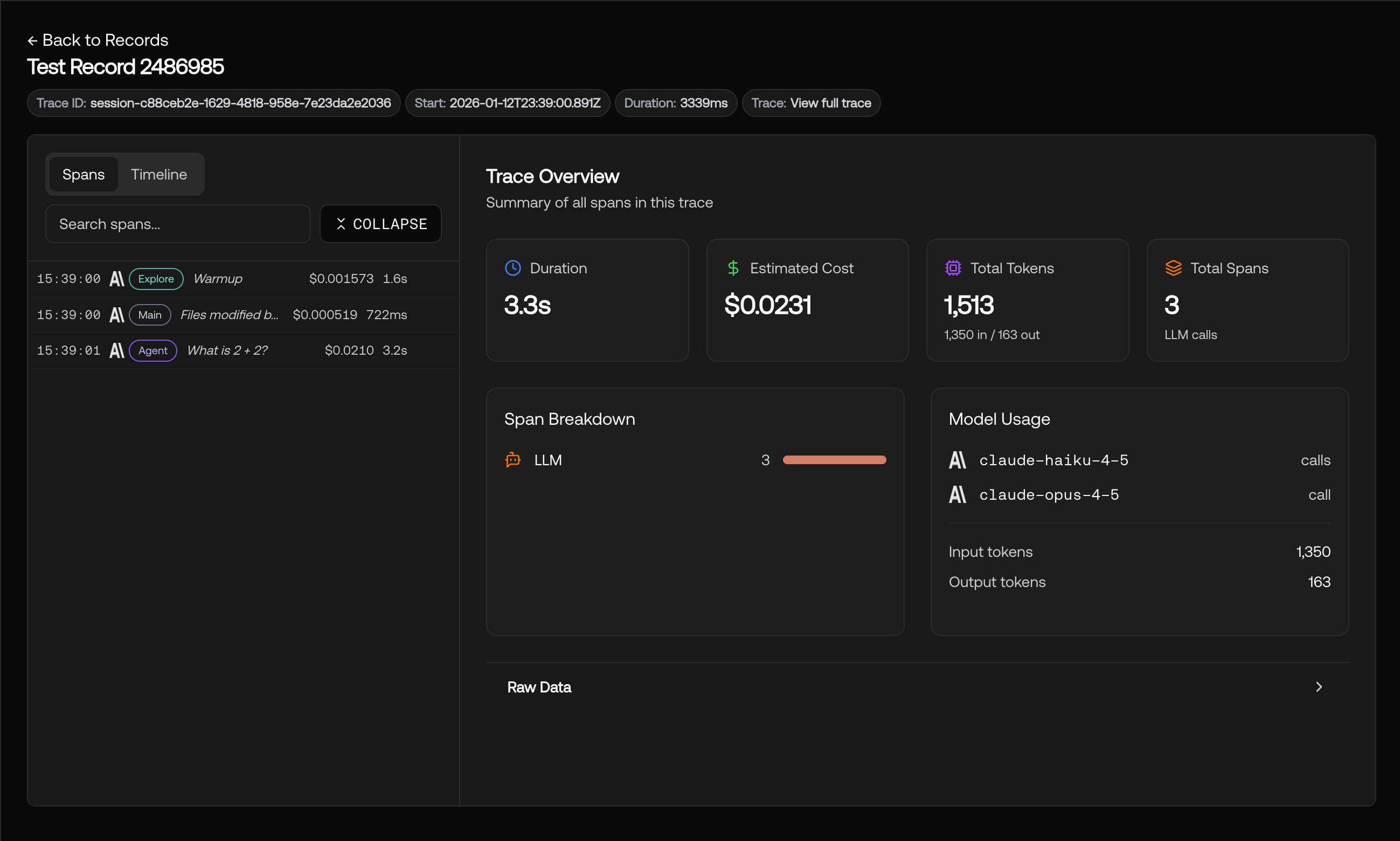

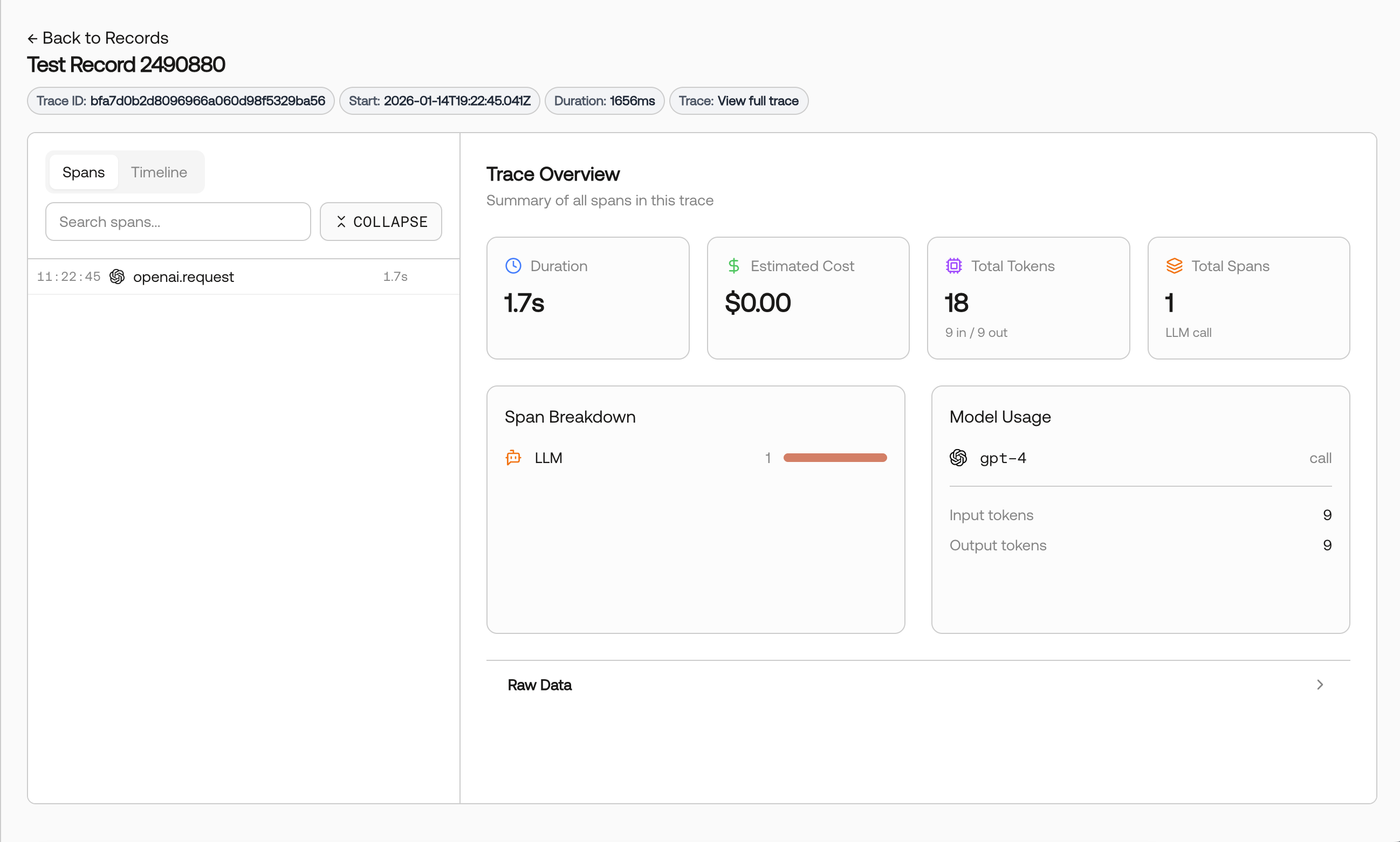

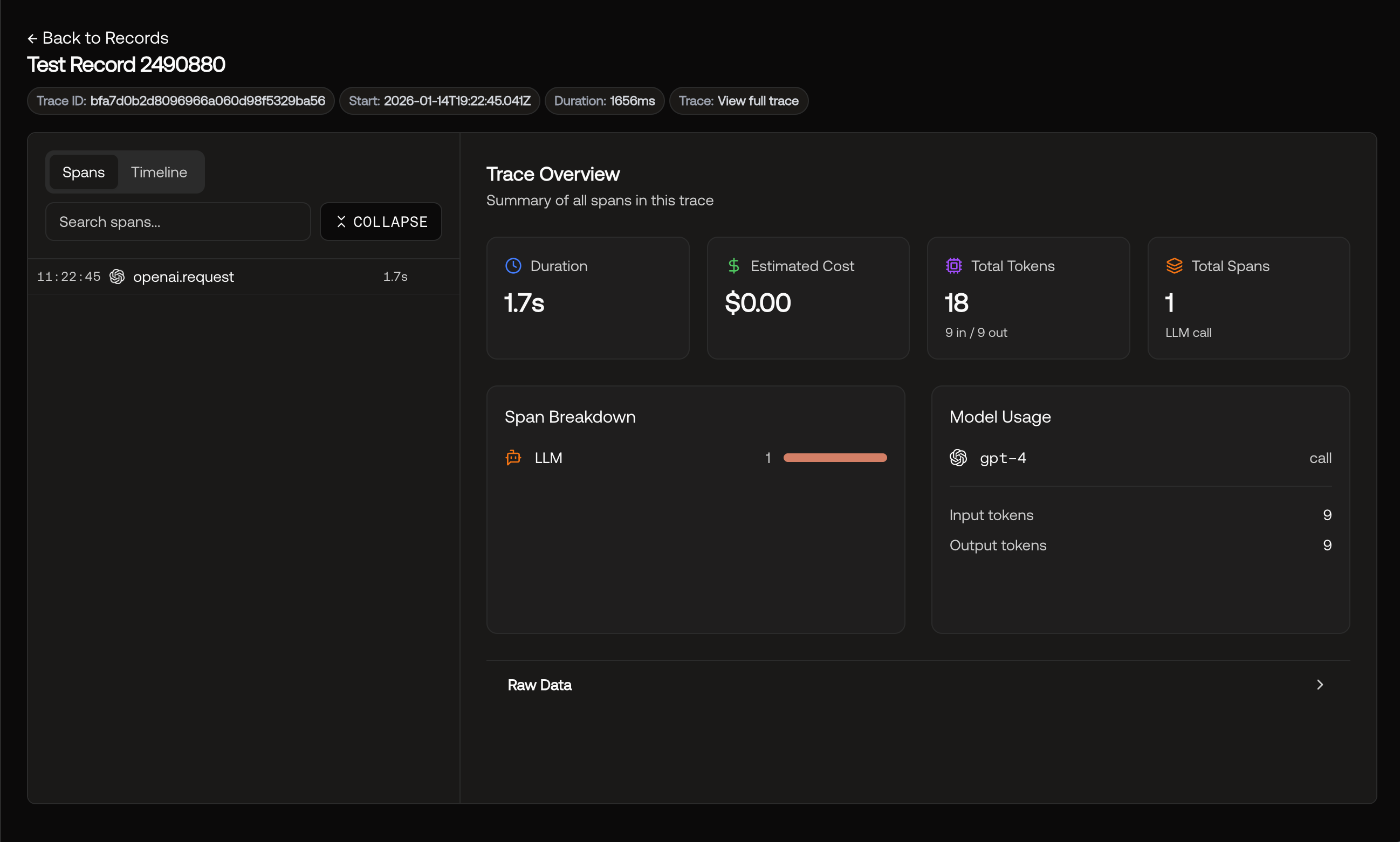

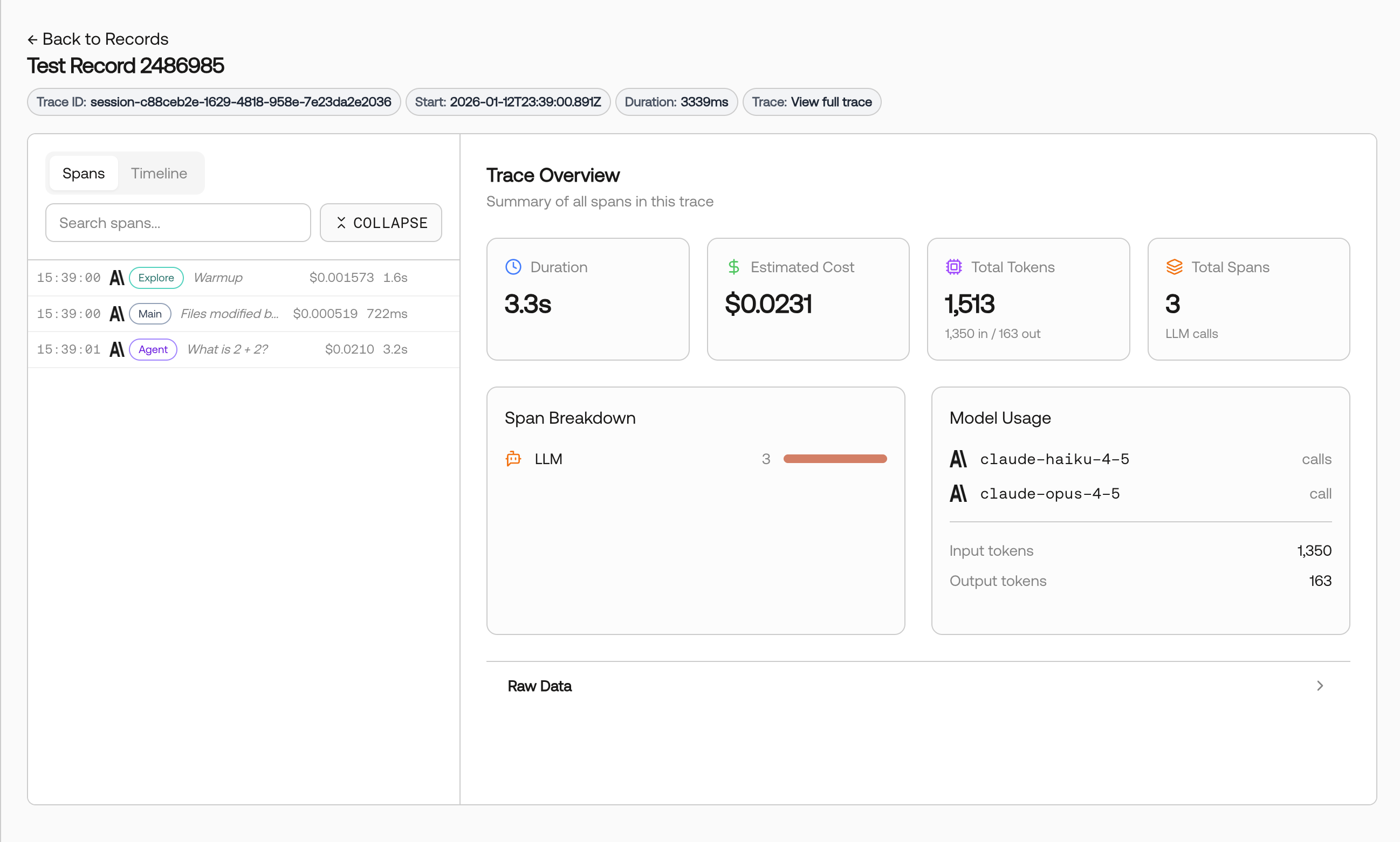

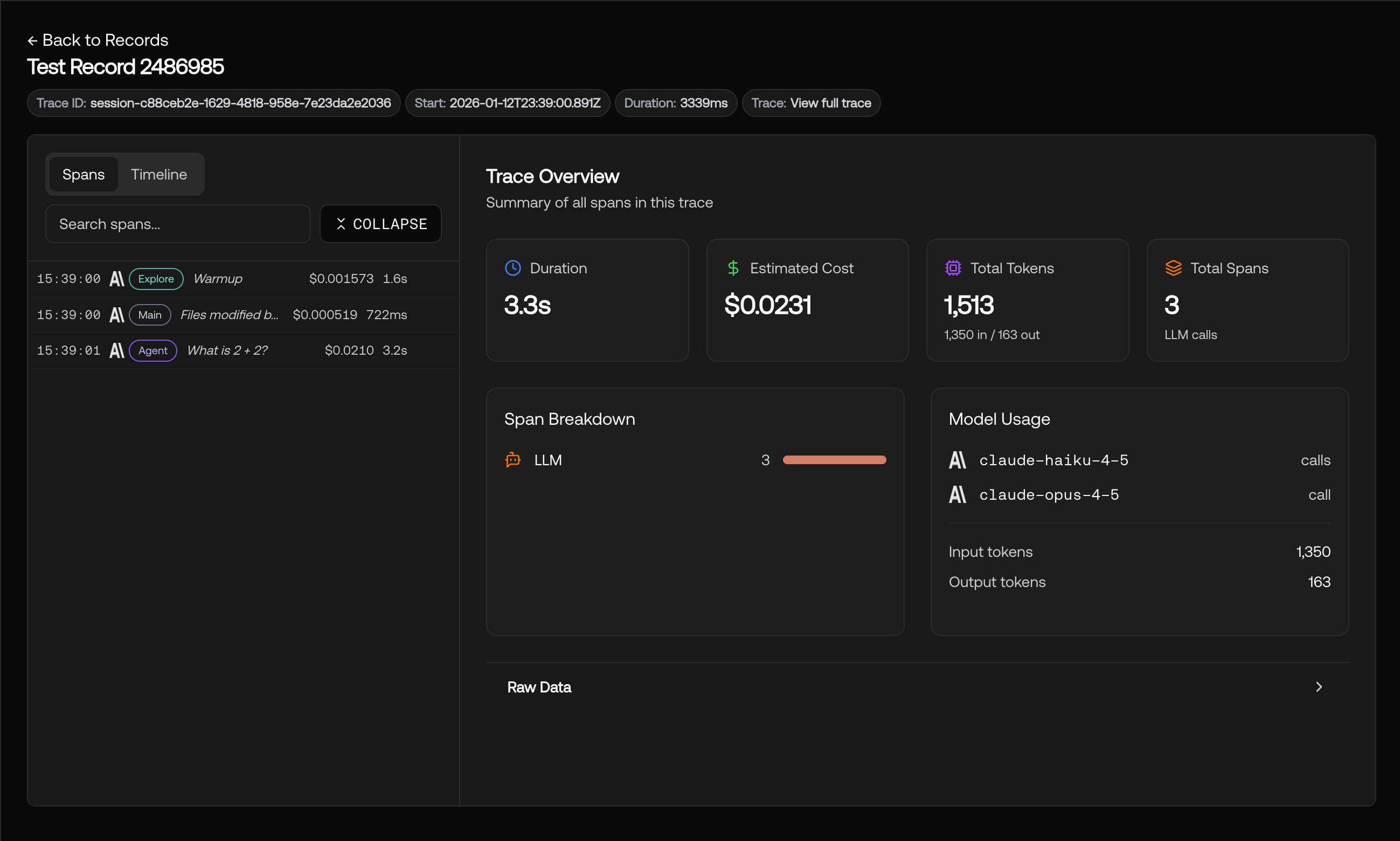

Click on any record to view the full trace details, including chain execution, LLM calls, and token usage.

It may take 1-2 minutes for traces to appear on the Records page.

What Gets Traced

OpenLLMetry automatically captures comprehensive telemetry from your LangChain applications:

| Trace Data | Description |
|---|---|
| LLM Calls | Every LLM invocation with full prompt and completion |
| Chains | Chain executions with inputs, outputs, and intermediate steps |
| Agents | Agent reasoning steps, tool selections, and action outputs |
| Retrievers | Document retrieval operations and retrieved content |
| Token Usage | Input, output, and total token counts per LLM call |
| Errors | Any failures with full error context and stack traces |