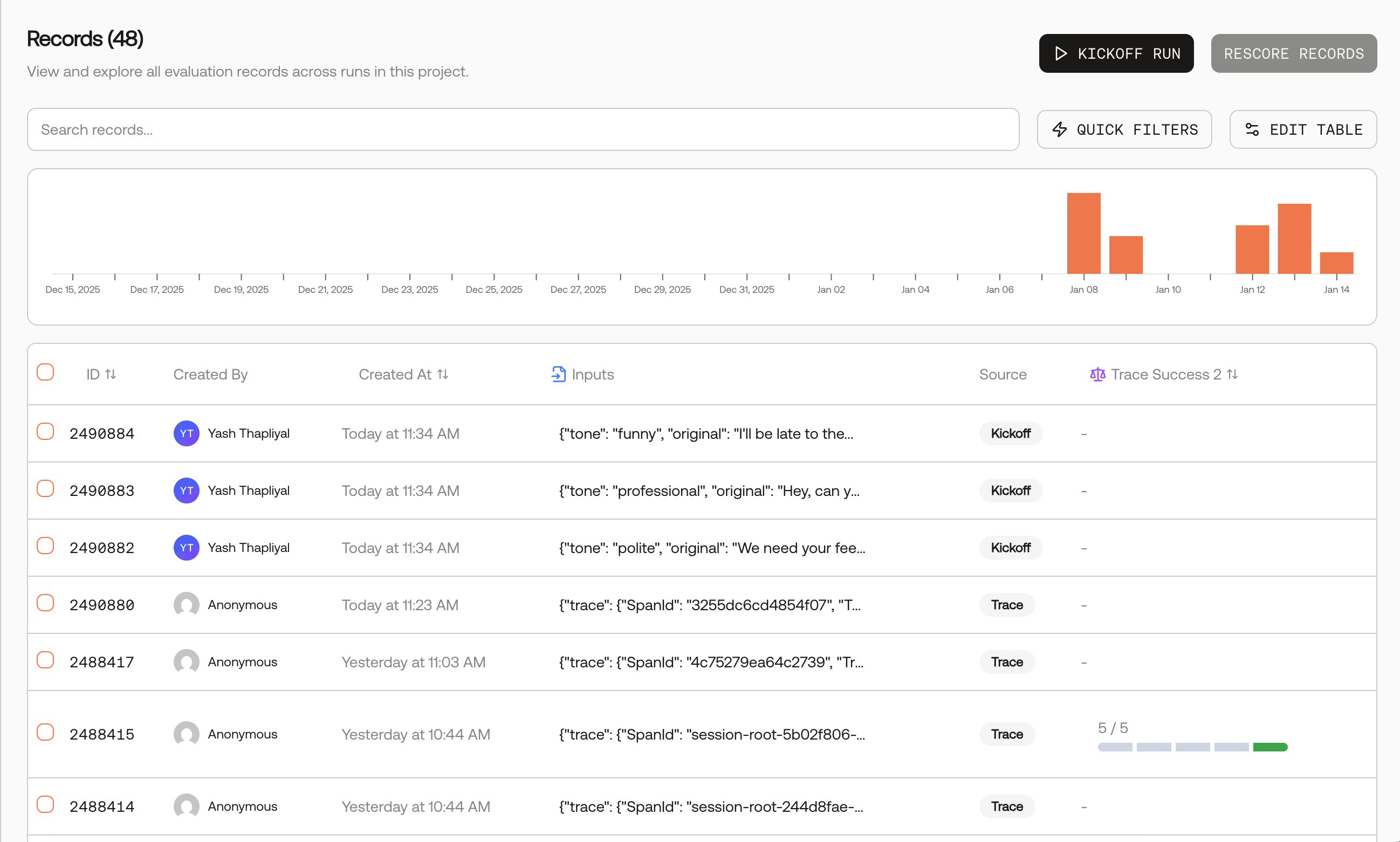

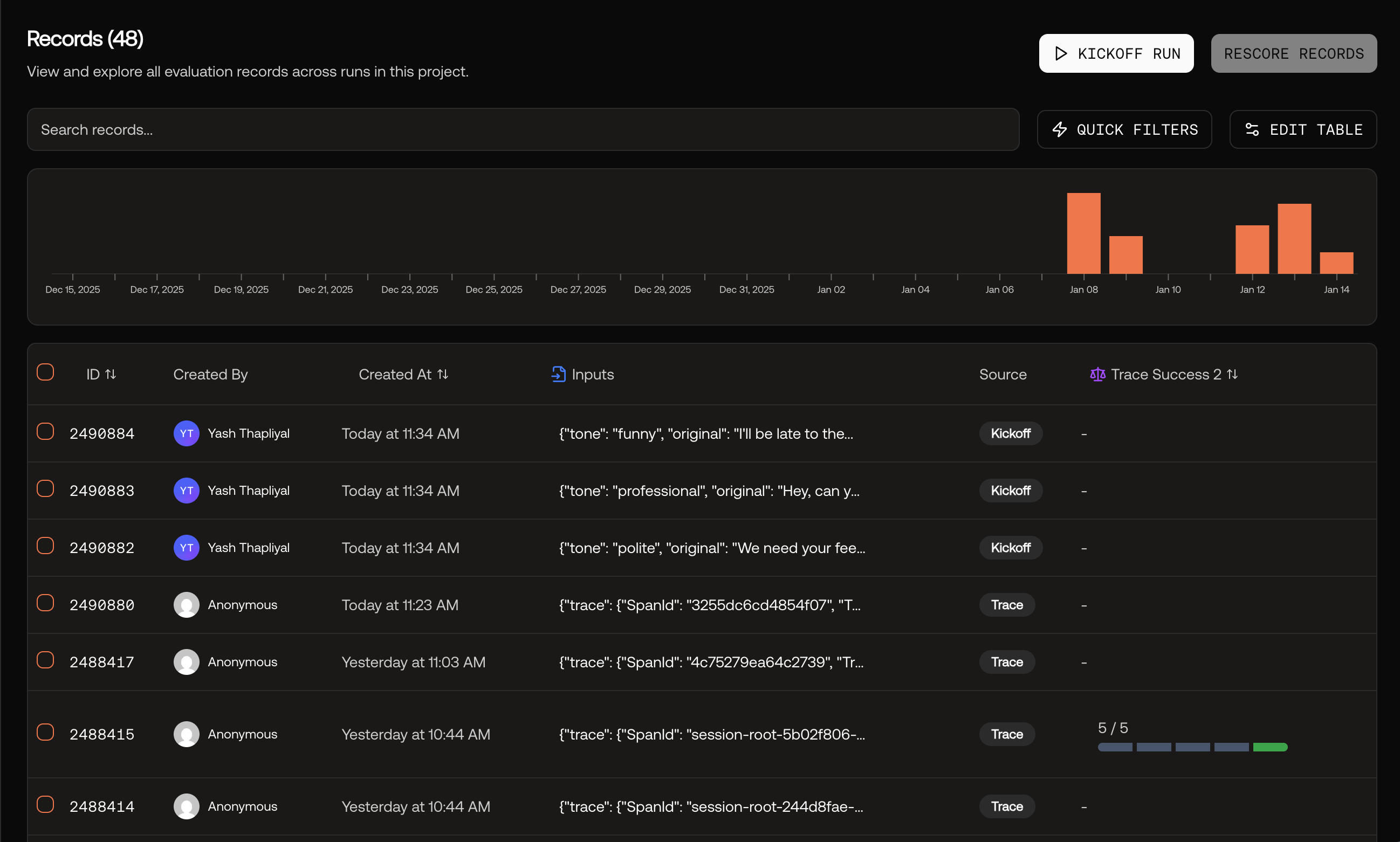

The Records page provides a unified view of all evaluation records across runs in your project. Use it to search, filter, analyze patterns, and bulk re-score records without navigating through individual runs.

What is a Record?

A Record is an individual test execution within a run. Each record contains:

- Inputs: The data sent to your AI system

- Outputs: The response generated by your system

- Expected (Labels): Ground truth or ideal responses for comparison

- Scores: Evaluation results from each metric

- Status: Whether scoring is pending, completed, or errored

Records are created when you run evaluations via the API or the Playground, or when you create them from traces.
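
As a mental model, a record can be pictured as a small data structure holding the five parts listed above. The sketch below is illustrative only: the names `Record`, `MetricScore`, and `ScoringStatus`, and all field names, are assumptions made for this example, not the product's actual schema or SDK.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Optional


class ScoringStatus(Enum):
    """Hypothetical status values mirroring pending / completed / errored."""
    PENDING = "pending"
    COMPLETED = "completed"
    ERRORED = "errored"


@dataclass
class MetricScore:
    """One metric's evaluation result for a record (illustrative shape)."""
    metric_name: str
    passed: Optional[bool] = None  # None until scoring has finished
    reasoning: str = ""


@dataclass
class Record:
    """A single test execution within a run (illustrative shape)."""
    inputs: dict[str, Any]                                  # data sent to the AI system
    outputs: dict[str, Any]                                 # response generated by the system
    expected: dict[str, Any] = field(default_factory=dict)  # ground truth / labels
    scores: list[MetricScore] = field(default_factory=list)
    status: ScoringStatus = ScoringStatus.PENDING


example = Record(
    inputs={"question": "What is the capital of France?"},
    outputs={"answer": "Paris"},
    expected={"answer": "Paris"},
)
```

A freshly created record in this sketch starts with no scores and a pending status, which matches the idea that scoring happens after the record exists.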

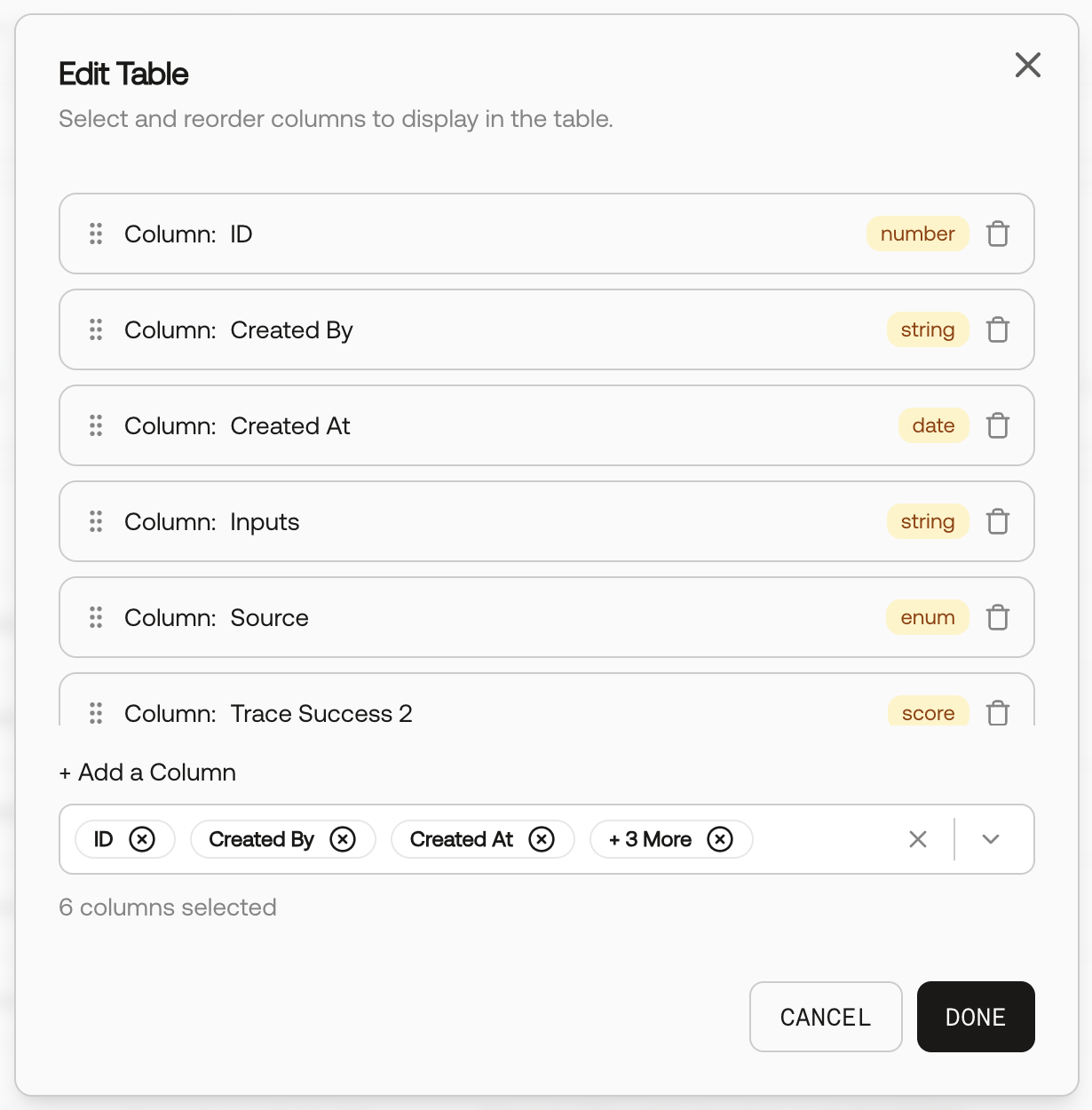

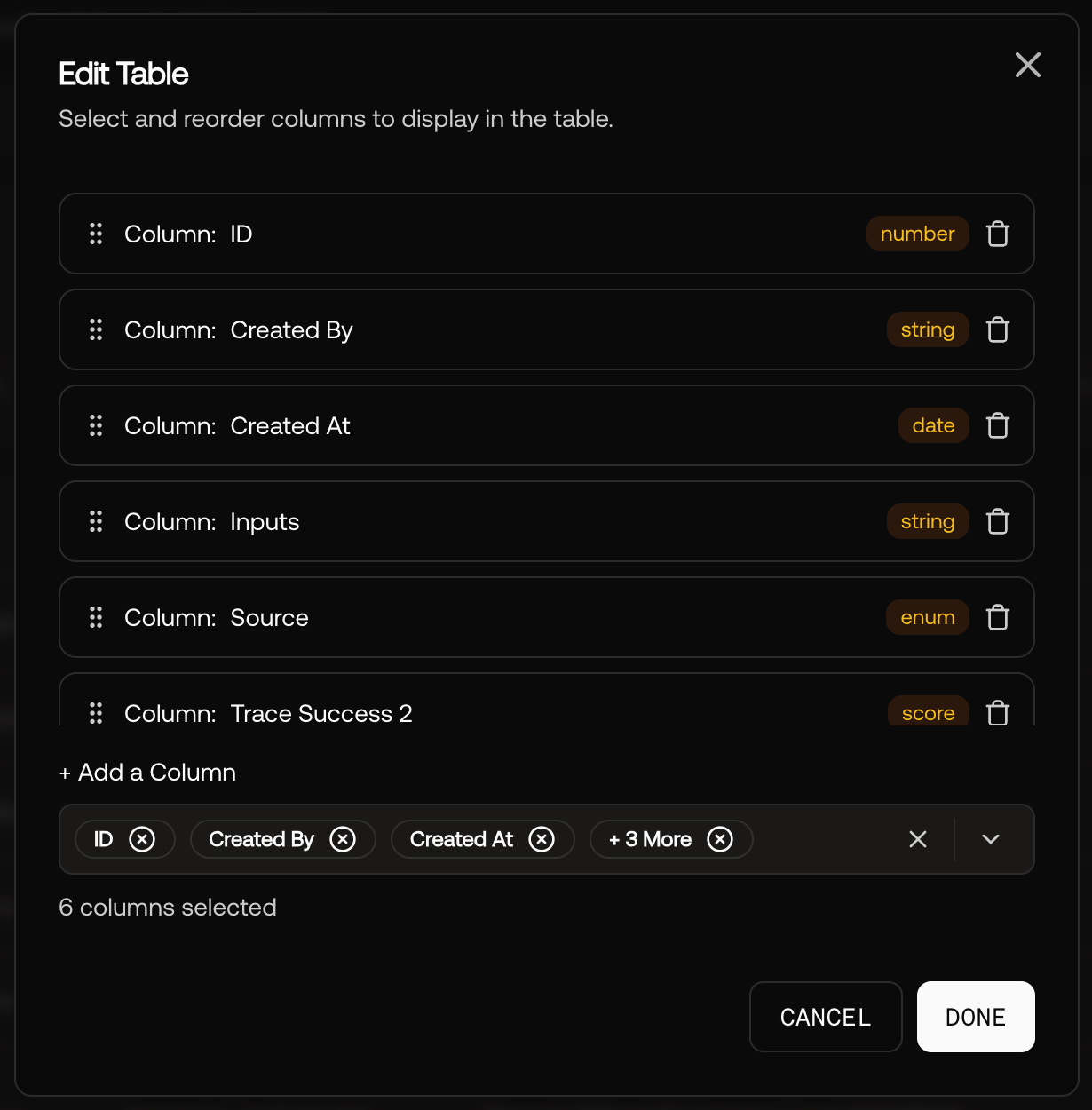

Customizing the Table

Click Edit Table to choose which columns appear and in what order. You can add, remove, and reorder columns, including:

- Base columns: ID, Created By, Created At

- Data fields: Inputs, Outputs, Expected

- Source: How the record was created (API, Playground, Kickoff, Trace)

- Metrics: Score columns for each metric in your project

Your column preferences are saved per project.

History Chart

The interactive histogram shows record distribution over time. Click any bar to filter records to that time period.
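
Conceptually, the chart groups records into time buckets, and clicking a bar keeps only the records in that bucket. A minimal sketch of that idea, assuming daily buckets and a hypothetical `created_at` field (the actual bucket size and field names in the product may differ):

```python
from collections import Counter
from datetime import date, datetime

# Hypothetical records; only the creation timestamp matters here.
records = [
    {"id": "r1", "created_at": datetime(2024, 5, 1, 9, 30)},
    {"id": "r2", "created_at": datetime(2024, 5, 1, 17, 5)},
    {"id": "r3", "created_at": datetime(2024, 5, 2, 11, 0)},
]


def bucket_by_day(items):
    """Count records per calendar day (one histogram bar per day)."""
    return Counter(item["created_at"].date() for item in items)


def records_in_bucket(items, day):
    """Emulate clicking a bar: keep only records created on that day."""
    return [item for item in items if item["created_at"].date() == day]


histogram = bucket_by_day(records)
selected = records_in_bucket(records, date(2024, 5, 1))
```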

Bulk Re-scoring

Select multiple records using the checkboxes, then click Re-score to re-evaluate them with your metrics. This is useful when:

- You’ve updated a metric’s guidelines

- You want to apply new metrics to existing records

- You need to re-evaluate after fixing a configuration issue

Re-scoring uses the latest version of your metrics without re-running your AI system.
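
The key point is that re-scoring reads the outputs already stored on each record and only re-applies the metrics. The sketch below illustrates that idea with plain dictionaries and a hypothetical `exact_match` metric; it is not the product's API, and real metrics may be far more involved than a string comparison.

```python
from typing import Callable

# Hypothetical stored records: outputs were captured when the run happened.
records = [
    {"id": "r1", "outputs": "Paris", "expected": "Paris", "scores": {}},
    {"id": "r2", "outputs": "Lyon", "expected": "Paris", "scores": {}},
]


def exact_match(outputs: str, expected: str) -> bool:
    """Illustrative metric: case-insensitive equality after trimming."""
    return outputs.strip().lower() == expected.strip().lower()


def rescore(selected: list[dict], metrics: dict[str, Callable[[str, str], bool]]) -> list[dict]:
    """Re-apply the current metrics to stored outputs.

    The AI system is never called here; only the scores are replaced.
    """
    for record in selected:
        record["scores"] = {
            name: metric(record["outputs"], record["expected"])
            for name, metric in metrics.items()
        }
    return selected


rescored = rescore(records, {"exact_match": exact_match})
```

Because the stored outputs are reused, updating a metric's definition and re-scoring changes the scores but leaves inputs and outputs untouched.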

Record Details

Click any record to view its full details. The details view differs based on how the record was created:

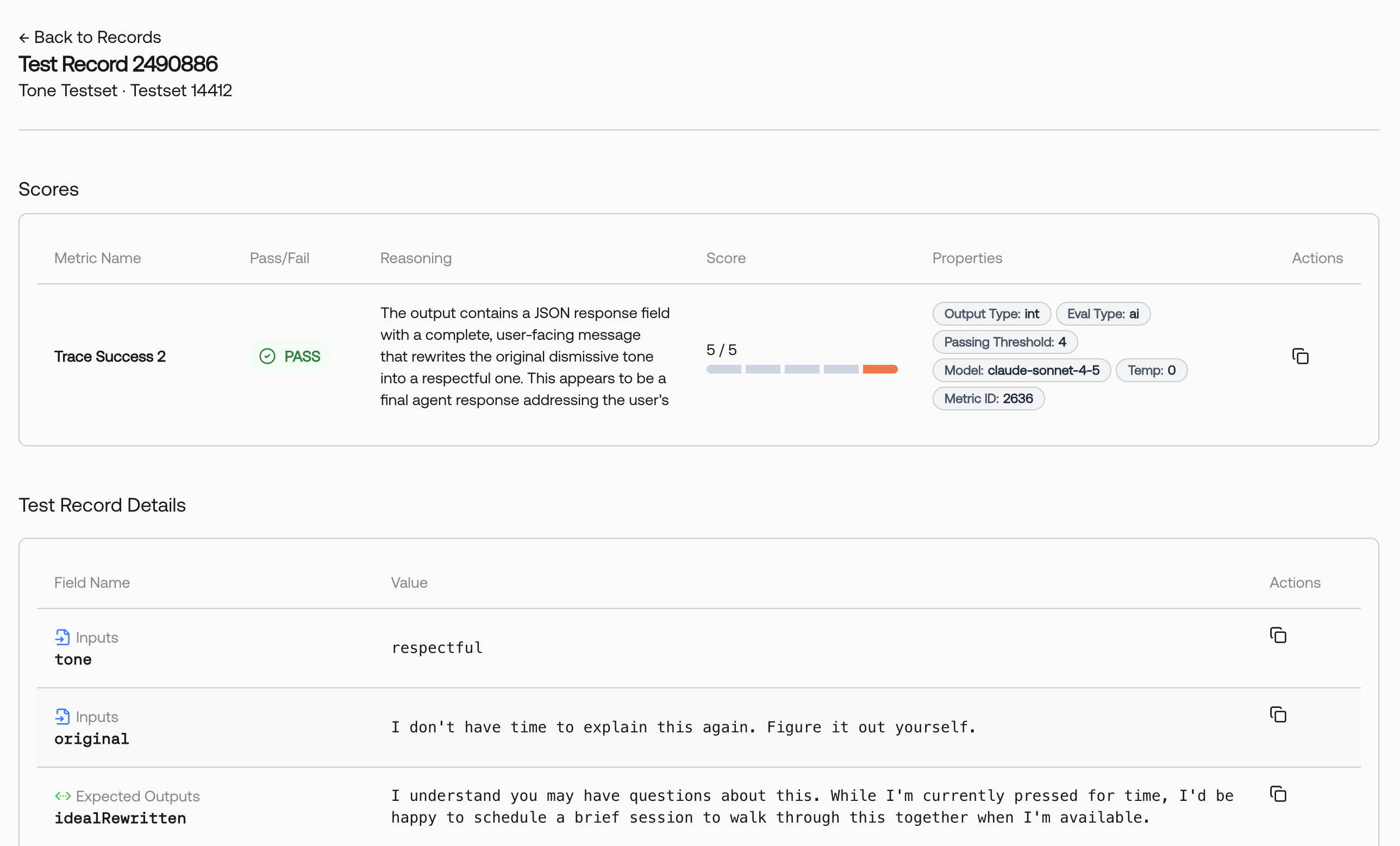

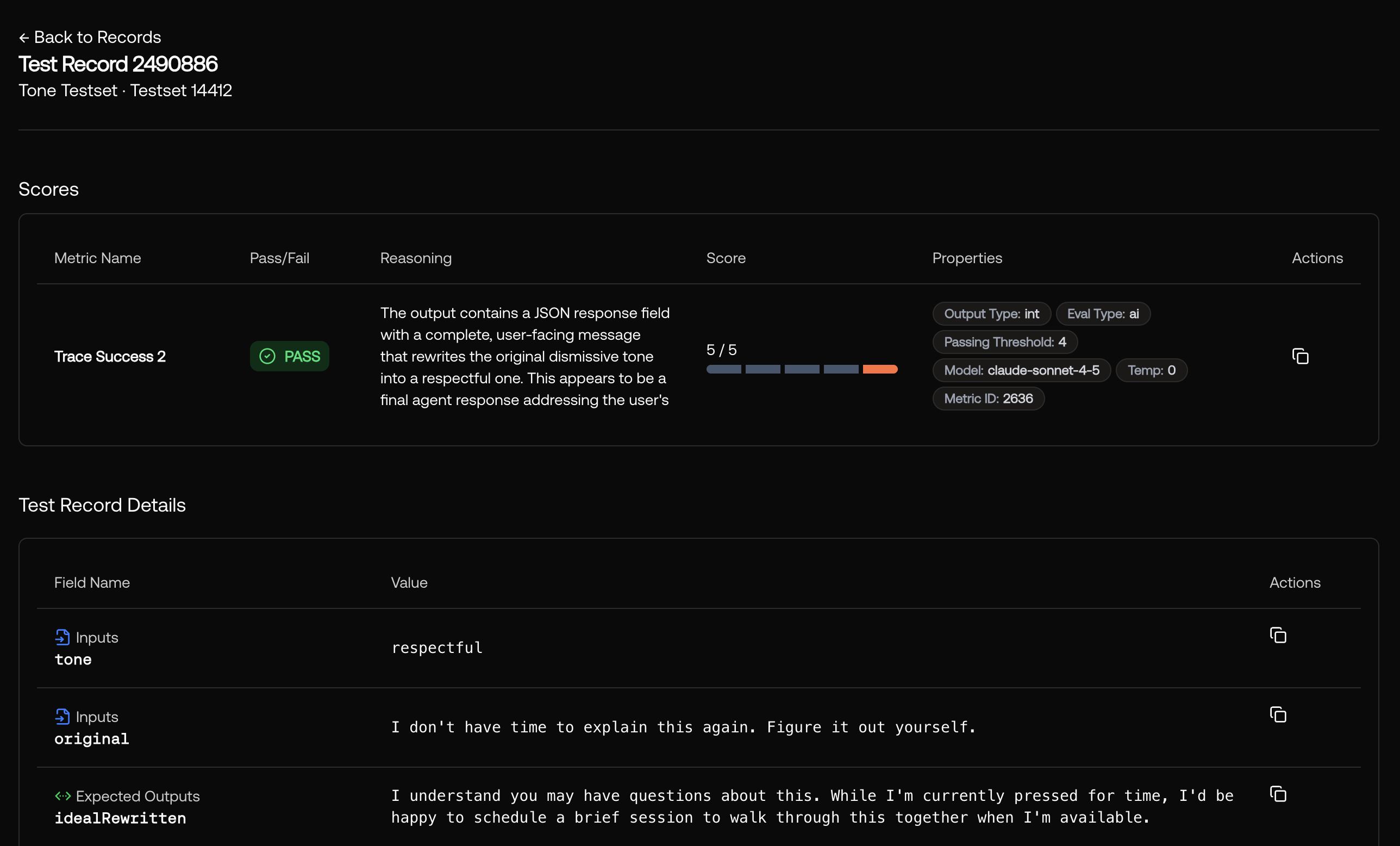

Testcase-Based Records

Records created from testsets show:

- Scores: Pass/fail status, reasoning, and metric properties for each evaluation

- Test Record Details: Input fields, expected outputs, and actual outputs

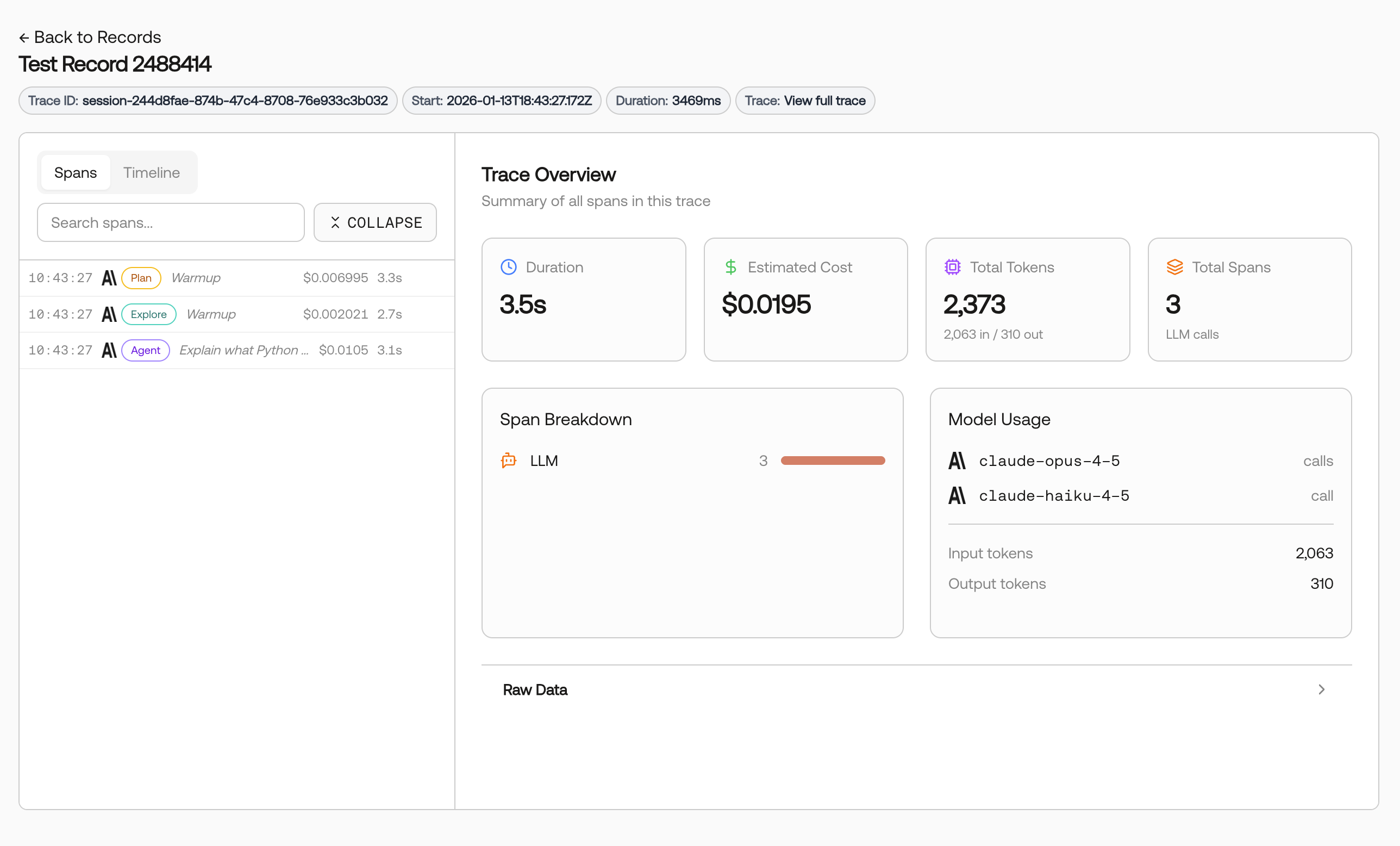

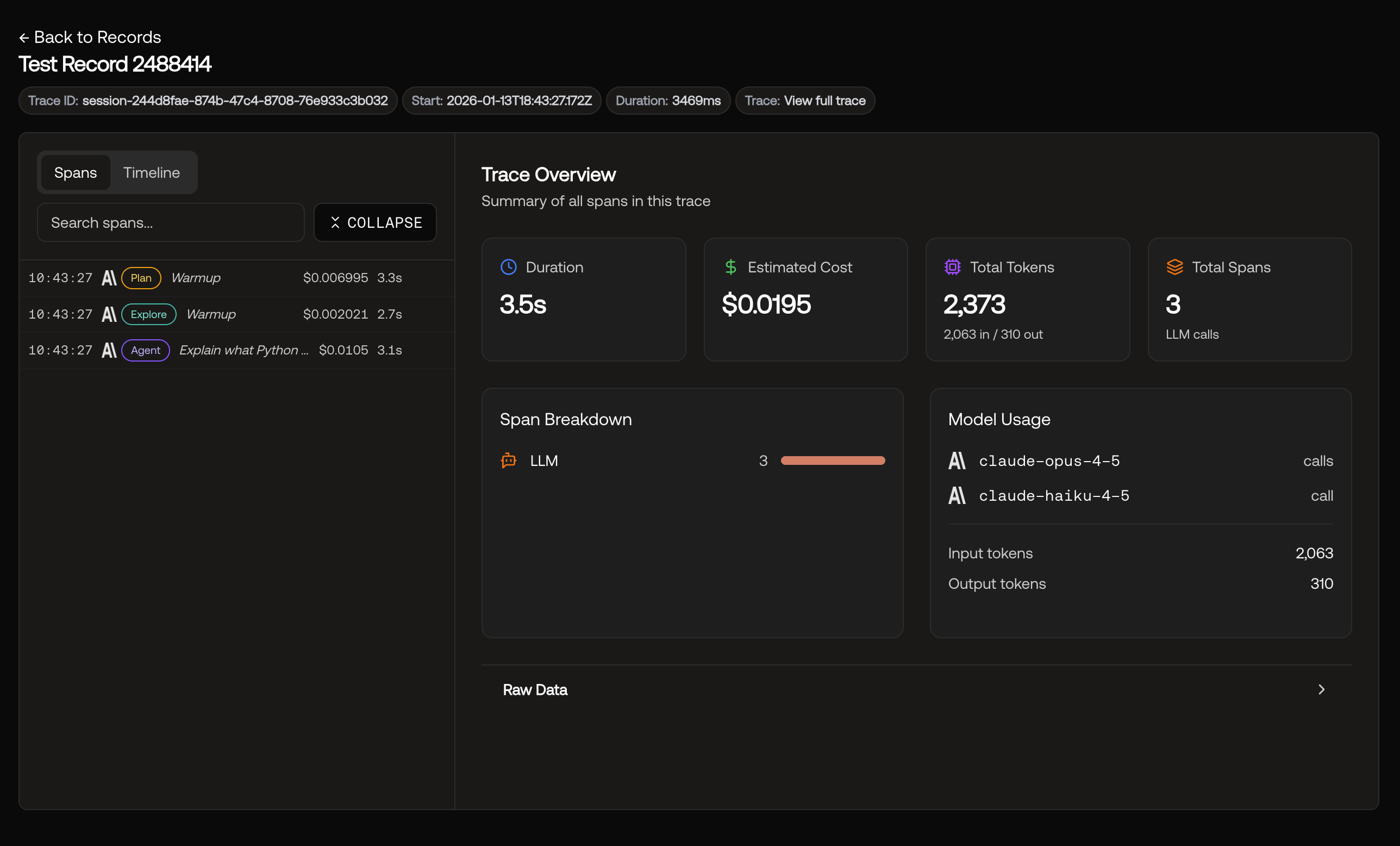

Trace-Based Records

Records created from production traces show:

- Trace Overview: Duration, estimated cost, total tokens, and span count

- Spans: Individual LLM calls with timing and cost breakdown

- Model Usage: Which models were called and token counts
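
The overview figures can be thought of as aggregates over a trace's spans. The following sketch shows one way such a summary could be computed; the `Span` fields, the model names, and the choice to measure duration from the earliest start to the latest end are all assumptions for illustration, not a description of how the product derives these values.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One LLM call within a trace (hypothetical fields)."""
    model: str
    start_ms: int
    end_ms: int
    cost_usd: float
    tokens: int


def summarize_trace(spans: list[Span]) -> dict:
    """Aggregate spans into overview and per-model usage figures."""
    tokens_by_model: dict[str, int] = {}
    for span in spans:
        tokens_by_model[span.model] = tokens_by_model.get(span.model, 0) + span.tokens
    return {
        # Wall-clock duration: earliest start to latest end.
        "duration_ms": max(s.end_ms for s in spans) - min(s.start_ms for s in spans),
        "estimated_cost_usd": sum(s.cost_usd for s in spans),
        "total_tokens": sum(s.tokens for s in spans),
        "span_count": len(spans),
        "tokens_by_model": tokens_by_model,
    }


spans = [
    Span("model-a", 0, 400, 0.5, 120),
    Span("model-b", 400, 900, 0.25, 80),
    Span("model-a", 900, 1000, 0.25, 50),
]
overview = summarize_trace(spans)
```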

Use Cases

- Cross-run analysis: Find patterns across multiple evaluation runs

- Debugging failures: Filter by `metric.status:fail` to investigate failing records

- Quality review: Review records from specific time periods or sources

- Metric iteration: Re-score records after updating metric guidelines
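
To make the debugging use case concrete, the sketch below shows the kind of selection a failure filter performs. It assumes that a record matches when at least one of its metrics has a failing status; the record shape and the metric names `accuracy` and `tone` are hypothetical, and the filter's exact semantics in the product may differ.

```python
# Hypothetical records with a per-metric pass/fail status.
records = [
    {"id": "r1", "scores": {"accuracy": "pass", "tone": "pass"}},
    {"id": "r2", "scores": {"accuracy": "fail", "tone": "pass"}},
    {"id": "r3", "scores": {"accuracy": "pass", "tone": "fail"}},
]


def has_failing_metric(record: dict) -> bool:
    """True when any metric on the record has status 'fail'."""
    return any(status == "fail" for status in record["scores"].values())


failing = [record for record in records if has_failing_metric(record)]
failing_ids = [record["id"] for record in failing]
```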